NVIDIA has released a new AI Blueprint for video search and summarization. The blueprint combines generative AI, vision language models (VLMs), and large language models (LLMs) to understand video content in depth and let users interact with it in natural language, going beyond what traditional video analysis can do. The editor of Downcodes explains the core functions and application scenarios of this technology below.

Traditional video analysis relies on fixed-function models that can only recognize a preset list of objects. The AI Blueprint for Video Search and Summarization addresses this limitation: by combining generative AI, VLMs, and LLMs, it can describe what happens in a video and answer natural-language questions about it.

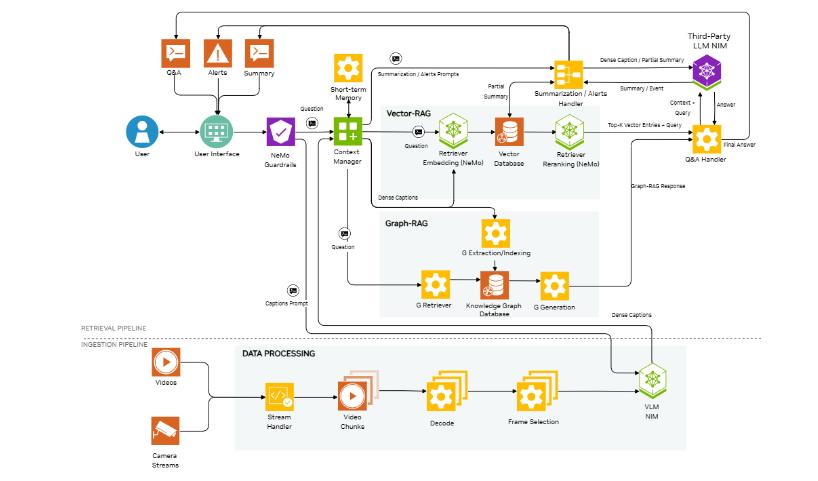

The system is built on NVIDIA NIM microservices, and its main strength is video understanding. It combines video chunking, dense caption generation, and knowledge graph construction so that it can analyze very long videos. Through a REST API, users can generate video summaries, ask questions interactively, and set up custom event monitoring on live video streams.

Architecturally, the solution consists of several key components: a stream processor that handles interaction and synchronization between the other components; NeMo Guardrails, which checks user input for compliance; a VLM pipeline based on the NVIDIA DeepStream SDK, which handles video decoding and feature extraction; a vector database that stores intermediate results; a Context-Aware RAG module that consolidates those results into a unified summary; and a Graph-RAG module that uses a graph database to capture complex relationships in the video.

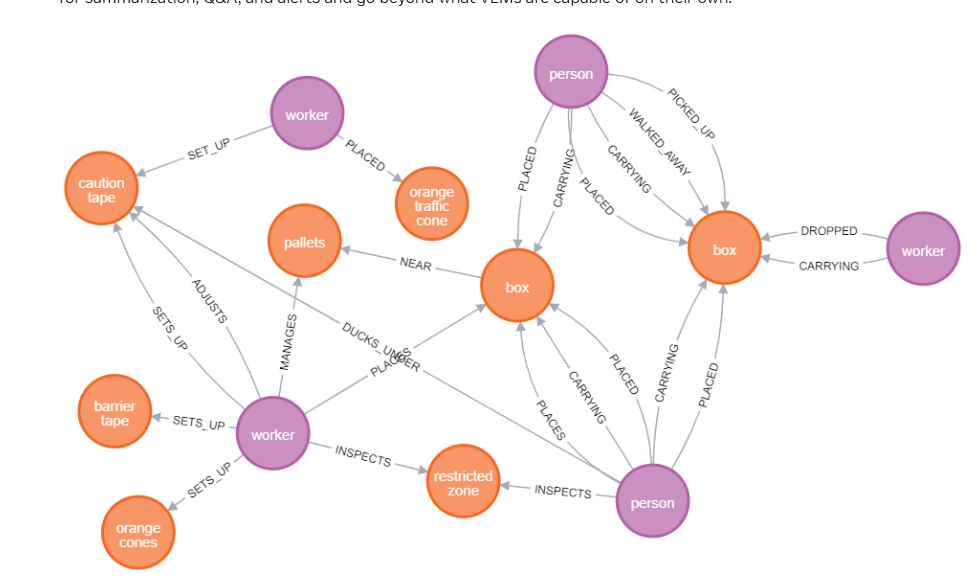

In practice, the system first cuts the video into smaller chunks, generates a dense description of each chunk with the VLM, and then uses the LLM to summarize and analyze the descriptions. For live streams, the system processes incoming chunks continuously and generates summaries in real time. It also builds a knowledge graph from the descriptions, which lets it capture relationships between entities and events in the video and answer more complex questions.
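The chunk, describe, and summarize flow above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the blueprint's implementation: `split_into_chunks`, `summarize_video`, `fake_vlm_caption`, and `fake_llm_summarize` are hypothetical names, and the two `fake_*` functions are placeholders standing in for real VLM and LLM calls.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Chunk:
    start_s: float  # chunk start time, in seconds
    end_s: float    # chunk end time, in seconds


def split_into_chunks(duration_s: float, chunk_s: float) -> List[Chunk]:
    """Cut a video of the given duration into consecutive fixed-length chunks."""
    if duration_s < 0 or chunk_s <= 0:
        raise ValueError("duration_s must be >= 0 and chunk_s must be > 0")
    chunks: List[Chunk] = []
    start = 0.0
    while start < duration_s:
        end = min(start + chunk_s, duration_s)  # last chunk may be shorter
        chunks.append(Chunk(start, end))
        start = end
    return chunks


def summarize_video(
    duration_s: float,
    chunk_s: float,
    caption_fn: Callable[[Chunk], str],
    summarize_fn: Callable[[List[str]], str],
) -> str:
    """Caption every chunk, then reduce the timestamped captions to one summary."""
    chunks = split_into_chunks(duration_s, chunk_s)
    captions = [
        f"[{c.start_s:.0f}s-{c.end_s:.0f}s] {caption_fn(c)}" for c in chunks
    ]
    return summarize_fn(captions)


# Hypothetical placeholders: a real system would call a VLM and an LLM here.
def fake_vlm_caption(chunk: Chunk) -> str:
    return f"placeholder description of {chunk.end_s - chunk.start_s:.0f}s of video"


def fake_llm_summarize(captions: List[str]) -> str:
    return " | ".join(captions)


if __name__ == "__main__":
    print(summarize_video(25, 10, fake_vlm_caption, fake_llm_summarize))
```

Passing the captioning and summarizing steps in as functions keeps the sketch independent of any particular model or endpoint. For a live stream, the same captioning step could be applied to each chunk as it arrives, with the summary regenerated periodically.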

The technology is aimed at settings such as factories, warehouses, retail stores, airports, and transportation hubs. Operations teams there can query video in natural language, get richer analytics, and make better-informed decisions.

NVIDIA has opened early access applications for the blueprint. Developers can pick suitable models from the NVIDIA API catalog and either use NVIDIA-hosted services or deploy locally. This flexibility helps enterprises build video analytics solutions tailored to their own needs.

As AI technology continues to advance, video analysis is changing quickly, and NVIDIA's blueprint is likely to accelerate the adoption of intelligent video analysis across industries.

Details: https://developer.nvidia.com/blog/build-a-video-search-and-summarization-agent-with-nvidia-ai-blueprint

All in all, NVIDIA's AI Blueprint for video search and summarization is a significant step forward for intelligent video analysis. Its video understanding capabilities and flexible deployment options make it applicable to many industries, and its future development is worth following.