The Downcodes editor brings you the latest technology news. Seattle startup Moondream has launched moondream2, a compact visual language model that is drawing attention for pairing a small size with strong performance. The open-source model did well across a range of benchmarks, in some cases surpassing competitors with more parameters, and it opens up new possibilities for local image recognition on smartphones. Let's take a closer look at what makes moondream2 unique and the technology behind it.

Moondream, a Seattle startup, recently launched a compact visual language model called moondream2. Despite its small size, the model performed well in a range of benchmarks and has attracted considerable attention. Because it is open source, moondream2 could bring local image recognition to smartphones.

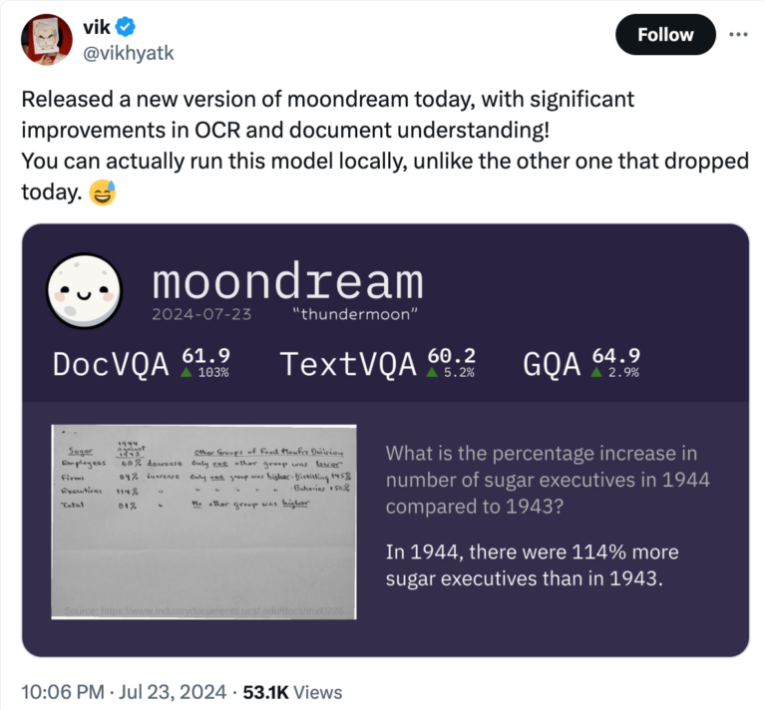

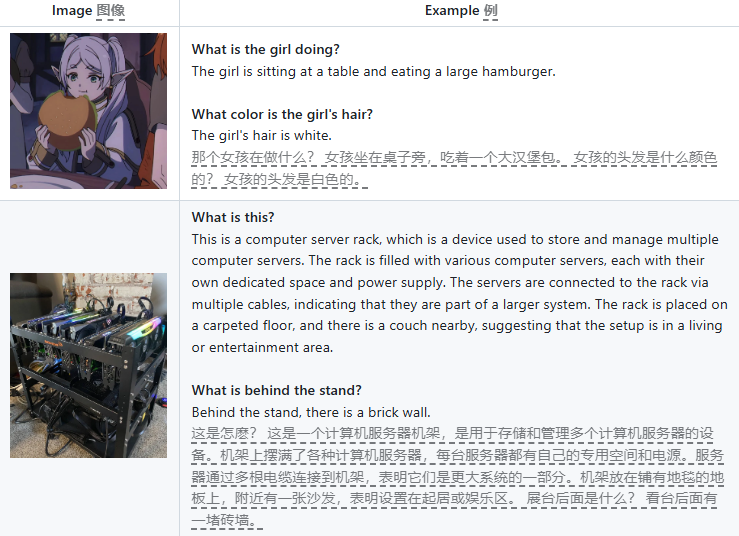

Moondream2 was officially released in March. The model accepts text and image inputs and can answer questions, extract text (OCR), count objects, and classify items. Since the release, the Moondream team has kept updating the model and steadily improving its benchmark performance. The July release shows significant improvements in OCR and document understanding, particularly in the analysis of historical economic data. The model scores above 60% on each of DocVQA, TextVQA, and GQA, a strong result for a model that runs locally.

A notable feature of moondream2 is its compact size. With only 1.6 billion parameters, it can run not only on cloud servers but also on local computers and even on lower-powered devices such as smartphones and single-board computers.
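
To give a feel for why this parameter count matters on small devices, here is a minimal back-of-the-envelope sketch in Python. It assumes the 1.6 billion figure quoted above and the usual storage cost per parameter at common numeric precisions. The helper name `weight_memory_gib` and the list of precisions are illustrative assumptions, not something published by the Moondream team, and the estimate covers the weights only (no activations or runtime overhead).

```python
# Rough estimate of the memory needed just to hold a model's weights.
# Assumption: every parameter is stored at the same precision.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}

PARAMS = 1.6e9  # parameter count quoted in the article


def weight_memory_gib(num_params: float, precision: str) -> float:
    """Return the approximate weight storage in GiB (1 GiB = 2**30 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 2**30


if __name__ == "__main__":
    for precision in BYTES_PER_PARAM:
        size = weight_memory_gib(PARAMS, precision)
        print(f"{precision}: {size:.2f} GiB")
```

Under these assumptions the weights need roughly 3 GiB at 16-bit precision and under 1 GiB at 4-bit precision, which is within reach of a recent smartphone or single-board computer. Whether a given device can actually run the model also depends on factors this sketch ignores.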

Despite its small size, it performs on par with some competing models that have billions of parameters, and it even outperforms these larger models on some benchmarks.

In a comparison of visual language models for mobile devices, researchers pointed out that although moondream2 has only about 1.7 billion parameters, it performs on par with 7-billion-parameter models and falls slightly short only on the SQA dataset. This shows that although small models perform well, they still face challenges in understanding specific contexts.

Vikhyat Korrapati, the developer of the model, said that moondream2 builds on other work, including SigLIP, Microsoft's Phi-1.5, and the LLaVA training dataset. The open-source model is available as a free download on GitHub, with a demo hosted on Hugging Face. On GitHub, moondream2 has also drawn wide attention from the developer community, collecting more than 5,000 stars.

This success has attracted investors: Moondream raised $4.5 million in a seed round led by Felicis Ventures, Microsoft's M12 GitHub Fund, and Ascend. The company's CEO, Jay Allen, worked at Amazon Web Services (AWS) for many years and now leads the growing startup.

Moondream2 is part of a growing line of specialized, optimized open-source models that need fewer resources while delivering performance similar to larger, older models. There are already some small local models on the market, such as Apple's smart assistant and Google's Gemini Nano, but both companies still hand more complex tasks off to the cloud.

Hugging Face: https://huggingface.co/vikhyatk/moondream2

GitHub: https://github.com/vikhyat/moondream

The arrival of moondream2 points to rapid progress in lightweight AI models and opens up new possibilities for on-device AI applications. Its open-source nature also encourages participation from the developer community and brings fresh energy to AI development. We look forward to more innovations like this in the future!