Meta AI researchers have proposed AdaCache, a training-free method for accelerating inference in video diffusion Transformer models (DiTs). DiTs perform well in video generation, but their large model size and complex attention mechanisms make inference slow, which limits their application. AdaCache builds on the observation that "not all videos are the same": it caches computation results and tailors the caching strategy to each video, significantly improving inference efficiency while preserving generation quality. The editor of Downcodes explains the technique in detail below.

Generating high-quality, temporally consistent videos requires significant computational resources, especially over longer time spans. The latest Diffusion Transformer models (DiTs) have made significant progress in video generation, but they rely on larger models and more complex attention mechanisms, which slows inference and makes this cost worse. To address the problem, researchers at Meta AI proposed AdaCache, a training-free method for accelerating video DiTs.

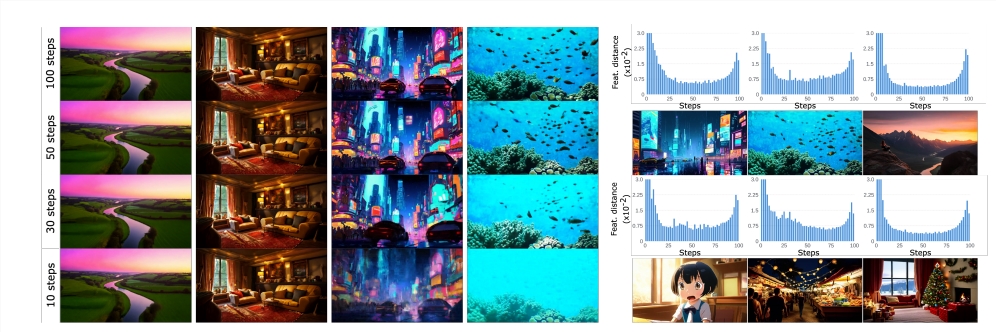

The core idea of AdaCache rests on the observation that "not all videos are the same": some videos need fewer denoising steps than others to reach reasonable quality. Building on this, the method not only caches computation results during the diffusion process but also devises a caching schedule tailored to each video generation, aiming for the best trade-off between quality and latency.
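The content-dependent caching idea can be illustrated with a minimal sketch. This is not the authors' implementation: the `CODEBOOK` thresholds, the mean-absolute-difference metric, and the names `steps_to_skip` and `run_with_cache` are all assumptions chosen for illustration. The sketch recomputes a block's output only when the last two computed outputs differ noticeably, and otherwise reuses the cached output for a number of steps.

```python
from typing import Callable, List, Sequence, Tuple

# Hypothetical codebook: (distance threshold, number of steps to reuse the cache).
# A small change between computed outputs allows a longer reuse window.
CODEBOOK = [(0.05, 4), (0.10, 2), (0.20, 1)]


def l1_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean absolute difference between two equally sized vectors."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def steps_to_skip(distance: float) -> int:
    """Map a distance to how many upcoming steps may reuse the cache."""
    for threshold, skip in CODEBOOK:
        if distance < threshold:
            return skip
    return 0  # large change: recompute on the next step


def run_with_cache(
    block: Callable[[int], List[float]], num_steps: int
) -> Tuple[List[int], List[List[float]]]:
    """Run a toy denoising loop; return (computed step indices, per-step outputs)."""
    computed: List[int] = []
    outputs: List[List[float]] = []
    cached = None
    skip_left = 0
    for t in range(num_steps):
        if cached is not None and skip_left > 0:
            outputs.append(cached)  # reuse the cached output, no computation
            skip_left -= 1
            continue
        fresh = block(t)  # the expensive computation in a real model
        computed.append(t)
        if cached is not None:
            skip_left = steps_to_skip(l1_distance(fresh, cached))
        cached = fresh
        outputs.append(fresh)
    return computed, outputs


# A "video" whose features barely change is computed on few steps;
# one that changes at every step is computed on all of them.
static_steps, _ = run_with_cache(lambda t: [1.0, 1.0], 10)
dynamic_steps, _ = run_with_cache(lambda t: [float(t), float(t)], 10)
print(static_steps, dynamic_steps)
```

In this toy setting the schedule is decided at run time from the content itself, which is the point of the "not all videos are the same" observation; a real model would apply the same decision to the outputs of its Transformer blocks.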

The researchers further introduced a motion regularization (MoReg) scheme, which uses video-specific information within AdaCache to allocate computation according to motion content. Video sequences with high-frequency textures and a large amount of motion need more diffusion steps to reach reasonable quality, so MoReg steers more computation toward them and less toward simpler sequences.
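One way to picture motion regularization is as a scaling factor on the distance used for caching decisions. The sketch below is an assumption-laden illustration, not the paper's formulation: `motion_score`, `regularized_distance`, and the `strength` parameter are hypothetical, and motion is estimated crudely as the mean absolute difference between consecutive frames.

```python
from typing import Sequence

Frame = Sequence[float]


def motion_score(frames: Sequence[Frame]) -> float:
    """Mean absolute difference between consecutive frames (hypothetical estimate)."""
    total = 0.0
    count = 0
    for prev, curr in zip(frames, frames[1:]):
        for a, b in zip(prev, curr):
            total += abs(a - b)
            count += 1
    return total / count if count else 0.0


def regularized_distance(
    distance: float, frames: Sequence[Frame], strength: float = 1.0
) -> float:
    """Inflate the caching distance for high-motion content.

    A larger distance makes a threshold-based cache recompute sooner,
    so high-motion videos receive more computed steps.
    """
    return distance * (1.0 + strength * motion_score(frames))


still = [[0.0, 0.0], [0.0, 0.0], [0.0, 0.0]]
moving = [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0]]
print(regularized_distance(0.04, still))   # unchanged for a static clip
print(regularized_distance(0.04, moving))  # larger for a moving clip
```

Under this assumed scaling, a static clip keeps its original distance and can reuse cached results for longer, while a clip with motion sees its distance grow and is recomputed more often.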

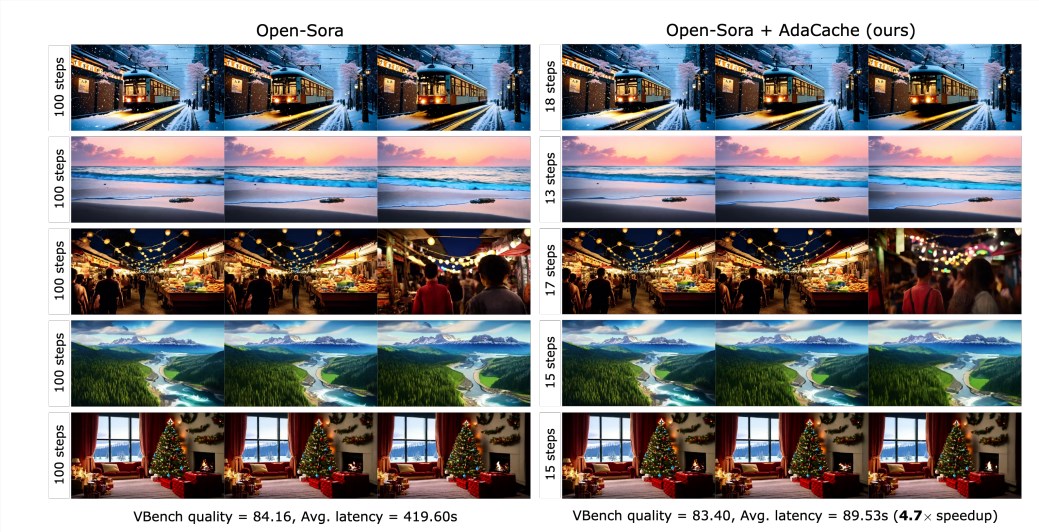

Experimental results show that AdaCache can significantly improve inference speed (for example, up to a 4.7x speedup on Open-Sora 720p, 2-second video generation) without sacrificing generation quality. AdaCache also generalizes well and can be applied to different video DiT models, such as Open-Sora, Open-Sora-Plan, and Latte. Compared with other training-free acceleration methods such as Δ-DiT, T-GATE, and PAB, it offers clear advantages in both speed and quality.

User studies show that participants prefer videos generated with AdaCache over those produced by other acceleration methods and consider their quality comparable to that of the baseline models. The study confirms the effectiveness of AdaCache and makes an important contribution to efficient video generation. Meta AI believes that AdaCache can be widely adopted and can help make high-fidelity long video generation more accessible.

Paper: https://arxiv.org/abs/2411.02397

Project home page: https://adacache-dit.github.io/

GitHub: https://github.com/AdaCache-DiT/AdaCache

All in all, AdaCache provides a novel and effective approach to efficient video generation, and its significant speedups and favorable user-study results make it promising for future applications. The editor of Downcodes believes that AdaCache will help advance high-fidelity long video generation.