Recently, the popularity of AI large language model (LLM) applications has driven fierce competition in the hardware field. AMD has launched its latest Strix Point APU series and says its Ryzen AI processors hold a significant advantage in LLM tasks, outperforming Intel's Lunar Lake series. The editor of Downcodes takes an in-depth look at the performance of the Strix Point APU series and the technology behind it.

AMD designed the Strix Point APU series for mobile platforms, with an emphasis on higher AI performance and lower latency. As demand for AI workloads keeps growing, competition among hardware vendors is intensifying, and AMD is positioning these processors as its answer to that demand.

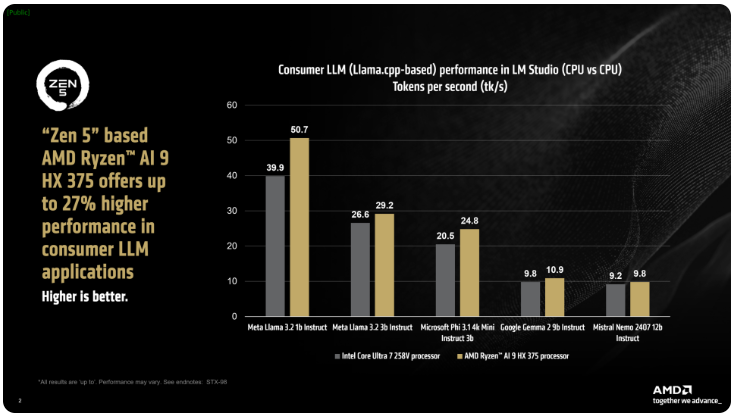

AMD said that the Ryzen AI 300 processors of the Strix Point series significantly increase the number of tokens processed per second in LLM tasks. Compared with Intel's Core Ultra 7 258V, the Ryzen AI 9 HX 375 delivers 27% higher performance. The Core Ultra 7 258V is not the fastest model in the Lunar Lake series, but its core and thread counts are close to those of higher-end Lunar Lake processors, so the comparison still indicates how competitive AMD's products are in this area.

LM Studio, the tool AMD used for these tests, is a consumer-oriented application built on the llama.cpp framework and designed to make large language models easier to use. llama.cpp is optimized for x86 CPUs, so a GPU is not required to run an LLM, although using one can further accelerate processing. In AMD's tests, the Ryzen AI 9 HX 375 achieved up to 3.5 times lower latency on the Meta Llama 3.2 1B Instruct model and processed 50.7 tokens per second, compared with only 39.9 tokens per second for the Core Ultra 7 258V.
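The 27% figure follows directly from the two throughput numbers. The short Python sketch below reproduces that arithmetic and also converts throughput into average time per generated token. The function names are illustrative only, and the per-token time is simply the inverse of throughput; it is not the same metric as the latency AMD quotes.

```python
def relative_speedup(new_tps: float, baseline_tps: float) -> float:
    """Return how much faster new_tps is than baseline_tps, in percent."""
    if baseline_tps <= 0:
        raise ValueError("baseline throughput must be positive")
    return (new_tps / baseline_tps - 1.0) * 100.0


def ms_per_token(tps: float) -> float:
    """Average time per generated token in milliseconds (inverse of throughput)."""
    if tps <= 0:
        raise ValueError("throughput must be positive")
    return 1000.0 / tps


# Throughput quoted in the article for Llama 3.2 1B Instruct (tokens per second)
ryzen_ai_9_hx_375 = 50.7
core_ultra_7_258v = 39.9

speedup = relative_speedup(ryzen_ai_9_hx_375, core_ultra_7_258v)
print(f"Ryzen AI 9 HX 375 is {speedup:.1f}% faster")  # Ryzen AI 9 HX 375 is 27.1% faster
print(f"{ms_per_token(ryzen_ai_9_hx_375):.1f} ms vs "
      f"{ms_per_token(core_ultra_7_258v):.1f} ms per token")  # 19.7 ms vs 25.1 ms per token
```

A ratio minus one is used rather than a difference of values so the result is expressed relative to the Intel baseline, which matches how the article phrases the "27% higher performance" claim.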

Beyond the CPU, Strix Point APUs include Radeon integrated graphics based on the RDNA 3.5 architecture, and LLM tasks can be offloaded to this iGPU through the Vulkan API to further improve performance. With Variable Graphics Memory (VGM), the Ryzen AI 300 processors can optimize how memory is allocated to the iGPU and improve energy efficiency, and AMD reports a performance improvement of up to 60% as a result.

In further comparison tests, AMD used identical settings in Intel's AI Playground application and found that the Ryzen AI 9 HX 375 was 87% faster than the Core Ultra 7 258V on Microsoft Phi 3.1 and 13% faster on the Mistral 7B Instruct 0.3 model. A comparison against the Core Ultra 9 288V, the flagship of the Lunar Lake series, would be more telling, however. For now, AMD is focusing on making large language models more accessible through LM Studio, with the aim of helping more non-technical users get started.

The launch of the AMD Strix Point APU series marks a further intensification of competition among AI processors and signals stronger hardware support for future AI applications. Its gains in performance and energy efficiency should give users a smoother, more capable AI experience. The editor of Downcodes will continue to follow the latest developments in this field and bring readers more reports.