In recent years, the training cost of large-scale language models has remained high, which has become an important factor restricting the development of AI. How to reduce training costs and improve efficiency has become the focus of the industry. The editor of Downcodes brings you an interpretation of the latest paper from researchers at Harvard University and Stanford University. This paper proposes an "accuracy-aware" scaling rule that effectively reduces training costs by adjusting model training accuracy, even in some cases. In this case, it can also improve model performance. Let’s take a closer look at this exciting research.

In the field of artificial intelligence, larger scale seems to mean greater capabilities. In pursuit of more powerful language models, major technology companies are frantically stacking model parameters and training data, only to find that costs are also rising. Isn’t there a cost-effective and efficient way to train language models?

Researchers from Harvard and Stanford universities recently published a paper in which they found that the accuracy of model training is like a hidden key that can unlock the "cost code" of language model training.

What is model accuracy? Simply put, it refers to the model parameters and the number of digits used in the calculation process. Traditional deep learning models usually use 32-bit floating point numbers (FP32) for training, but in recent years, with the development of hardware, lower precision number types are used, such as 16-bit floating point numbers (FP16) or 8-bit integers (INT8) Training is already possible.

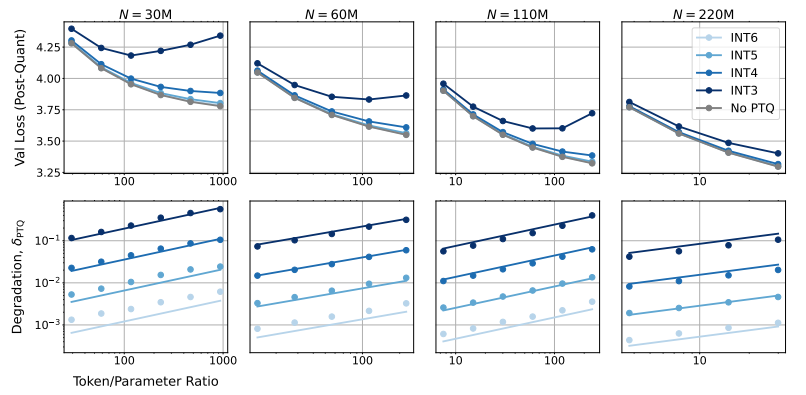

So, what impact will reducing model accuracy have on model performance? This is exactly the question this paper wants to explore. Through a large number of experiments, the researchers analyzed the cost and performance changes of model training and inference under different accuracy, and proposed a new set of "accuracy-aware" scaling rules.

They found that training with lower precision effectively reduces the "effective number of parameters" of the model, thereby reducing the amount of computation required for training. This means that, with the same computational budget, we can train larger-scale models, or at the same scale, using lower accuracy can save a lot of computational resources.

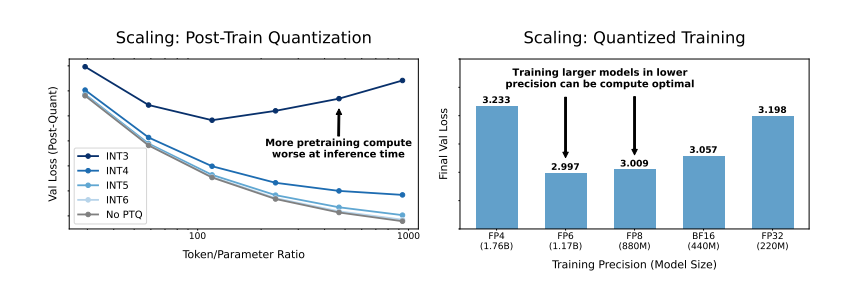

Even more surprisingly, the researchers also found that in some cases, training with lower precision can actually improve the performance of the model! For example, for those that require "post-training quantization" If the model uses lower precision during the training stage, the model will be more robust to the reduction in precision after quantization, thus showing better performance during the inference stage.

So, which precision should we choose to train the model? By analyzing their scaling rules, the researchers came to some interesting conclusions:

Traditional 16-bit precision training may not be optimal. Their research suggests that 7-8 digits of precision may be a more cost-effective option.

It is also unwise to blindly pursue ultra-low precision (such as 4-digit) training. Because at extremely low accuracy, the number of effective parameters of the model will drop sharply. In order to maintain performance, we need to significantly increase the model size, which will in turn lead to higher computational costs.

The optimal training accuracy may vary for models of different sizes. For those models that require a lot of "overtraining", such as the Llama-3 and Gemma-2 series, training with higher accuracy may be more cost-effective.

This research provides a new perspective on understanding and optimizing language model training. It tells us that the choice of accuracy is not static, but needs to be weighed based on the specific model size, training data volume, and application scenarios.

Of course, there are some limitations to this study. For example, the model they used is relatively small-scale, and the experimental results may not be directly generalizable to larger-scale models. In addition, they only focused on the loss function of the model and did not evaluate the performance of the model on downstream tasks.

Nonetheless, this research still has important implications. It reveals the complex relationship between model accuracy, model performance and training cost, and provides us with valuable insights for designing and training more powerful and economical language models in the future.

Paper: https://arxiv.org/pdf/2411.04330

All in all, this research provides new ideas and methods for reducing the cost of large-scale language model training, and provides important reference value for future AI development. The editor of Downcodes looks forward to more progress in model accuracy research and contributes to building more cost-effective AI models.