In recent years, large language models (LLMs) have shown impressive capabilities in many fields, yet their mathematical reasoning remains surprisingly weak. The editor of Downcodes will walk you through a recent study that reveals the surprising "secret" behind how LLMs perform arithmetic, and that analyzes the limitations of this approach and the directions for future improvement. The research not only deepens our understanding of the internal mechanisms of LLMs, but also provides a valuable reference for improving their mathematical capabilities.

Recently, large language models (LLMs) have performed well on a wide range of tasks, including writing poetry, writing code, and chatting. They seem almost omnipotent! But would you believe it? These "genius" AIs are actually "math rookies"! They often stumble on simple arithmetic problems, which is surprising.

A recent study has revealed the "weird" secret behind LLMs' arithmetic reasoning: they rely neither on robust algorithms nor on memorization, but on a strategy called a "heuristic hodgepodge" (the paper's own term is a "bag of heuristics")! This is like a student who never seriously studies mathematical formulas and theorems, but relies on a handful of "clever tricks" and "rules of thumb" to get the answer.

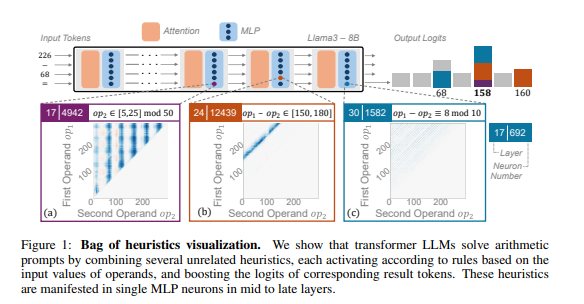

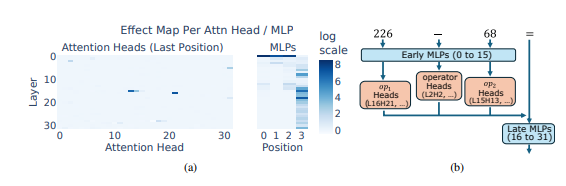

The researchers used arithmetic reasoning as a representative task and conducted an in-depth analysis of several LLMs, including Llama3, Pythia, and GPT-J. They found that the part of the model responsible for arithmetic (called the "circuit") is composed of many individual neurons, each of which acts like a "miniature calculator" that only recognizes a specific numerical pattern and outputs the corresponding answer. For example, one neuron might be responsible for identifying "numbers whose ones digit is 8," while another might be responsible for identifying "subtraction operations whose results are between 150 and 180."
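To make the "miniature calculator" picture concrete, here is a minimal Python sketch of the two example patterns mentioned above. Everything in it is a hypothetical illustration: `HeuristicNeuron`, `NEURONS`, and `active_neurons` are invented names, and a real neuron responds to internal activations rather than evaluating the expression directly as this toy does.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class HeuristicNeuron:
    """Hypothetical stand-in for one pattern-detecting neuron."""
    name: str
    fires: Callable[[int, str, int], bool]  # (operand_a, operator, operand_b) -> active?


# Two toy detectors mirroring the examples in the text (assumed, not taken from the paper's code).
NEURONS = [
    HeuristicNeuron(
        "operand whose ones digit is 8",
        lambda a, op, b: a % 10 == 8 or b % 10 == 8,
    ),
    HeuristicNeuron(
        "subtraction whose result is between 150 and 180",
        lambda a, op, b: op == "-" and 150 <= a - b <= 180,
    ),
]


def active_neurons(a: int, op: str, b: int) -> List[str]:
    """Return the names of the toy neurons that fire for the prompt 'a op b'."""
    return [n.name for n in NEURONS if n.fires(a, op, b)]


print(active_neurons(208, "-", 40))   # both patterns match (208 ends in 8, 208 - 40 = 168)
print(active_neurons(300, "-", 140))  # only the subtraction-range pattern matches (result 160)
print(active_neurons(12, "+", 5))     # neither pattern matches
```

Each detector knows about only one narrow pattern; none of them performs subtraction or addition in general.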

These "mini calculators" are like a jumbled box of tools. Instead of applying them according to a specific algorithm, the LLM combines whichever "tools" happen to match the pattern of the input numbers, in no particular order, to arrive at an answer. It's like a chef who has no fixed recipe, but mixes whatever ingredients are on hand and ends up with an improvised "dark cuisine" dish.
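One way to picture this kind of combination is a simple vote: each heuristic independently boosts the candidate answers that fit its pattern, and the answer with the most support wins. The sketch below is a toy under stated assumptions, not the mechanism measured in the paper. The three heuristics, the candidate range, and the `combine` function are all hypothetical, and it only handles addition of two numbers below 100.

```python
import random
from collections import Counter

CANDIDATES = range(200)  # assumed answer space for adding two numbers below 100


def ones_digit_votes(a, b):
    """Vote for every candidate whose ones digit matches the operands' ones digits."""
    digit = (a % 10 + b % 10) % 10
    return [c for c in CANDIDATES if c % 10 == digit]


def tens_range_votes(a, b):
    """Vote for a coarse 20-wide window derived from the tens digits only."""
    low = (a // 10 + b // 10) * 10
    return [c for c in CANDIDATES if low <= c < low + 20]


def carry_votes(a, b):
    """Vote for the upper or lower half of that window, depending on whether the ones digits carry."""
    low = (a // 10 + b // 10) * 10
    if a % 10 + b % 10 >= 10:
        return [c for c in CANDIDATES if low + 10 <= c < low + 20]
    return [c for c in CANDIDATES if low <= c < low + 10]


HEURISTICS = [ones_digit_votes, tens_range_votes, carry_votes]


def combine(a, b, heuristics):
    """Sum the votes of all heuristics and return the best-supported candidate."""
    votes = Counter()
    for heuristic in heuristics:
        votes.update(heuristic(a, b))
    return votes.most_common(1)[0][0]


shuffled = HEURISTICS[:]
random.shuffle(shuffled)  # the order of the heuristics does not matter
print(combine(47, 38, HEURISTICS), combine(47, 38, shuffled))  # 85 85
```

No single heuristic knows the answer, and no step depends on another step's output; the result emerges only from the overlap of their votes.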

Even more surprisingly, this "heuristic hodgepodge" strategy appears early in LLM training and is gradually refined as training progresses. This means that LLMs rely on this "patchwork" approach to reasoning from the outset, rather than developing it at a later stage.

So, what problems does this "weird" way of doing arithmetic cause? The researchers found that the "heuristic hodgepodge" strategy generalizes poorly and is prone to errors. This is because an LLM has only a limited number of "clever tricks", and the tricks themselves may be flawed, so the model can fail to give the correct answer when it encounters a new numerical pattern. It is just like a chef who can only make "tomato scrambled eggs": if he is suddenly asked to make "fish-flavored shredded pork", he will be flustered and at a loss.
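The generalization problem can be illustrated with a toy bag of heuristics whose coverage is deliberately incomplete. In this hypothetical sketch, the range heuristics only exist for sums below 100 (an assumed "seen" region); `BAG`, `predict`, and `accuracy` are illustrative names, and the numbers it prints describe this toy only, not any real model.

```python
from typing import Callable, List

CANDIDATES = range(200)  # toy answer space
Heuristic = Callable[[int, int, int], bool]  # (a, b, candidate) -> does it vote for candidate?


def ones_digit(a: int, b: int, c: int) -> bool:
    """Vote for candidates whose ones digit matches the sum's ones digit."""
    return c % 10 == (a + b) % 10


def make_range_heuristic(lo: int, hi: int) -> Heuristic:
    """Build a heuristic that only fires when the sum falls in [lo, hi)."""
    def fires(a: int, b: int, c: int) -> bool:
        return lo <= a + b < hi and lo <= c < hi
    return fires


# Assumption: range heuristics were only ever "learned" for sums below 100.
BAG: List[Heuristic] = [ones_digit] + [
    make_range_heuristic(lo, lo + 10) for lo in range(0, 100, 10)
]


def predict(a: int, b: int) -> int:
    """Pick the candidate with the most votes (ties go to the smallest candidate)."""
    scores = {c: sum(h(a, b, c) for h in BAG) for c in CANDIDATES}
    return max(scores, key=scores.get)


def accuracy(pairs) -> float:
    return sum(predict(a, b) == a + b for a, b in pairs) / len(pairs)


operands = range(0, 100, 7)
covered = [(a, b) for a in operands for b in operands if a + b < 100]
uncovered = [(a, b) for a in operands for b in operands if a + b >= 100]
print(accuracy(covered), accuracy(uncovered))  # 1.0 0.0
```

Inside the covered region the overlap of heuristics pins down the right answer; outside it, only the ones-digit trick fires, and the toy confidently returns a wrong number.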

This study reveals the limitations of LLMs' arithmetic reasoning and also points out a direction for improving their mathematical ability. The researchers believe that relying solely on existing training methods and model architectures may not be enough to improve arithmetic reasoning, and that new methods need to be explored to help LLMs learn more robust and general algorithms, so that they can truly become "math masters".

Paper address: https://arxiv.org/pdf/2410.21272

All in all, this study provides an in-depth analysis of the "weird" strategies LLMs use in mathematical reasoning, offers a new perspective for understanding their limitations, and points out directions for future research. I believe that, as the technology continues to develop, the mathematical capabilities of LLMs will improve significantly.