The editor of Downcodes has learned that DeepSeek, an AI company backed by the Chinese quantitative hedge fund High-Flyer, recently released its latest reasoning-focused large language model, R1-Lite-Preview. The model is currently available to the public only through the DeepSeek Chat web chatbot platform, and its performance has attracted widespread attention, approaching or even exceeding that of the o1-preview model recently released by OpenAI. DeepSeek is known for its contributions to the open source AI ecosystem, and this launch continues its commitment to accessibility and transparency.

DeepSeek, an AI company backed by the Chinese quantitative hedge fund High-Flyer, recently released its latest reasoning-focused large language model, R1-Lite-Preview. The model is currently available to the public only through DeepSeek Chat, a web chatbot platform.

DeepSeek is known for its innovative contributions to the open source AI ecosystem, and this new release aims to bring high-level reasoning capabilities to the public while maintaining a commitment to accessibility and transparency. Although R1-Lite-Preview is currently available only through the chat application, it has attracted widespread attention with performance close to, or even exceeding, that of OpenAI's recently released o1-preview model.

R1-Lite-Preview uses chain-of-thought reasoning and can display the intermediate steps it works through when responding to a user query.

Although some of these chains of thought may appear nonsensical or even wrong to humans, R1-Lite-Preview's answers are, overall, very accurate. It can even solve "trap" questions that have tripped up established, powerful AI models such as GPT-4o and the Claude series, for example "How many R's are there in the word 'strawberry'?" and "Which is bigger, 9.11 or 9.9?"
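For reference, the expected answers to these two trap questions can be checked in a few lines of Python. This is only an illustrative sketch of the ground truth, not anything published by DeepSeek; the helper names `count_letter` and `larger_decimal` are hypothetical and introduced here purely for the example.

```python
from decimal import Decimal


def count_letter(word: str, letter: str) -> int:
    """Count case-insensitive occurrences of a single letter in a word."""
    return word.lower().count(letter.lower())


def larger_decimal(a: str, b: str) -> str:
    """Return whichever decimal string represents the larger number.

    Decimal compares the values numerically, so "9.9" (9.90) beats "9.11"
    even though "11" looks bigger than "9" when read as a version number.
    """
    return a if Decimal(a) > Decimal(b) else b


if __name__ == "__main__":
    print(count_letter("strawberry", "r"))   # prints 3
    print(larger_decimal("9.11", "9.9"))     # prints 9.9
```

Both questions are trivial for ordinary code, which is part of why they are popular as quick sanity checks for language models.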

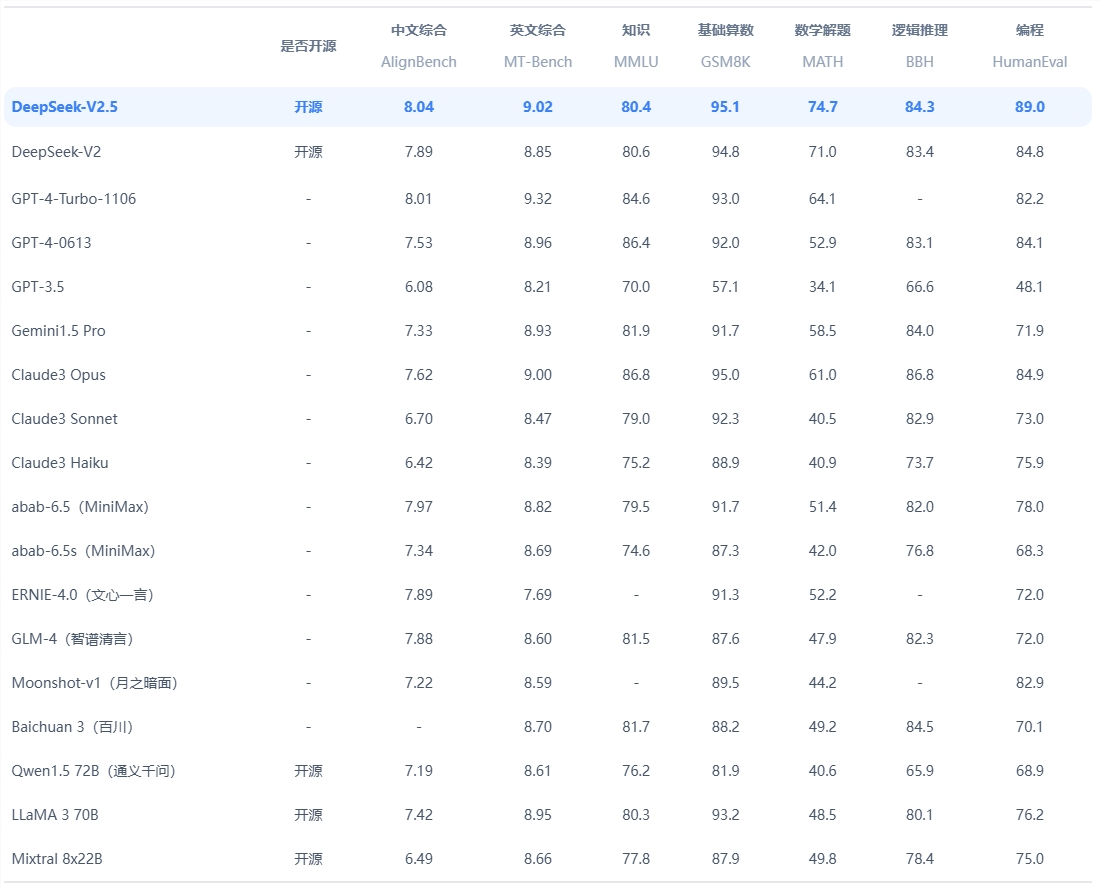

According to DeepSeek, the model excels at tasks that require logical reasoning, mathematical thinking, and real-time problem solving, and it outperforms OpenAI's o1-preview on established benchmarks such as AIME (American Invitational Mathematics Examination) and MATH.

DeepSeek also released scaling data for the model, showing a steady improvement in accuracy when the model is given more time, or more "thought tokens," to solve a problem. DeepSeek's chart highlights that as the depth of thinking increases, the model's score on benchmarks such as AIME improves.

The current release of R1-Lite-Preview performs well on key benchmarks and can handle a range of tasks from complex mathematics to logic scenarios, with scores on benchmarks such as GPQA and Codeforces comparable to those of top reasoning models. The model's transparent reasoning process lets users observe its logical steps in real time, which improves the system's accountability and trustworthiness.

It is worth noting that DeepSeek has not released the complete code for independent third-party analysis or benchmarking, nor has it provided an API for independent testing. The company has also not yet published any blog posts or technical documents describing the training or architecture of R1-Lite-Preview, which leaves the model's origins in doubt.

R1-Lite-Preview is currently available for free through DeepSeek Chat (chat.deepseek.com), although its advanced "Deep Think" mode is limited to 50 messages per day, which is enough for users to try out its capabilities. DeepSeek plans to release open source versions of the R1 series models, along with related APIs, to further support the development of the open source AI community.

DeepSeek continues to drive innovation in the open source AI space, and the release of R1-Lite-Preview extends its lineup in both reasoning and scalability. As businesses and researchers explore applications for reasoning-intensive AI, DeepSeek's commitment to openness should help its models become an important resource for development and innovation.

Official website: https://www.deepseek.com/

All in all, R1-Lite-Preview demonstrates DeepSeek's strength in the field of large language models, and its open source plans are worth looking forward to. However, the lack of published code and technical documentation leaves its technical details something of a mystery. The editor of Downcodes will continue to follow DeepSeek's progress.