Downcodes editor reports: A research team at the University of Washington has released SAMURAI, a new visual tracking model built on SAM2. It targets the challenges of visual tracking in complex scenes, especially fast-moving and self-occluding objects. By introducing temporal motion cues and a motion-aware memory selection mechanism, SAMURAI improves object motion prediction and mask selection accuracy, achieving robust and accurate tracking without retraining or fine-tuning. Its strong zero-shot performance means it performs well without being trained on a specific dataset.

SAM2 performs well on object segmentation tasks but has limitations in visual tracking. For example, in crowded scenes, its fixed-window memory does not take the quality of the stored memories into account, which can cause errors to propagate through the rest of the video sequence.

To address this problem, the research team proposed SAMURAI, which improves object motion prediction and mask selection accuracy by introducing temporal motion cues and a motion-aware memory selection mechanism. As a result, SAMURAI achieves robust and accurate tracking without retraining or fine-tuning.
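To make the two ideas concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not SAMURAI's actual implementation: the constant-velocity predictor, the weighting parameter `alpha`, the `min_quality` threshold, and every function and field name below are hypothetical.

```python
from dataclasses import dataclass

# Axis-aligned box as (x1, y1, x2, y2).
Box = tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def predict_box(prev: Box, curr: Box) -> Box:
    """Temporal motion cue (assumed): constant-velocity extrapolation of the box."""
    return tuple(c + (c - p) for p, c in zip(prev, curr))


@dataclass
class Candidate:
    box: Box
    mask_score: float  # the segmenter's own confidence in this mask (hypothetical field)


def select_candidate(candidates: list[Candidate], predicted: Box, alpha: float = 0.5) -> Candidate:
    """Pick the mask that best balances motion agreement and mask confidence."""
    return max(
        candidates,
        key=lambda c: alpha * iou(c.box, predicted) + (1 - alpha) * c.mask_score,
    )


def select_memory(history: list[tuple[int, float]], window: int = 3, min_quality: float = 0.5) -> list[int]:
    """Quality-aware memory: the most recent `window` frames whose quality passes the threshold.

    `history` holds (frame_index, quality) pairs in temporal order. A fixed-window
    memory would instead take the last `window` frames regardless of quality.
    """
    good = [idx for idx, quality in reversed(history) if quality >= min_quality]
    return sorted(good[:window])
```

In this sketch, a target that moved from `(0, 0, 10, 10)` to `(5, 0, 15, 10)` is predicted at `(10, 0, 20, 10)`, so a candidate at that location is preferred over a distractor left at the old location even if the distractor has the higher mask score. Likewise, `select_memory` skips low-quality frames rather than keeping them just because they are recent.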

SAMURAI operates in real time and demonstrates strong zero-shot performance, meaning the model performs well without being trained on a specific dataset.

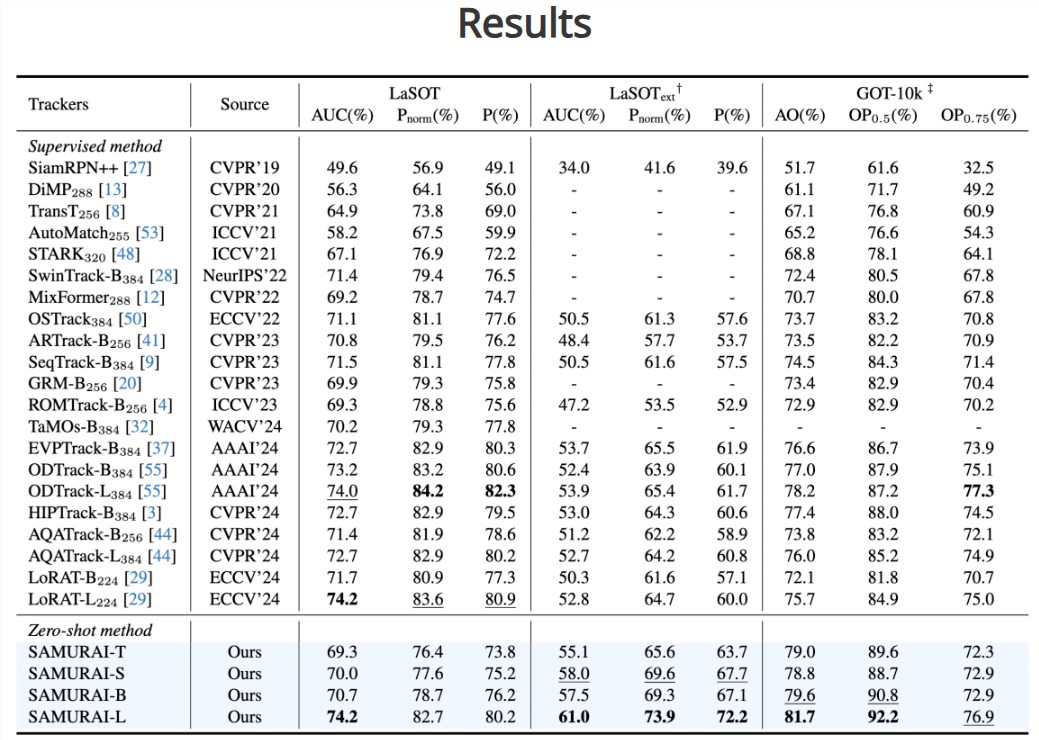

In evaluation, the research team found that SAMURAI improves success rate and precision on multiple benchmark datasets. On LaSOT-ext it achieves a 7.1% AUC gain, and on GOT-10k a 3.5% AO gain. In addition, it is competitive with fully supervised methods on LaSOT, demonstrating its robustness in complex tracking scenarios and its broad application potential.
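For readers unfamiliar with the two metrics: AO (average overlap) is the mean IoU between predicted and ground-truth boxes over all frames, and AUC here refers to the area under the success plot, which shows the fraction of frames whose IoU exceeds each overlap threshold. The sketch below computes both from a list of per-frame IoU values. It is a simplified illustration; the function names and the choice of 21 evenly spaced thresholds are assumptions, and the official benchmark toolkits may differ in details.

```python
def average_overlap(ious: list[float]) -> float:
    """AO: mean per-frame IoU between prediction and ground truth."""
    return sum(ious) / len(ious)


def success_auc(ious: list[float], num_thresholds: int = 21) -> float:
    """Area under the success plot, approximated as the mean success rate.

    The success rate at threshold t is the fraction of frames with IoU > t.
    Thresholds are spaced evenly over [0, 1] (an assumption of this sketch).
    """
    thresholds = [i / (num_thresholds - 1) for i in range(num_thresholds)]
    rates = [sum(v > t for v in ious) / len(ious) for t in thresholds]
    return sum(rates) / len(rates)
```

Both functions return a value between 0 and 1, so a reported gain such as 3.5% AO corresponds to a difference of 0.035 on this scale.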

The research team said that SAMURAI's success lays the foundation for applying visual tracking in more complex and dynamic environments. They hope the work will advance the field of visual tracking, meet the needs of real-time applications, and give smart devices stronger visual recognition capabilities.

Project page: https://yangchris11.github.io/samurai/

SAMURAI marks a step forward for visual tracking, with notable efficiency and accuracy in complex scenes. The model is expected to find use in fields such as autonomous driving and robot vision, furthering the development of artificial intelligence technology. The editor of Downcodes looks forward to seeing what SAMURAI achieves next!