The editor of Downcodes learned that the Allen Institute for Artificial Intelligence (Ai2) recently released Molmo, a new family of open source multimodal AI models. Its performance is impressive: in multiple third-party benchmark tests it even surpasses OpenAI's GPT-4o, Anthropic's Claude 3.5 Sonnet and Google's Gemini 1.5.

Molmo not only accepts user-uploaded images for analysis, but also uses “1,000 times less data than competitors” for training, thanks to its unique training techniques.

This release demonstrates Ai2’s commitment to open research, providing high-performance models with open weights and data for use by the wider community and enterprises. The Molmo family includes four main models: Molmo-72B, Molmo-7B-D, Molmo-7B-O and MolmoE-1B. Molmo-72B is the flagship model, containing 72 billion parameters, and its performance is particularly outstanding.

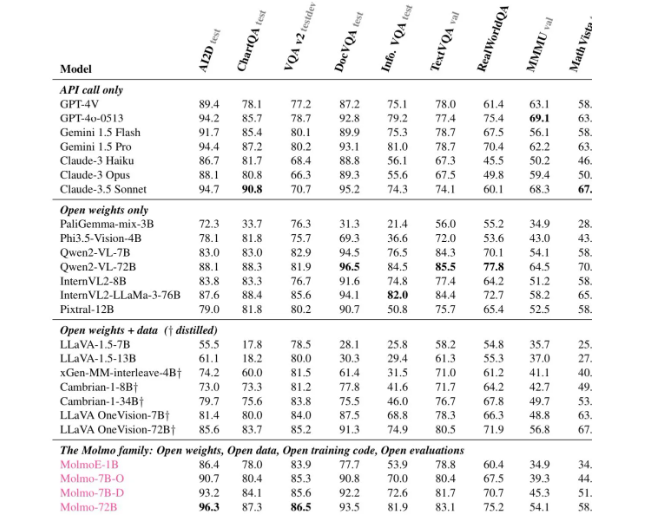

According to various evaluations, Molmo-72B received top scores on 11 important benchmarks and ranked second only to GPT-4o in terms of user preference. Ai2 also launched OLMoE, a model that uses a "mixture of smaller models" (mixture-of-experts) approach to improve cost-effectiveness.

Molmo's architecture has been designed for efficiency and strong performance. All models use OpenAI's ViT-L/14 336px CLIP model as the visual encoder, which processes multi-scale images into visual tokens. The language model part is a decoder-only Transformer, with options that differ in capacity and openness.
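To make the encoder name concrete: "ViT-L/14 at 336px" means a Vision Transformer that cuts a 336×336 pixel input into 14×14 pixel patches, each of which becomes one visual token. The short Python sketch below only does that arithmetic. The `num_crops` parameter is a hypothetical illustration of multi-scale input, and the article does not describe how Molmo actually crops or pools its tokens, so treat the second function as an assumption rather than Molmo's real pipeline.

```python
def vit_patch_tokens(image_size: int = 336, patch_size: int = 14) -> int:
    """Number of patch tokens a ViT produces for one square image crop."""
    if image_size % patch_size != 0:
        raise ValueError("image_size must be divisible by patch_size")
    patches_per_side = image_size // patch_size  # 336 // 14 = 24
    return patches_per_side ** 2                 # 24 * 24 = 576


def total_patch_tokens(num_crops: int) -> int:
    """Patch tokens across several crops, before any pooling.

    num_crops is a hypothetical parameter used only for illustration.
    """
    return num_crops * vit_patch_tokens()


if __name__ == "__main__":
    print(vit_patch_tokens())     # 576 patch tokens for a single 336px crop
    print(total_patch_tokens(5))  # 2880 patch tokens for five crops
```

The count excludes the extra class token that CLIP's encoder also produces, since only the patch tokens carry per-region image content.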

In terms of training, Molmo undergoes two stages: first, multimodal pre-training, and second, supervised fine-tuning. Unlike many modern models, Molmo does not rely on reinforcement learning from human feedback (RLHF), but instead updates model parameters through a carefully tuned training process.

Molmo performed well in multiple benchmarks, especially in complex tasks such as document reading and visual reasoning, demonstrating its strong capabilities. Ai2 has released these models and data sets on Hugging Face, and will launch more models and extended technical reports in the coming months to provide more resources for researchers.

If you want to learn about Molmo's capabilities, public demonstrations are now available through Molmo's official website (https://molmo.allenai.org/).

Highlights:

Ai2's Molmo, an open source multimodal AI model family, surpasses the industry’s top products.

Molmo-72B performs well in multiple benchmarks and ranks second only to GPT-4o in user preference.

It is highly open, and models and data sets can be used freely by researchers.

All in all, the emergence of Molmo marks a major advancement in the field of multi-modal AI, and its open source nature also provides valuable resources for researchers around the world. The editor of Downcodes hopes that Molmo will be more widely used and developed in the future and promote the continuous progress of AI technology.