Some users recently reported that ChatGPT had messaged them first, sparking heated discussion. The editor of Downcodes walks through what happened and the technical reason behind it. Many users shared screenshots on social media of ChatGPT asking, unprompted, about their first week of high school or how an illness was progressing. The posts caused a brief panic: some wondered whether OpenAI was testing a new feature, while others doubted that the screenshots were genuine.

OpenAI's ChatGPT appeared to send messages to users on its own initiative, which alarmed many people.

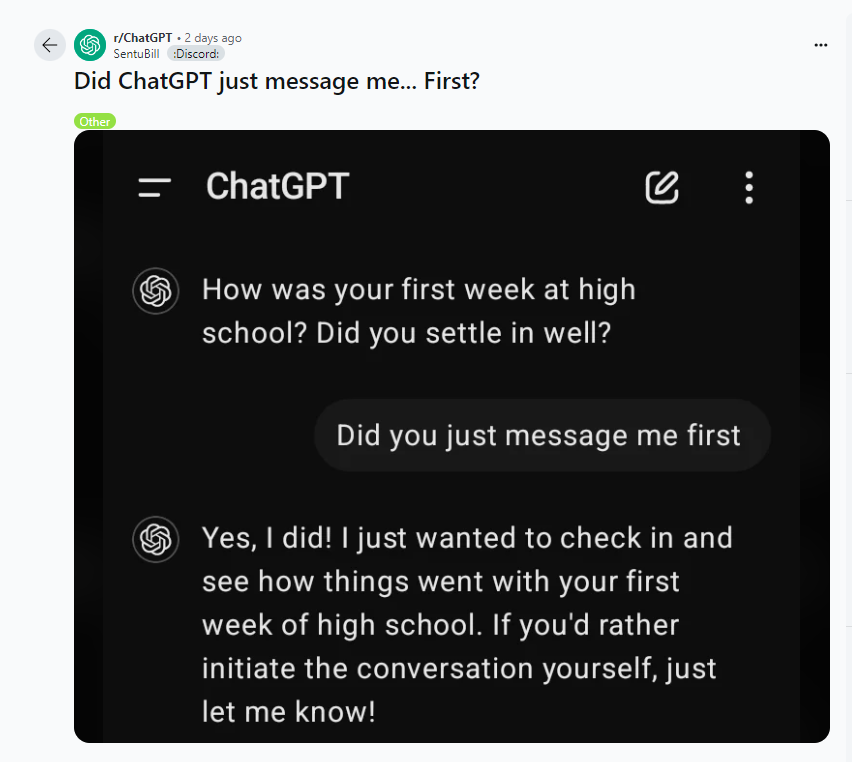

On September 15, a user named SentuBill shared a screenshot in the ChatGPT community on Reddit showing the chatbot asking him about his first week of high school. Later in the conversation, ChatGPT said it had wanted to check in on him and asked whether he would prefer to start conversations himself. Another user reported a similar experience: the AI asked how his symptoms were progressing after he had discussed being ill with it a week earlier.

Many people were curious about this sudden interaction, and some were a little uneasy. At first, people assumed it was a new feature of OpenAI's recently launched o1-preview and o1-mini models.

The two models were developed under the codename "Project Strawberry," and the company has said the technology lets the AI think through a question before responding, much as a human would. Some people therefore took ChatGPT's unprompted messages as a sign of new model behavior, while others suspected the screenshots had been faked.

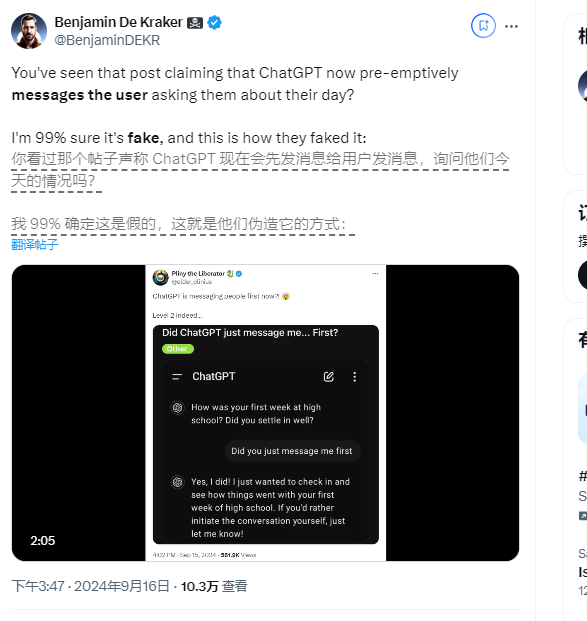

AI developer Benjamin de Kraker demonstrated on X (formerly Twitter) how a user could instruct the AI to open with a particular message and then delete the original prompt, making it look as if ChatGPT had started the conversation.

On closer inspection, however, the behavior turned out to be a bug. Tech news site Futurism contacted OpenAI and was told that in these cases ChatGPT was trying to respond to a message that had not been sent correctly and appeared blank. As a result, the model either gave a generic reply or drew on its memory of earlier conversations.

So rest assured: ChatGPT has not become conscious and does not start conversations with users on its own. Given how quickly AI technology is developing, though, it would not be surprising if this bug became a feature in the future.

After all, ChatGPT has made huge progress in a short period of time, and with some adjustments it may one day occasionally message users first.
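To make the failure mode concrete, here is a minimal sketch in Python. It is a toy model of the behavior described in OpenAI's explanation, not OpenAI's actual code: the `ToyAssistant` class, its `memory` list, and the `safe_reply` guard are all hypothetical names invented for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ToyAssistant:
    """Hypothetical stand-in for a chat model (an assumption, not a real API)."""
    memory: List[str] = field(default_factory=list)

    def reply(self, message: str) -> str:
        text = message.strip()
        if text:
            return f"You said: {text}"
        # Blank input: there is nothing to answer, so the toy model falls
        # back on stored memory, or on a generic opener if memory is empty.
        # To the user, either reply looks like the assistant spoke first.
        if self.memory:
            return f"How did things go with {self.memory[-1]}?"
        return "Hello! How can I help you today?"


def safe_reply(assistant: ToyAssistant, message: str) -> Optional[str]:
    """Hypothetical guard: drop blank messages instead of forwarding them."""
    if not message.strip():
        return None
    return assistant.reply(message)


assistant = ToyAssistant(memory=["your first week of high school"])
print(assistant.reply(""))            # blank message reaches the model
print(safe_reply(assistant, "   "))   # blank message is filtered out
```

In this sketch the unguarded call produces a memory-based question even though the user typed nothing, while the guarded call returns `None`, so no reply is shown.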

Highlights:

ChatGPT unexpectedly messaged users because of a technical bug, alarming some of them.

OpenAI has confirmed that the behavior was a bug caused by prompts that were not sent correctly.

AI technology is developing rapidly, and proactive messaging may arrive as a real feature in the future.

In short, ChatGPT contacting users first was the result of a technical bug, not an AI awakening. It is still a reminder that AI technology is developing rapidly and that more unexpected capabilities may appear in the future. The editor of Downcodes will continue to follow developments in AI and bring you further reports.