The editor of Downcodes learned that Apple researchers have launched a new benchmark test called GSM-Symbolic for the mathematical reasoning capabilities of large language models (LLM). This test is based on GSM8K and is designed to more comprehensively evaluate LLM's reasoning capabilities, rather than relying solely on its probabilistic pattern matching. Although GSM8K is popular, it has problems such as data pollution and performance fluctuations. GSM-Symbolic overcomes these shortcomings by generating diversified mathematical problems from symbolic templates, providing a guarantee for more accurate evaluation.

Recently, Apple researchers conducted an in-depth study of the mathematical reasoning capabilities of large language models (LLM) and launched a new benchmark called GSM-Symbolic.

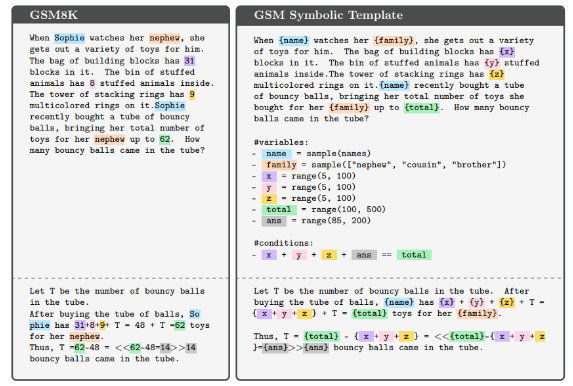

This new benchmark is developed on the basis of GSM8K, which is mainly used to evaluate basic mathematics ability. Although the performance of many LLMs has improved on GSM8K, the scientific community still has questions about the reasoning capabilities of these models, believing that existing evaluation metrics may not fully reflect their true capabilities. Research has found that LLMs often rely on probabilistic pattern matching rather than true logical reasoning, making them very sensitive to small changes in input.

In the new study, researchers used symbolic templates to generate diverse mathematical problems that provide more reliable assessments. Experimental results show that the performance of LLM decreases significantly when the numerical value or complexity of the problem increases. Furthermore, even adding information that is superficially relevant to the problem but not actually irrelevant can cause model performance to degrade by up to 65%. These results once again confirm that LLM relies more on pattern matching rather than formal logical reasoning when reasoning.

The GSM8K dataset contains more than 8,000 grade-level math problems, and its popularity raises several risks, such as data contamination and performance fluctuations caused by small problem changes. In order to deal with these challenges, the emergence of GSM-Symbolic allows the diversity of problems to be effectively controlled. This benchmark evaluates more than 20 open and closed models using 5,000 samples from 100 templates, demonstrating the insights and limitations of LLM's mathematical reasoning capabilities.

Preliminary experiments show that the performance of different models on GSM-Symbolic varies significantly, and the overall accuracy is lower than the reported performance on GSM8K. The study further explored the impact of changing variable names and values on LLM, and the results showed that changes in values had a greater impact on performance. In addition, the complexity of the problem also directly affects the accuracy, with complex problems leading to significant performance degradation. These results suggest that the model may rely more on pattern matching than on true reasoning abilities when dealing with mathematical problems.

This study highlights the limitations of current GSM8K evaluations and introduces a new benchmark, GSM-Symbolic, designed to evaluate the mathematical reasoning capabilities of LLMs. Overall, the findings indicate that LLMs still need to further improve their logical reasoning abilities when dealing with complex problems.

Paper: https://arxiv.org/abs/2410.05229

All in all, the GSM-Symbolic benchmark proposed by Apple provides a new perspective for evaluating the mathematical reasoning capabilities of large language models. It also reveals that LLM still has room for improvement in logical reasoning, which points the way for future model improvements. We look forward to more research in the future to further promote the development of LLM’s reasoning capabilities.