Meta's FAIR team has released Dualformer, a new Transformer model that mimics the dual-system account of human cognition (System 1 and System 2) and delivers significant gains in both reasoning ability and computational efficiency. Unlike conventional Transformer models, which emulate either System 1 or System 2 but not both, Dualformer can switch between fast and slow reasoning modes to match the complexity of the task at hand. This flexibility comes from its training method: the model is trained on randomized reasoning traces, in which different parts of each trace are randomly dropped to mimic the shortcuts people take when thinking.

Human thinking is commonly described in terms of two systems: System 1, which is fast and intuitive, and System 2, which is slower and more logical.

Conventional Transformer models typically emulate only one of these two systems. The result is a model that is either fast but weak at reasoning, or strong at reasoning but slow and computationally expensive.

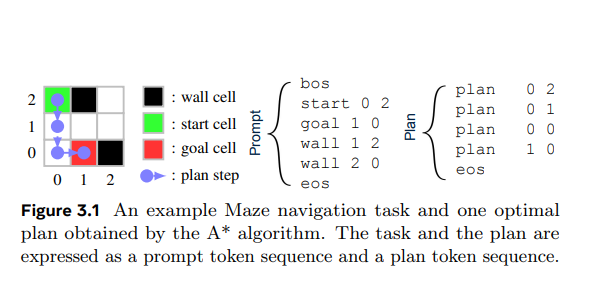

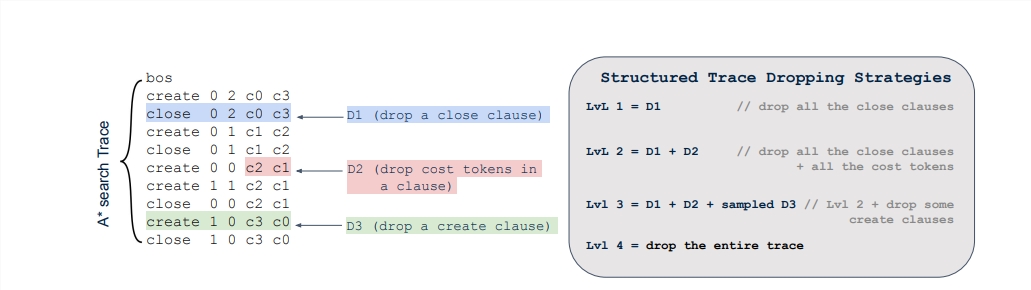

What sets Dualformer apart is how it is trained. The researchers trained the model on randomized reasoning traces, randomly dropping different parts of each trace during training, much as people skip steps and take mental shortcuts. This training strategy lets Dualformer switch between the following modes at inference time:

Fast mode: Dualformer outputs only the final solution, which makes it extremely fast.

Slow mode: Dualformer outputs a complete reasoning trace followed by the final solution, giving it stronger reasoning ability.

Automatic mode: Dualformer decides for itself which mode to use, based on the complexity of the task.
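The training strategy and the three modes can be illustrated with a minimal Python sketch. It assumes each training example consists of a prompt, a list of reasoning steps, and a final solution. The special tokens `<trace>` and `<plan>`, the helper names `randomized_sequence` and `inference_prefix`, and the dropping probabilities are all hypothetical choices for illustration; the paper defines its own dropping strategies, and this sketch does not reproduce them exactly.

```python
import random
from dataclasses import dataclass

# Hypothetical special tokens marking where the trace and the final plan begin.
TRACE, PLAN = "<trace>", "<plan>"

# Hypothetical dropping probabilities, chosen for illustration only.
P_DROP_ALL = 0.25   # drop the whole trace -> a solution-only ("fast") example
P_DROP_STEP = 0.3   # otherwise, drop each trace step independently with this probability


@dataclass
class Example:
    prompt: list[str]    # task description, e.g. a tokenized maze
    trace: list[str]     # intermediate reasoning steps, e.g. search steps
    solution: list[str]  # final answer, e.g. the path


def randomized_sequence(ex: Example, rng: random.Random) -> list[str]:
    """Build one training sequence with a randomly shortened reasoning trace."""
    if rng.random() < P_DROP_ALL:
        kept = []
    else:
        # Surviving steps keep their original order.
        kept = [step for step in ex.trace if rng.random() >= P_DROP_STEP]
    # A sequence with an empty trace looks exactly like a fast-mode output.
    body = [TRACE, *kept] if kept else []
    return ex.prompt + body + [PLAN] + ex.solution


def inference_prefix(prompt: list[str], mode: str) -> list[str]:
    """Force a mode by fixing the first generated token (auto mode fixes nothing)."""
    if mode == "fast":
        return prompt + [PLAN]   # skip straight to the final solution
    if mode == "slow":
        return prompt + [TRACE]  # make the model write out its reasoning first
    if mode == "auto":
        return list(prompt)      # the model chooses whether to emit a trace
    raise ValueError(f"unknown mode: {mode}")


rng = random.Random(0)
ex = Example(prompt=["maze"], trace=[f"s{i}" for i in range(10)], solution=["path"])
for _ in range(3):
    print(randomized_sequence(ex, rng))
print(inference_prefix(ex.prompt, "fast"))
```

Because solution-only sequences and partially traced sequences appear in the same training set, a single model can learn both behaviors; fixing the first generated token at inference time then selects between them, while leaving it unfixed lets the model choose.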

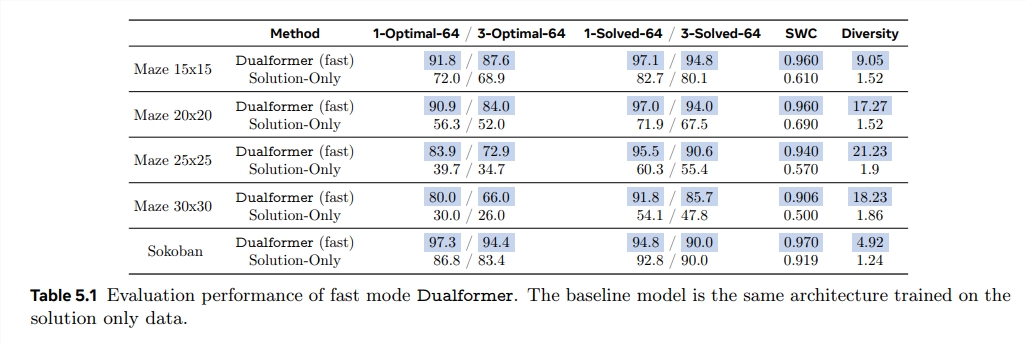

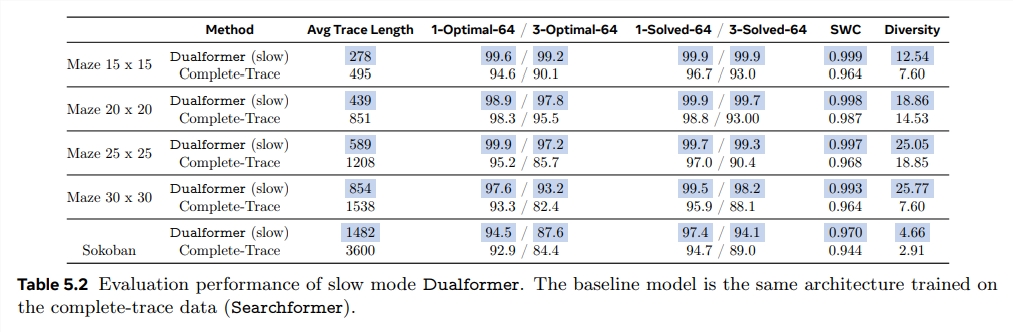

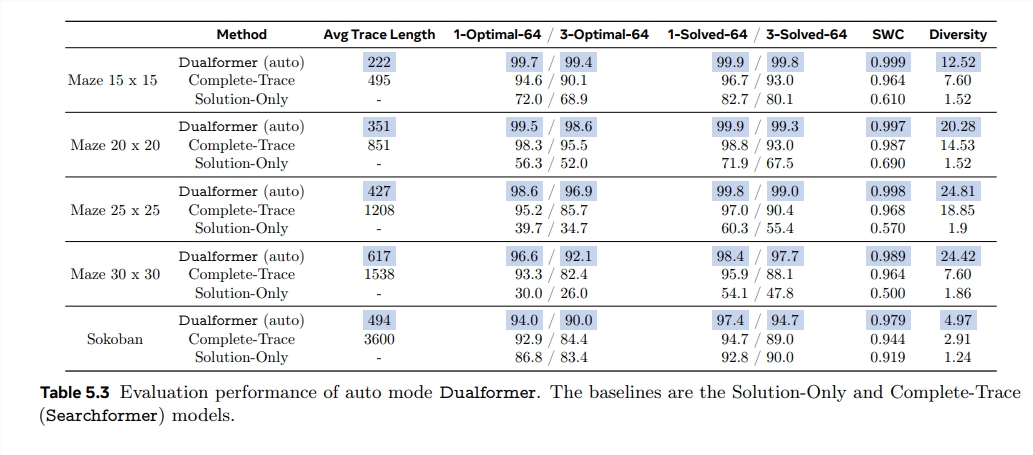

Experimental results show that Dualformer performs well on tasks such as maze navigation and mathematical problem solving. In slow mode, Dualformer solves 30x30 maze navigation tasks with a 97.6% success rate, outperforming Searchformer, a model trained only on complete reasoning traces, while using 45.5% fewer reasoning steps.
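Read literally, "45.5% fewer reasoning steps" is a relative reduction measured against Searchformer. A minimal worked form, assuming the comparison is made on average trace length $\bar{L}$ over the same set of mazes (the exact averaging used in the paper is an assumption here):

```latex
r = 1 - \frac{\bar{L}_{\text{Dualformer}}}{\bar{L}_{\text{Searchformer}}} = 0.455
\quad\Longrightarrow\quad
\bar{L}_{\text{Dualformer}} = (1 - 0.455)\,\bar{L}_{\text{Searchformer}} = 0.545\,\bar{L}_{\text{Searchformer}}
```

For instance, if Searchformer averaged 1,000 reasoning steps per maze (a hypothetical figure), Dualformer would average about 545.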

In fast mode, Dualformer still reaches an 80% success rate, far higher than the Solution-Only model, which is trained on final solutions alone. In automatic mode, Dualformer substantially reduces the number of reasoning steps while maintaining a high success rate.

The success of Dualformer suggests that drawing on theories of human cognition when designing AI models can effectively improve their performance. Its integration of fast and slow thinking offers new ideas for building more powerful and efficient AI systems.

Paper: https://arxiv.org/pdf/2410.09918

The Downcodes editor's conclusion: Dualformer marks a major step toward AI models whose design more closely follows human thinking patterns, and its advances in reasoning efficiency and accuracy point to new directions for the future development of AI technology.