The editor of Downcodes will take you through the latest breakthrough from Meta researchers! Using a Transformer model, they tackled a long-standing open problem in the field of dynamical systems: finding global Lyapunov functions. This research not only demonstrates what Transformer models can do in complex mathematical reasoning but, more importantly, introduces an innovative "reverse generation" method that overcomes the shortage of training data and opens new avenues for applying AI to scientific discovery. The results have been published on arXiv, and the link to the paper is provided below.

Large language models perform well on many tasks, but their reasoning abilities remain a subject of debate. Researchers at Meta recently published a paper showing how they used a Transformer model to tackle a long-standing problem in mathematics: discovering global Lyapunov functions for dynamical systems.

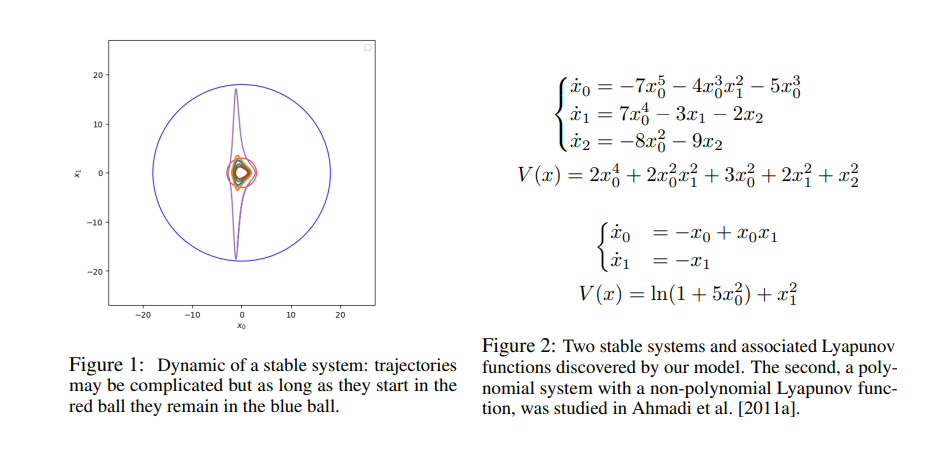

A Lyapunov function can be used to prove that a dynamical system is stable. For example, it could be used to study the long-term stability of the three-body problem, that is, the long-term trajectories of three celestial bodies moving under their mutual gravitational attraction. However, no general method for deriving Lyapunov functions is known, and Lyapunov functions have been found for only a small number of systems.
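For readers who want the precise statement, these are the standard textbook conditions for a global Lyapunov function. They are general background, not taken from the paper, and they apply to a system with an equilibrium at the origin:

$$
\begin{aligned}
&\dot{x} = f(x), \qquad f(0) = 0, \\
&V(0) = 0, \qquad V(x) > 0 \ \text{for } x \neq 0, \\
&V(x) \to \infty \ \text{as } \|x\| \to \infty, \\
&\dot{V}(x) = \nabla V(x) \cdot f(x) \le 0 \ \text{for all } x.
\end{aligned}
$$

If these conditions hold, the origin is globally stable. If in addition $\dot{V}(x) < 0$ for every $x \neq 0$, all trajectories converge to the origin. For example, $\dot{x} = -x$ with $V(x) = x^2$ gives $\dot{V}(x) = -2x^2$.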

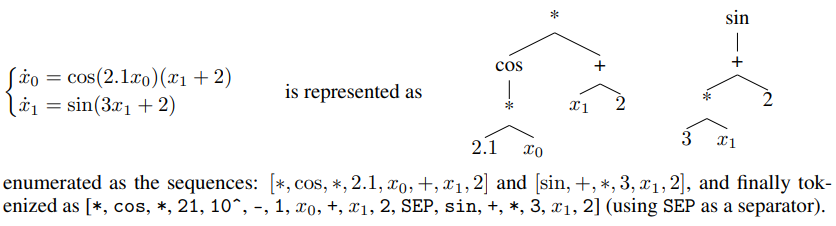

To address this problem, researchers at Meta trained a sequence-to-sequence Transformer model to predict a Lyapunov function for a given system. To build a large training set of stable dynamical systems paired with their Lyapunov functions, they used an innovative "reverse generation" approach.
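A sequence-to-sequence model reads and writes token sequences, so both the system and its Lyapunov function have to be serialized. The article does not say how this is done. The sketch below assumes the prefix (Polish) notation commonly used by symbolic-math Transformers. The tree format, the operator names (`add`, `mul`, `pow`) and the `<SEP>` token are all hypothetical.

```python
from typing import Union

# Hypothetical tree format: a leaf is a variable name or an integer; an internal
# node is a tuple (operator, left_child, right_child).
Expr = Union[str, int, tuple]


def to_prefix(expr: Expr) -> list[str]:
    """Flatten an expression tree into prefix (Polish) notation tokens."""
    if isinstance(expr, tuple):
        op, left, right = expr
        return [op] + to_prefix(left) + to_prefix(right)
    return [str(expr)]


# Input: the system dx0/dt = -x0 + x1, dx1/dt = -x1
system = [
    ("add", ("mul", -1, "x0"), "x1"),
    ("mul", -1, "x1"),
]
# Join the equations into one source sequence, separated by a hypothetical <SEP> token.
source_tokens: list[str] = []
for i, equation in enumerate(system):
    if i > 0:
        source_tokens.append("<SEP>")
    source_tokens.extend(to_prefix(equation))

# Target: the Lyapunov function V = x0^2 + x1^2
target_tokens = to_prefix(("add", ("pow", "x0", 2), ("pow", "x1", 2)))

print(source_tokens)  # ['add', 'mul', '-1', 'x0', 'x1', '<SEP>', 'mul', '-1', 'x1']
print(target_tokens)  # ['add', 'pow', 'x0', '2', 'pow', 'x1', '2']
```

Prefix notation needs no parentheses when every operator has a fixed number of arguments. That keeps the sequences short and unambiguous.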

The traditional "forward generation" approach starts from a randomly generated system and tries to compute its Lyapunov function. This is inefficient and works only for certain classes of simple systems. The "reverse generation" approach works the other way around: it first generates a random Lyapunov function and then constructs a stable system that this function certifies. This sidesteps the need to compute Lyapunov functions at all and yields more diverse training data.
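The idea of reverse generation can be illustrated with a deliberately simplified construction. This is not the procedure from the paper, which is more general. The toy version below only produces linear systems with quadratic Lyapunov functions.

1. Pick a positive-definite matrix `P`, so that `V(x) = x^T P x` is a valid candidate.
2. Pick a skew-symmetric `K`.
3. Define the system as `f(x) = (K - I) grad V(x)`. Because `g . (K g) = 0` for any vector `g`, this gives `dV/dt = -|grad V(x)|^2 < 0` for `x != 0`.

The function names are hypothetical.

```python
import random

Matrix = list[list[float]]


def matvec(m: Matrix, v: list[float]) -> list[float]:
    """Matrix-vector product m @ v."""
    return [sum(mij * vj for mij, vj in zip(row, v)) for row in m]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Matrix-matrix product a @ b."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def reverse_generate(n: int, seed: int = 0) -> tuple[Matrix, Matrix]:
    """Sample V first, then build a linear system dx/dt = M x that V certifies."""
    rng = random.Random(seed)
    # Step 1: choose the Lyapunov function first: V(x) = x^T P x with P = A^T A + I,
    # which is positive definite, so V(0) = 0, V(x) > 0 elsewhere, V radially unbounded.
    a = [[rng.uniform(-1, 1) for _ in range(n)] for _ in range(n)]
    p = [[sum(a[t][i] * a[t][j] for t in range(n)) + (1.0 if i == j else 0.0)
          for j in range(n)] for i in range(n)]
    # Step 2: random skew-symmetric K (K^T = -K), so g . (K g) = 0 for every vector g.
    k = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            r = rng.uniform(-1, 1)
            k[i][j], k[j][i] = r, -r
    # Step 3: build the system around V: f(x) = (K - I) grad V(x) = 2 (K - I) P x.
    k_minus_i = [[k[i][j] - (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    m = [[2.0 * entry for entry in row] for row in matmul(k_minus_i, p)]
    return p, m


def lyapunov_value(p: Matrix, x: list[float]) -> float:
    """V(x) = x^T P x."""
    return sum(xi * v for xi, v in zip(x, matvec(p, x)))


def lyapunov_derivative(p: Matrix, m: Matrix, x: list[float]) -> float:
    """dV/dt = grad V(x) . f(x), with grad V(x) = 2 P x and f(x) = M x."""
    grad = [2.0 * v for v in matvec(p, x)]
    return sum(g * fx for g, fx in zip(grad, matvec(m, x)))


p, m = reverse_generate(3, seed=42)
x = [0.5, -1.0, 2.0]
print(lyapunov_value(p, x) > 0, lyapunov_derivative(p, m, x) < 0)  # True True
```

Every generated pair is correct by construction, so no Lyapunov function ever has to be computed. That is the property that makes reverse generation cheap.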

The researchers found that a Transformer model trained on the reverse-generated dataset reached near-perfect accuracy (99%) on its in-distribution test set and still performed well (73%) on an out-of-distribution test set. More surprisingly, adding a small number (300) of simple forward-generated examples to the training set raised that out-of-distribution accuracy from 73% to 84%. This shows that even a handful of known solutions can substantially improve the model's ability to generalize.

To test whether the model could discover new Lyapunov functions, the researchers generated tens of thousands of random systems and asked the model to predict Lyapunov functions for them. On polynomial systems, the model found Lyapunov functions about ten times as often as state-of-the-art methods. It also found Lyapunov functions for non-polynomial systems, something no current algorithm can do.
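A predicted function only counts as a discovery once it has been checked. It must be positive away from the equilibrium, and it must not increase along trajectories. The article does not describe how the researchers verified predictions.

As a cheap, non-rigorous illustration, the sketch below screens a candidate by sampling random points and looking for a counterexample. Passing the screen is not a proof, and radial unboundedness cannot be tested this way. The function names and example systems are hypothetical.

```python
import random
from typing import Callable, Sequence

Vec = Sequence[float]


def screen_candidate(v: Callable[[Vec], float],
                     grad_v: Callable[[Vec], Vec],
                     f: Callable[[Vec], Vec],
                     dim: int,
                     n_samples: int = 10_000,
                     radius: float = 10.0,
                     seed: int = 0) -> bool:
    """Return False as soon as a sampled point violates V(x) > 0 or dV/dt <= 0.

    True only means no counterexample was found among the sampled points.
    """
    rng = random.Random(seed)
    for _ in range(n_samples):
        x = [rng.uniform(-radius, radius) for _ in range(dim)]
        if all(xi == 0.0 for xi in x):
            continue  # the conditions are only required away from the equilibrium
        if v(x) <= 0:
            return False
        v_dot = sum(g * fx for g, fx in zip(grad_v(x), f(x)))
        if v_dot > 0:
            return False
    return True


def stable_system(x: Vec) -> list[float]:
    """dx0/dt = -x0 + x1, dx1/dt = -x1."""
    return [-x[0] + x[1], -x[1]]


def unstable_system(x: Vec) -> list[float]:
    """dx0/dt = x0, dx1/dt = -x1 (trajectories escape along x0)."""
    return [x[0], -x[1]]


def v(x: Vec) -> float:
    """Candidate V = x0^2 + x1^2."""
    return x[0] ** 2 + x[1] ** 2


def grad_v(x: Vec) -> list[float]:
    return [2 * x[0], 2 * x[1]]


# For the stable system, dV/dt = -x0^2 - x1^2 - (x0 - x1)^2 <= 0 everywhere.
print(screen_candidate(v, grad_v, stable_system, dim=2))    # True
# For the unstable system, dV/dt = 2 x0^2 - 2 x1^2 is positive whenever |x0| > |x1|.
print(screen_candidate(v, grad_v, unstable_system, dim=2))  # False
```

A screen like this can cheaply discard wrong candidates before a more expensive, rigorous verification step.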

The researchers also compared the model with human mathematicians. In a test taken by 25 mathematics master's students, the model's accuracy was far higher than that of the students.

This research shows that Transformer models can be trained to solve complex mathematical reasoning problems. It also shows that "reverse generation" can produce training datasets that overcome the limitations of traditional methods. Going forward, the researchers plan to apply the approach to other mathematical problems and to further explore the potential of AI in scientific discovery.

Paper address: https://arxiv.org/pdf/2410.08304

All in all, Meta's research offers new ideas and methods for using AI to solve complex scientific problems, and it suggests that AI will play an increasingly important role in scientific research. The editor of Downcodes will keep following the latest developments in AI and bring readers more exciting reports!