With the rapid development of AIGC technology, image tampering has become increasingly widespread. Traditional image forgery detection and localization (IFDL) methods suffer from a "black box" nature and limited generalization. The Downcodes editor learned that a Peking University research team has proposed FakeShield, a multimodal framework designed to address these problems. FakeShield draws on the capabilities of large language models (LLMs), in particular multimodal large language models (M-LLMs). The team built a multimodal tamper description dataset (MMTD-Set) and fine-tuned models on it, so that FakeShield can detect and localize a range of tampering techniques while providing interpretable analysis results.

As AIGC-powered image editing tools grow more capable, image tampering becomes easier to perform and harder to detect. Although existing image forgery detection and localization (IFDL) methods are generally effective, they face two major challenges: first, their "black box" nature, which leaves the detection principle unclear; second, limited generalization, which makes it difficult to handle multiple tampering methods (such as Photoshop, DeepFake, and AIGC editing).

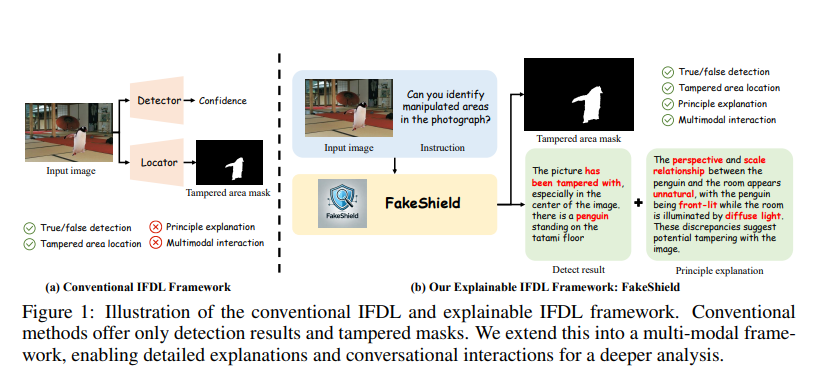

To solve these problems, the Peking University research team proposed the interpretable IFDL task and designed FakeShield, a multimodal framework that evaluates the authenticity of an image, generates a mask of the tampered region, and explains its judgment on the basis of pixel-level and image-level tampering clues.

Traditional IFDL methods output only an authenticity probability and a tampered region, without explaining how the decision was reached. Because their accuracy is limited, a human still has to make the final judgment. However, the information these methods provide is too limited to support that manual review, so users still have to re-analyze suspicious images themselves.

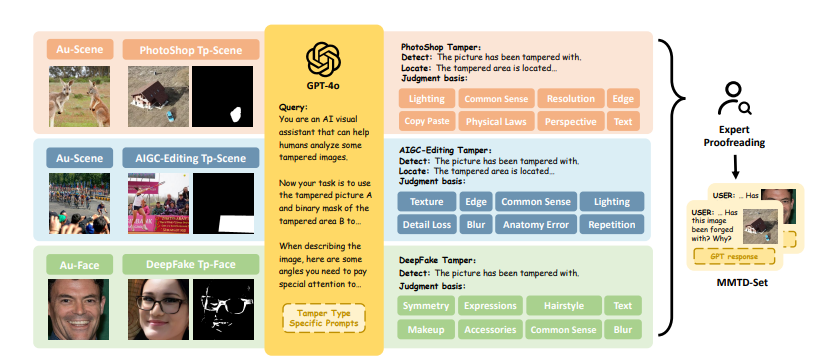

In addition, real-world tampering comes in many forms, including Photoshop edits (copy-move, splicing, and removal), AIGC editing, and DeepFake. Existing IFDL methods typically handle only one of these techniques and lack broad generalization. Users are therefore forced to identify the tampering type in advance and pick a matching detection method, which greatly reduces the practical usefulness of these models.

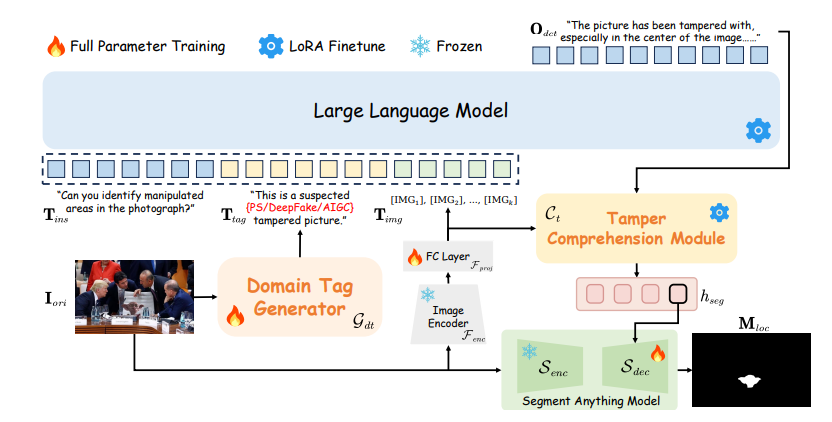

To address these two problems, the FakeShield framework leverages large language models (LLMs), in particular multimodal large language models (M-LLMs), which align visual and textual features and thereby give LLMs stronger visual understanding. Because LLMs are pre-trained on massive, diverse corpora of world knowledge, they have shown great potential in fields such as machine translation, code completion, and visual understanding.

At the core of FakeShield is the Multimodal Tamper Description Dataset (MMTD-Set). It uses GPT-4o to enhance existing IFDL datasets and consists of triples: a tampered image, a mask of the modified region, and a detailed description of the edited region. Using MMTD-Set, the research team fine-tuned an M-LLM and a visual segmentation model so that together they deliver a complete analysis, covering both tamper detection and the generation of accurate masks of the tampered area.
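To make the triple structure concrete, here is a minimal sketch of how one MMTD-Set-style record might be represented and turned into a prompt/response pair for fine-tuning. The class `TamperTriple`, the function `to_instruction_sample`, the field names, and the prompt wording are all hypothetical illustrations, not the dataset's actual schema or the authors' training format.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class TamperTriple:
    """Hypothetical MMTD-Set-style record: image, region mask, edit description."""
    image_path: str        # path to the image (illustrative, not the real schema)
    mask: List[List[int]]  # binary mask: 1 = tampered pixel, 0 = untouched pixel
    description: str       # detailed text description of the edited region

    def is_tampered(self) -> bool:
        # Treat the image as tampered if any pixel in the mask is set.
        return any(any(row) for row in self.mask)


def to_instruction_sample(t: TamperTriple) -> dict:
    """Turn one triple into a prompt/response pair (assumed fine-tuning format)."""
    verdict = "tampered" if t.is_tampered() else "authentic"
    return {
        "image": t.image_path,
        "prompt": "Is this image authentic or tampered? Explain your judgment.",
        "response": f"The image is {verdict}. {t.description}",
        "mask": t.mask,  # supervision target for the segmentation model
    }


if __name__ == "__main__":
    triple = TamperTriple(
        image_path="example.jpg",
        mask=[[0, 1], [0, 1]],
        description="The right half appears to be spliced in from another photo.",
    )
    print(to_instruction_sample(triple)["response"])
```

In this sketch the text response supervises the M-LLM while the mask supervises the segmentation model, mirroring the split described above.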

FakeShield also includes the Domain Tag-guided Explainable Forgery Detection Module (DTE-FDM) and the Multi-modal Forgery Localization Module (MFLM). The former provides explainable detection across different tampering types, while the latter performs forgery localization guided by the detailed text descriptions.
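The division of labor between the two modules can be sketched as a two-stage pipeline: a detection stage that returns a domain tag, a verdict, and an explanation, followed by a localization stage that uses that explanation to predict a mask. This is a minimal sketch under stated assumptions: `analyze`, `ForensicReport`, `toy_detect`, `toy_localize`, and the domain tag strings are hypothetical, the real modules are learned models rather than the toy stubs below, and skipping localization for authentic images is an assumption.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

Mask = List[List[int]]  # binary mask: 1 = predicted tampered pixel


@dataclass
class ForensicReport:
    domain_tag: str        # tampering domain, e.g. "photoshop", "deepfake", "aigc" (assumed labels)
    is_tampered: bool      # image-level authenticity judgment
    explanation: str       # textual reasoning behind the judgment
    mask: Optional[Mask]   # pixel-level localization, None if judged authentic


def analyze(
    image: object,
    detect: Callable[[object], Tuple[str, bool, str]],
    localize: Callable[[object, str], Mask],
) -> ForensicReport:
    # Stage 1 (DTE-FDM role): domain tag, verdict and explanation.
    tag, tampered, explanation = detect(image)
    # Stage 2 (MFLM role): the explanation text guides mask prediction.
    # Assumption: localization is skipped for images judged authentic.
    mask = localize(image, explanation) if tampered else None
    return ForensicReport(tag, tampered, explanation, mask)


# Toy stand-ins for the two learned modules (hypothetical, for illustration only).
def toy_detect(image: object) -> Tuple[str, bool, str]:
    return ("photoshop", True, "Lighting on the right object is inconsistent.")


def toy_localize(image: object, explanation: str) -> Mask:
    # Mark the right column when the explanation mentions "right".
    if "right" in explanation:
        return [[0, 1], [0, 1]]
    return [[0, 0], [0, 0]]


if __name__ == "__main__":
    report = analyze("photo.jpg", toy_detect, toy_localize)
    print(report.domain_tag, report.is_tampered, report.mask)
```

Passing the two stages in as callables keeps the sketch independent of any particular model, so the toy stubs could be swapped for real detectors without changing `analyze`.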

According to the team, extensive experiments show that FakeShield can effectively detect and localize a variety of tampering techniques, offering an interpretable solution that outperforms previous IFDL methods.

The team describes this work as the first attempt to apply M-LLMs to interpretable IFDL, marking significant progress in the field. Beyond detecting tampering, FakeShield provides comprehensive explanations and precise localization, and generalizes well across tampering types. These features make it a versatile tool for a wide range of real-world applications.

Looking ahead, this work could play an important role in several areas: helping to improve laws and regulations on the manipulation of digital content, providing guidance for the development of generative artificial intelligence, and fostering a clearer, more trustworthy online environment. FakeShield could also assist with evidence collection in legal proceedings and help correct misinformation in public discourse, ultimately improving the integrity and reliability of digital media.

Project homepage: https://zhipeixu.github.io/projects/FakeShield/

GitHub address: https://github.com/zhipeixu/FakeShield

Paper address: https://arxiv.org/pdf/2410.02761

The emergence of FakeShield marks a new breakthrough in image tampering detection. Its interpretability and strong generalization give it great potential in practical applications, and it will be worth watching whether it comes to play a greater role in maintaining network security and improving the credibility of digital media. The Downcodes editor believes this technology will have a positive impact on the authenticity and reliability of digital content.