The "black box" nature of large language models (LLMs) has long been a major concern in the field of artificial intelligence. Because model outputs are hard to interpret, their reliability and credibility are difficult to guarantee. To address this, OpenAI has introduced a technique called Prover-Verifier Games (PVG), which aims to make LLM outputs easier to interpret and verify. The editor of Downcodes explains the technique in detail below.

OpenAI recently introduced a technique called Prover-Verifier Games (PVG), which aims to address the "black box" problem of AI model outputs.

Imagine you have a super-intelligent assistant whose thinking is a black box: you have no idea how it reaches its conclusions. Does that make you a little uneasy? This is the problem many large language models (LLMs) face today. They are powerful, but the accuracy of what they generate is difficult to verify.

Paper URL: https://cdn.openai.com/prover-verifier-games-improve-legibility-of-llm-outputs/legibility.pdf

To solve this problem, OpenAI introduced PVG. Simply put, a small model (such as GPT-3) supervises the output of a large model (such as GPT-4). It works like a game: the Prover generates content, and the Verifier judges whether that content is correct. Sounds interesting, doesn't it?

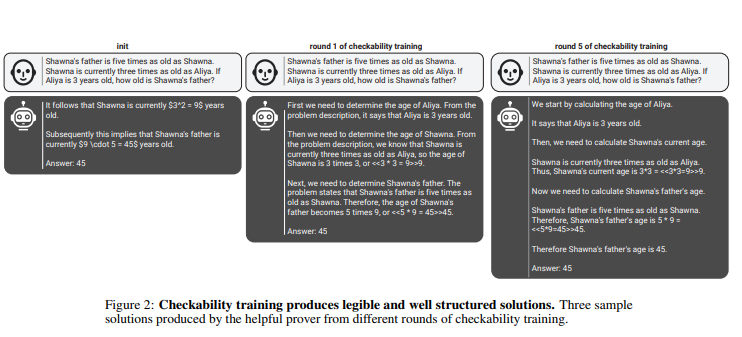

Under these rules, provers and verifiers keep improving through multiple rounds of iterative training. Verifiers use supervised learning to predict whether content is correct, while provers use reinforcement learning to optimize the content they generate. Even more interestingly, there are two types of provers: helpful provers and sneaky provers. Helpful provers strive to generate content that is correct and convincing, while sneaky provers try to generate content that is incorrect but equally convincing, thereby challenging the verifier's judgment.
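The division of labor described above can be made concrete with a toy example. The following is a minimal sketch under loud assumptions, not OpenAI's implementation: each solution is reduced to a single hypothetical numeric feature `x`, the verifier is a tiny logistic model trained with supervised learning, and `prover_reward` is an illustrative reward that pays a prover only when its output is both convincing to the verifier and consistent with its role (labelled "helpful" and "sneaky" here). All function names, parameters, and reward values are invented for illustration.

```python
import math

# Toy assumption: a "solution" is a single numeric feature x plus a
# ground-truth correctness label. Real PVG training uses language models.

def verifier_score(w, b, x):
    """Logistic verifier: estimated probability that a solution is correct."""
    return 1.0 / (1.0 + math.exp(-(w * x + b)))

def train_verifier(samples, w=0.0, b=0.0, lr=0.5, epochs=200):
    """Supervised learning: fit the verifier to (feature, is_correct) labels
    with plain stochastic gradient descent on the log loss."""
    for _ in range(epochs):
        for x, is_correct in samples:
            err = verifier_score(w, b, x) - (1.0 if is_correct else 0.0)
            w -= lr * err * x
            b -= lr * err
    return w, b

def prover_reward(role, is_correct, score):
    """Illustrative RL reward (an assumption, not the paper's formula):
    a helpful prover is paid for convincing correct answers, a sneaky
    prover for convincing incorrect ones; anything else is penalized."""
    aligned = is_correct if role == "helpful" else not is_correct
    return score if aligned else -1.0

# Labelled data: in this toy world, correct solutions have positive features.
labelled = [(1.0, True), (2.0, True), (-1.0, False), (-2.0, False)]
w, b = train_verifier(labelled)
```

In a full training loop, the two steps would alternate: the verifier is retrained on fresh prover outputs with their correctness labels, and each prover is then updated to maximize `prover_reward` against the new verifier. The sketch only shows the two objectives, which is enough to see why the sneaky role pushes the verifier to become harder to fool.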

OpenAI emphasizes that training an effective verifier requires a large amount of real, accurately labeled data to improve its ability to recognize errors. Otherwise, even with PVG, content that passes verification may still be incorrect or invalid.

Highlights:

- PVG technology solves the AI "black box" problem by verifying the output of large models with small models.
- The training framework is based on game theory and simulates the interaction between the prover and the verifier, improving the accuracy and controllability of the model output.
- A large amount of real data is required to train the verifier model to ensure that it has sufficient judgment and robustness.

All in all, OpenAI's PVG technology offers a new approach to the "black box" problem of artificial intelligence models, but its effectiveness still depends on high-quality training data. Its future development is worth watching, since it could help make AI models more reliable, explainable, and trustworthy. The editor of Downcodes looks forward to seeing more innovative technologies like this emerge.