Stability AI's video generation platform, Stable Video, has officially opened its public beta, and the editor of Downcodes will walk you through this exciting new tool! Stable Video is built on the Stable Video Diffusion (SVD) model and adds camera control, so you can create videos more flexibly. During the public beta, users get 150 free credits per day, enough to generate 15 videos. This article provides the Stable Video public beta address and a detailed usage tutorial to help you get started quickly and create great videos. Come and explore what AI video generation can do!

Stable Video, Stability AI's official SVD-based video generation platform, has now opened its public beta to all users. The platform adds camera control on top of the SVD model, giving users more flexibility over the generated video. During the public beta, each user receives 150 free credits per day, enough to generate 15 videos.
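Going by these figures, the cost of a single generation can be worked out directly. This assumes every video costs the same number of credits regardless of mode or settings, which the announcement implies but does not state outright:

$$
\frac{150\ \text{credits/day}}{15\ \text{videos/day}} = 10\ \text{credits per video}
$$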

This article shares the Stable Video public beta address along with a detailed usage tutorial.

The Stable Video platform supports two modes: text-to-video and image-to-video. Let's use image-to-video as an example to see how Stable Video works in detail.

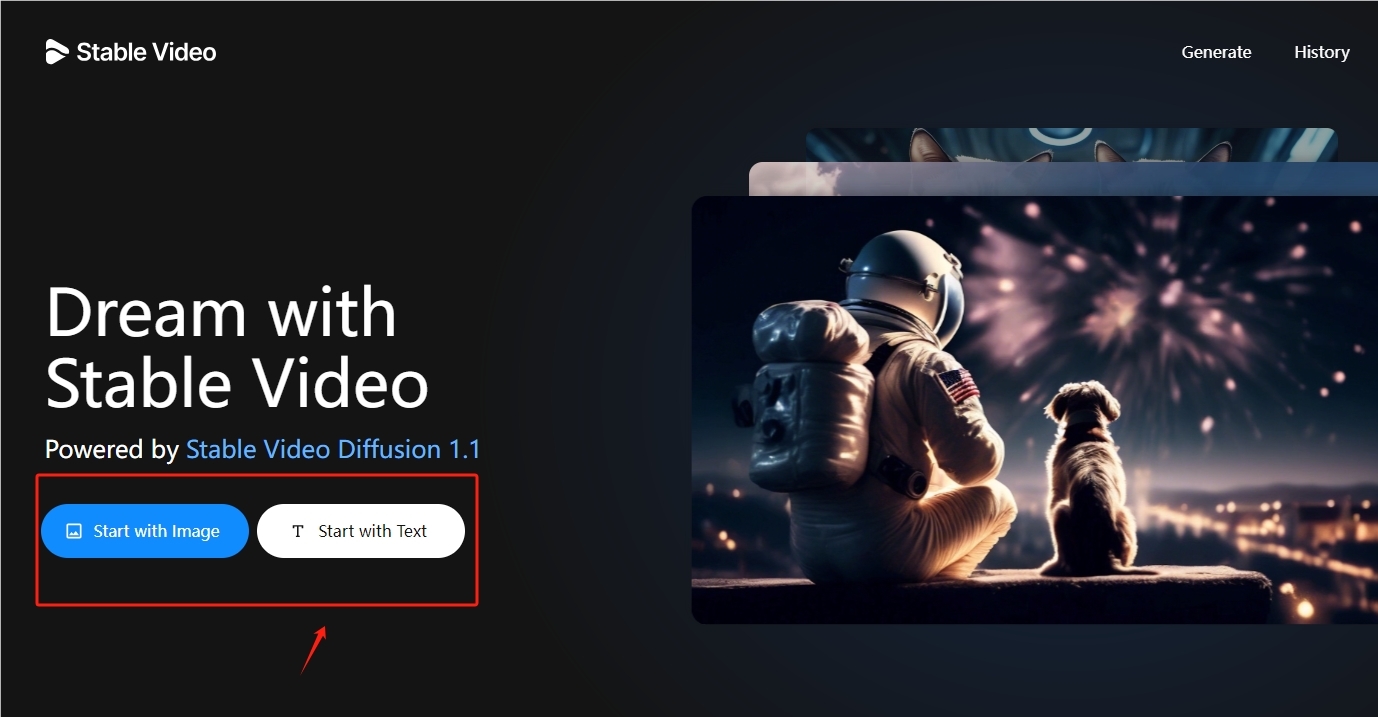

Step 1: Select the video generation mode

After opening the Stable Video website, select Start with Image to go directly to image-to-video mode. If you want to generate a video from text, select Start with text instead.

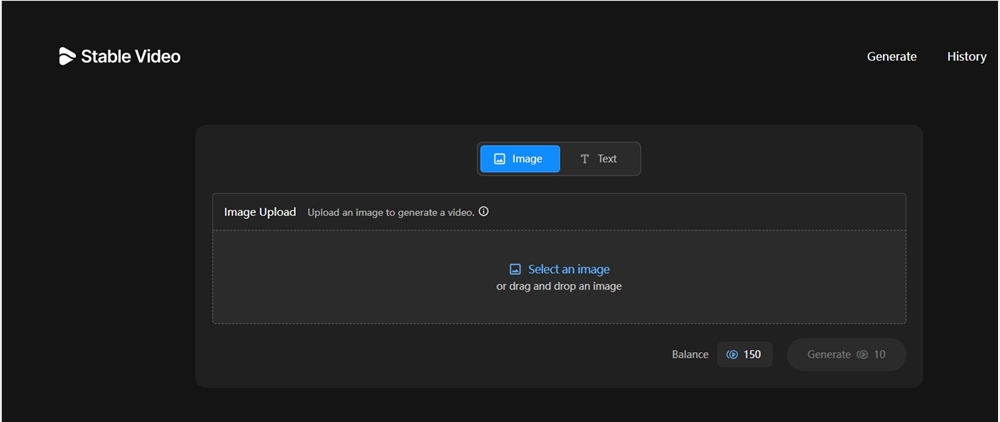

Step 2: Upload an image

In image mode, upload an image and then click Generate to turn it into a video.

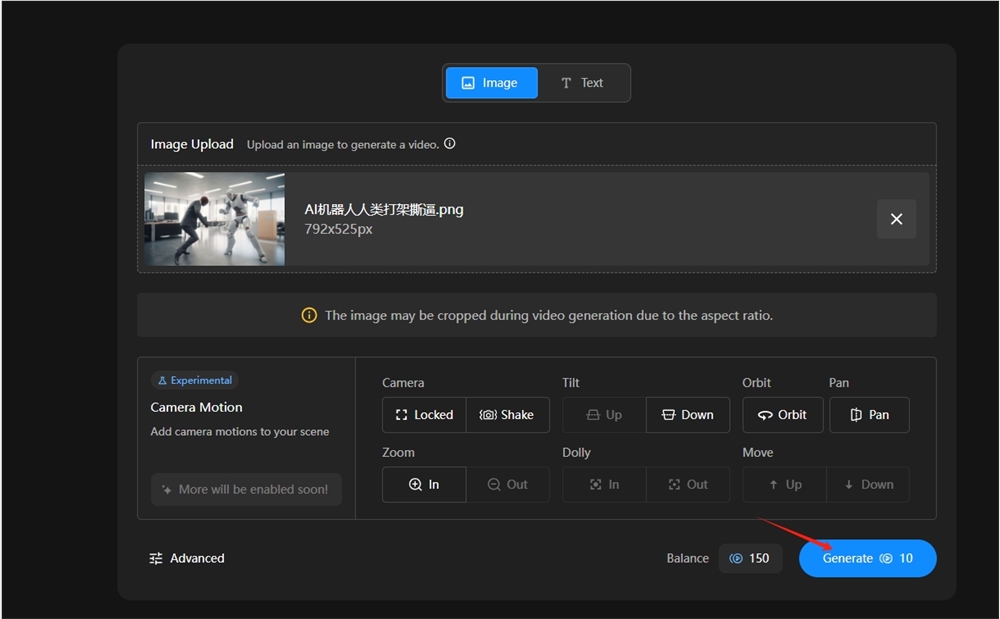

For example, in the example image, a photo of a fighting AI robot is uploaded, and the platform generates the video automatically without any adjustment to the camera motion parameters.

To get a result closer to what you want, adjust the camera control parameters, such as zoom, orbit, and tilt.

The Camera Motion parameters control how the camera moves in the generated video. They are roughly as follows:

- Camera: choose lock or pan mode.
- Tilt
- Orbit
- Pan
- Zoom: zoom in or zoom out.
- Dolly: a sliding camera movement.
- Move

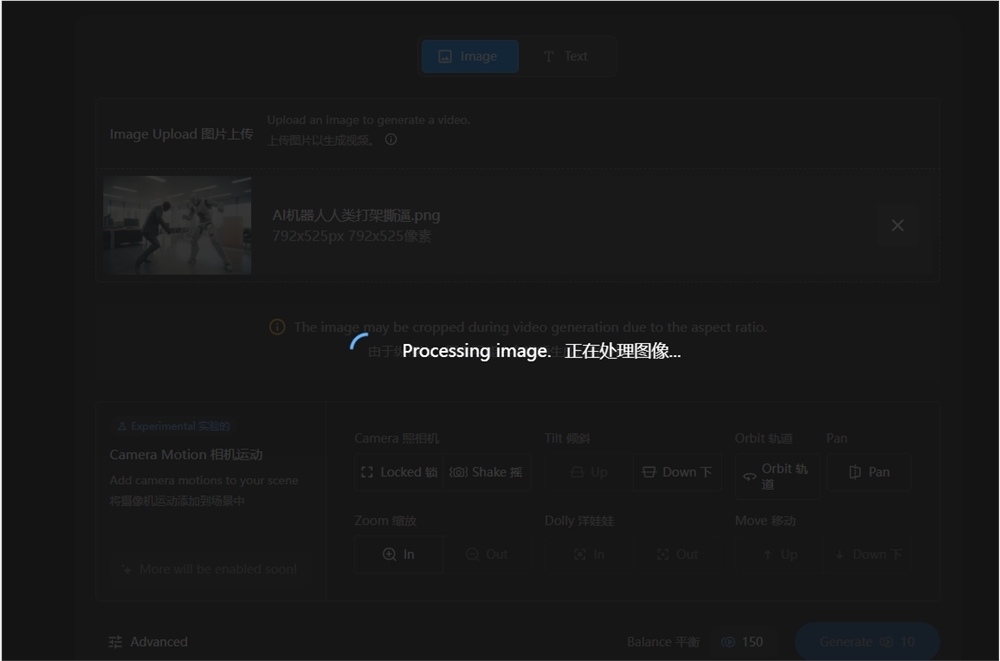

Step 3: Generate the video

With the image uploaded and the parameters set, click Generate and wait for the platform to produce the video.

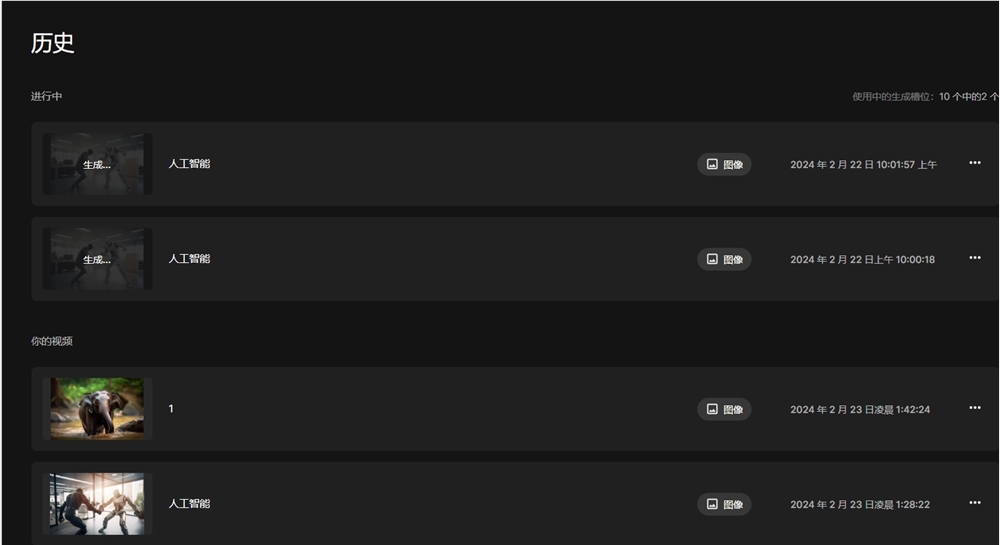

Step 4: View the generated video

The generated video appears in the history list of your personal account.

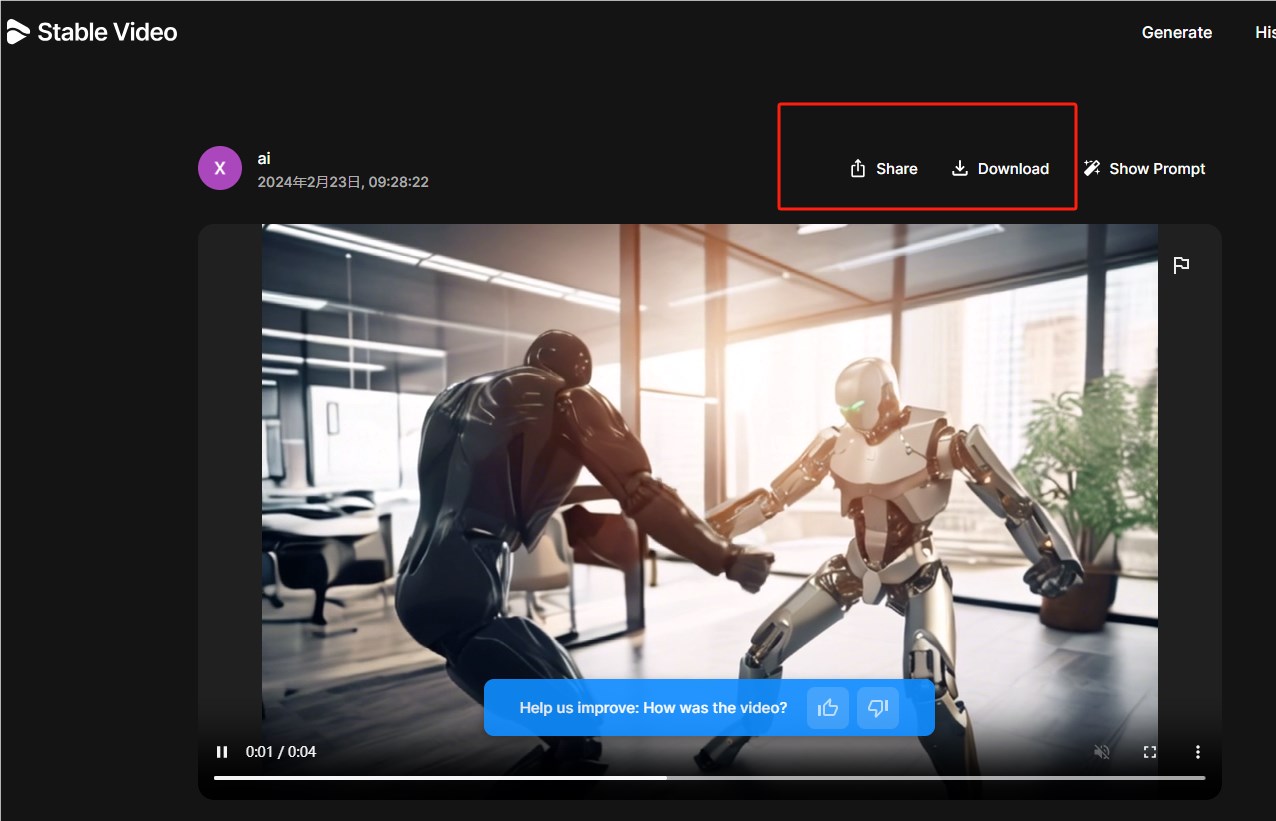

Step 5: Download and share the video

In the history list, click a video to download or share it.

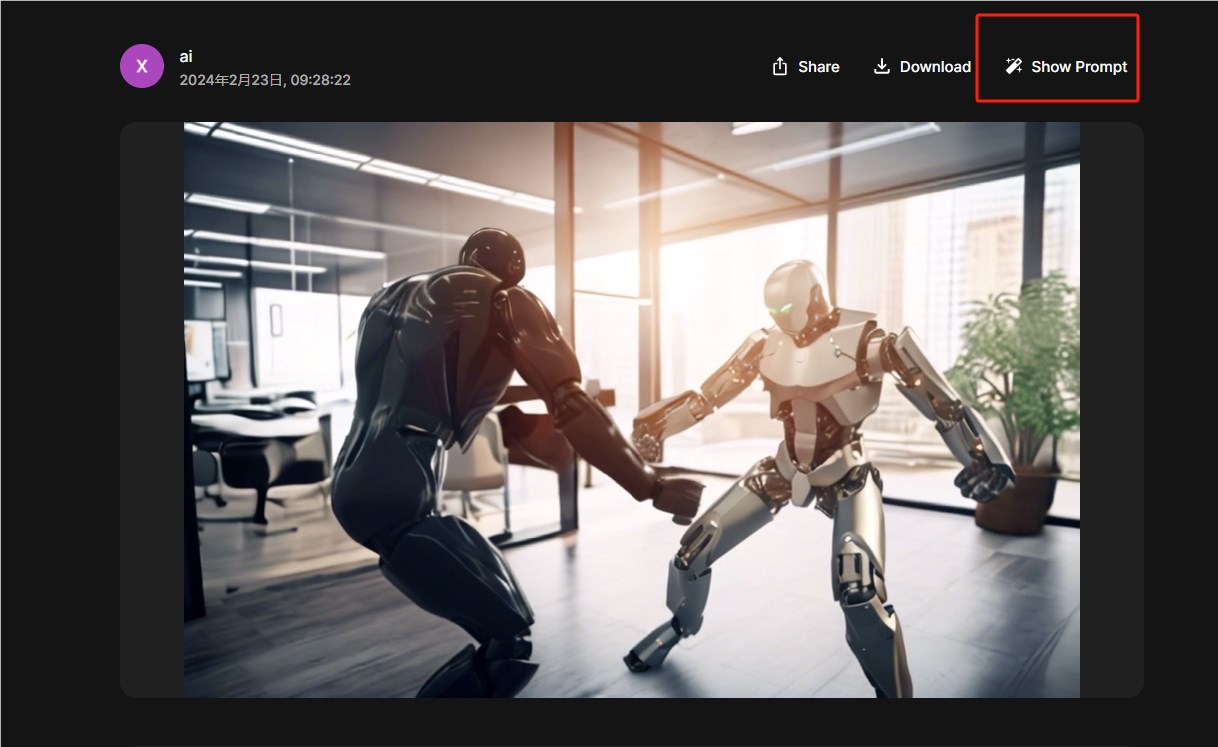

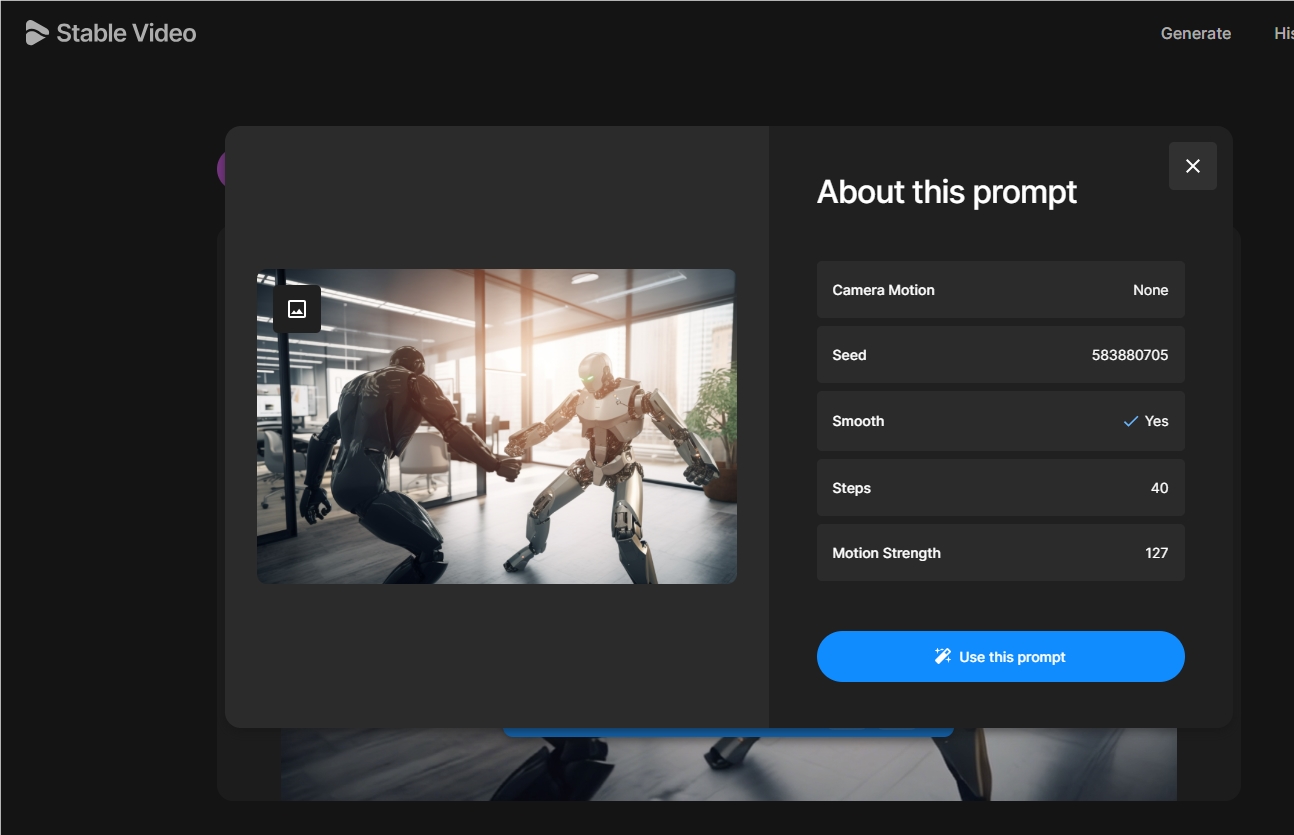

Step 6: Reuse a prompt and parameter template

After a video is generated, you can view the prompt and parameters used for it on the video page. If you like the result, select this template to generate more videos with the same settings.

You can likewise reuse the prompt and parameter templates from the official showcase examples to generate your own videos.

That covers how to use Stable Video.

Public beta entry: https://top.aibase.com/tool/stable-video

I hope this tutorial helps you get started with Stable Video quickly and create videos you are happy with! For more AI tool tips, keep following the editor of Downcodes!