Today, with the rapid development of AI technology, video generation technology has also made significant progress. The editor of Downcodes will introduce you to Snap Video, an innovative model that can automatically generate high-quality videos through text descriptions. It breaks through the bottleneck of traditional video generation technology and achieves a more efficient, realistic, and scalable video creation experience. Snap Video not only makes breakthroughs in technology, but also optimizes user experience, bringing users unprecedented convenience in video creation.

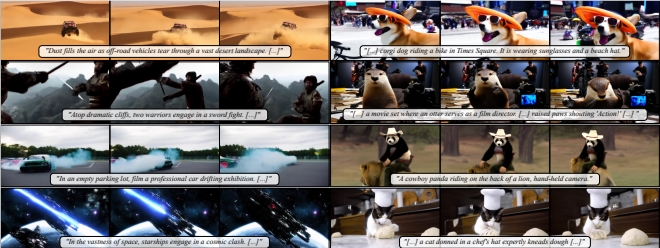

In the age of digital media, video has become the primary way we express ourselves and share our stories. But the creation of high-quality videos often requires specialized skills and expensive equipment. Now, with Snap Video, you only need to describe the scene you want with text, and the video will be automatically generated.

Current image generation models have demonstrated remarkable quality and diversity. Inspired by this, researchers began to apply these models to video generation. However, the high redundancy of video content makes directly applying image models to the field of video generation, which will reduce the authenticity, visual quality and scalability of actions.

Snap Video is a video-centric model that systematically addresses these challenges. First, it extends the EDM framework to consider redundant pixels in space and time, naturally supporting video generation. Secondly, it proposes a novel transformer-based architecture that is 3.31 times faster in training and 4.5 times faster in inference than U-Net. This enables Snap Video to efficiently train text-to-video models with billions of parameters, achieve state-of-the-art results for the first time, and generate videos with higher quality, temporal consistency, and significant motion complexity.

Technical Highlights:

Joint spatiotemporal modeling: Snap Video is able to synthesize coherent videos with large-scale motion while retaining the semantic control of large-scale text-to-video generators.

High-resolution video generation: A two-stage cascade model is used to first generate low-resolution video and then perform high-resolution upsampling to avoid potential temporal inconsistency issues.

FIT-based architecture: Snap Video utilizes the FIT (Far-reaching Interleaved Transformers) architecture to achieve efficient joint modeling of spatio-temporal computing by learning compressed video representations.

Snap Video is evaluated on widely adopted datasets such as UCF101 and MSR-VTT, showing particular advantages in generating action quality. User studies also show that Snap Video outperforms state-of-the-art methods in terms of video text alignment, number of actions, and quality.

The paper also discusses other research efforts in the field of video generation, including methods based on adversarial training or autoregressive generation techniques, and recent advances in employing diffusion models in text-to-video generation tasks.

Snap Video systematically solves common problems of diffusion processes and architectures in text-to-video generation by treating videos as first-class citizens. Its proposed modified EDM diffusion framework and FIT-based architecture significantly improve the quality and scalability of video generation.

Paper address: https://arxiv.org/pdf/2402.14797

All in all, Snap Video has made remarkable achievements in the field of text-to-video generation, and its efficient architecture and excellent performance provide new possibilities for future video creation. The editor of Downcodes believes that this technology will have a profound impact on the field of video creation.