In this article, the Downcodes editor introduces MotionClone, a technique for cloning motion in AI video generation. Generating video from text is no longer a distant dream, but accurately capturing and reproducing motion has remained a technical bottleneck. MotionClone addresses this by cloning the motion in a reference video and applying it to a new text description, without the model training that traditional methods rely on. Let us look at how it works.

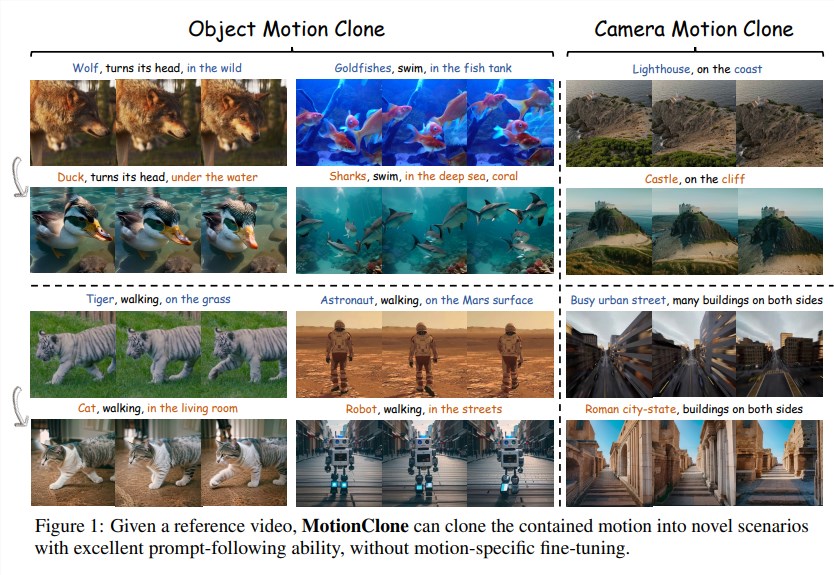

In digital content creation, generating video from a text description has long been an active research topic. MotionClone goes a step further: it clones the motion in a reference video and applies it to a new text description to create new video content.

Existing text-to-video (T2V) generation models have made progress, but they still struggle with motion synthesis. Traditional methods usually train or fine-tune a model to encode motion cues, and they tend to perform poorly on motion types they have not seen before.

MotionClone proposes a training-free framework that clones motion directly from a reference video to control text-to-video generation. The framework uses temporal attention to capture the motion in the reference video, and it introduces primary temporal attention guidance to reduce the influence of noise and very subtle movements on the attention weights. In addition, to help the generative model synthesize plausible spatial relationships and follow the text prompt more closely, the researchers propose a position-aware semantic guidance mechanism.
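To make the idea of temporal attention concrete, here is a minimal sketch in plain Python. It is not MotionClone's code: the function name `temporal_attention` and the toy feature vectors are hypothetical, and the sketch uses the raw features as both queries and keys, whereas a real video diffusion model would apply learned projections first. It only shows the core operation, in which each frame at one spatial location attends to every other frame at that same location.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of scores."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def temporal_attention(features):
    """Attention weights across frames for one spatial location.

    features: list of F per-frame feature vectors (assumed toy input).
    Returns an F x F matrix; row i holds how strongly frame i attends
    to each frame. Queries and keys are the raw features here, which is
    a simplification (no learned projections).
    """
    dim = len(features[0])
    scale = 1.0 / math.sqrt(dim)
    weights = []
    for q in features:
        scores = [scale * sum(a * b for a, b in zip(q, k)) for k in features]
        weights.append(softmax(scores))
    return weights


# Four frames: the feature at this location drifts from one pattern to another.
frames = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
attn = temporal_attention(frames)
for row in attn:
    print([round(w, 3) for w in row])
```

Each row sums to 1, and frames with similar features attend to each other more strongly. How those weights change from frame to frame is the kind of signal that can describe motion.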

Technical Highlights:

Temporal attention mechanism: The motion in the reference video is represented by the temporal attention obtained through video inversion.

Primary temporal attention guidance: Only the primary (dominant) components of the temporal attention weights are used to guide motion in the generated video.

Position-aware semantic guidance: The coarse foreground location in the reference video and the original classifier-free guidance features are used to guide video generation.
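The second highlight, using only the primary components of the attention weights, can be sketched as a masked comparison. This is an illustration under stated assumptions, not the authors' implementation: `top_k_mask`, `motion_energy`, the choice of a top-k mask, and the squared-difference energy are all hypothetical names and choices made here. In a real diffusion pipeline, an energy like this would be differentiated with respect to the latent to steer denoising; that step is omitted.

```python
def top_k_mask(row, k):
    """Return a 0/1 mask that keeps the k largest entries of one attention row."""
    keep = sorted(range(len(row)), key=lambda j: row[j], reverse=True)[:k]
    return [1.0 if j in keep else 0.0 for j in range(len(row))]


def motion_energy(ref_attn, gen_attn, k=1):
    """Squared difference between reference and generated attention,
    counted only where the reference attention is among its top k.

    Small reference weights (assumed to reflect noise or subtle motion)
    are masked out and do not contribute.
    """
    energy = 0.0
    for ref_row, gen_row in zip(ref_attn, gen_attn):
        mask = top_k_mask(ref_row, k)
        energy += sum(m * (r - g) ** 2 for m, r, g in zip(mask, ref_row, gen_row))
    return energy


# Toy 3-frame attention matrices (made-up numbers for illustration).
ref_attn = [[0.7, 0.2, 0.1], [0.1, 0.6, 0.3], [0.2, 0.2, 0.6]]
# Same dominant weights as the reference; only the small weights differ.
same_motion = [[0.7, 0.1, 0.2], [0.2, 0.6, 0.2], [0.3, 0.1, 0.6]]
# Dominant weights moved to other frames.
different_motion = [[0.1, 0.2, 0.7], [0.3, 0.6, 0.1], [0.6, 0.2, 0.2]]

print(motion_energy(ref_attn, same_motion, k=1))
print(motion_energy(ref_attn, different_motion, k=1))
print(motion_energy(ref_attn, same_motion, k=3))
```

With `k=1`, the first comparison gives zero energy because only the small weights differ, while the second is penalized for moving the dominant weights. Setting `k=3` removes the mask, so the small differences start to count again; this is the noise sensitivity that masking is meant to avoid.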

In the researchers' extensive experiments, MotionClone handled both global camera motion and local object motion well, with significant advantages in motion fidelity, text alignment, and temporal consistency.

MotionClone could bring substantial change to video creation: it can improve both the quality of generated video and the efficiency of producing it. As the technique continues to develop, video creation may become more intelligent and personalized, moving closer to the creative vision of "what you want is what you get".

Project address: https://top.aibase.com/tool/motionclone

With its training-free approach to motion cloning, MotionClone opens up new possibilities for video creation, and its future development and applications are worth watching.