The field of artificial intelligence is changing with each passing day, and causal reasoning has become a hot research topic in recent years. Traditional machine learning models often fall short in logical reasoning and find it difficult to understand the causal relationships behind events. Today, the editor of Downcodes will introduce a research paper from institutions such as Microsoft and MIT. It proposes a machine learning training strategy that significantly improves the logical reasoning capabilities of models: even a small Transformer model trained this way is reported to be comparable to GPT-4 in reasoning capabilities. Let's take a closer look at this impressive research result.

In this era of information explosion, we deal with smart devices every day. Have you ever wondered how these seemingly smart devices know to suggest bringing an umbrella because it is raining? Behind this lies a profound shift toward causal reasoning.

A group of researchers from renowned institutions, including Microsoft and MIT, have developed a new machine learning training strategy aimed at the shortcomings of machine learning models in logical reasoning. The key points are as follows:

Unique training method: Instead of relying only on conventional training techniques, the researchers train the model directly on demonstrations of causal axioms.

Improvements in logical reasoning: According to the paper, this approach significantly improves the model's logical reasoning capabilities, an area where conventionally trained models have struggled.

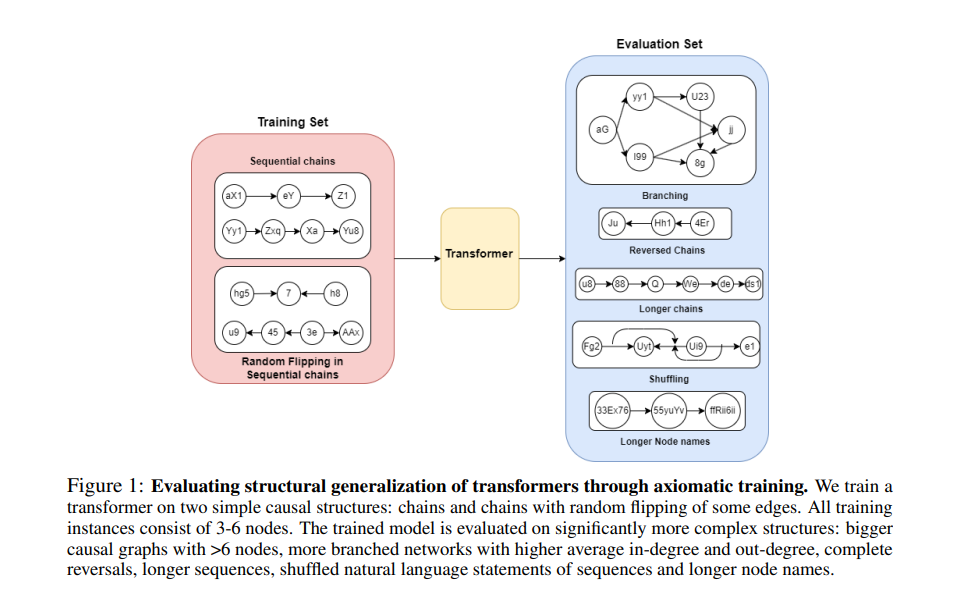

Use causality to construct a training set: The research team uses a causal model to construct the training data set. Such a model describes the causal connections between variables, so the data generated from it can help train a model that understands the causal logic behind the data.

Teach the model basic axioms: The researchers teach basic premises from logic and mathematics directly to the model to help it perform better logical reasoning.

The amazing performance of the small Transformer model: Although it has only 67 million parameters, the Transformer model trained with this method is reported to be comparable to GPT-4 in reasoning capabilities.
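To make two of the points above more concrete (building training data from a causal model, and teaching an axiom directly), here is a minimal Python sketch. It assumes the simplest possible causal model, a linear chain of variables, and a single axiom, transitivity. The sentence template "A causes B." and the helper names `chain_to_premise`, `label_query` and `make_example` are illustrative assumptions, not the paper's actual code or data format.

```python
import random

def chain_to_premise(chain):
    """Describe a linear causal chain, e.g. ['A', 'B', 'C'], in plain sentences."""
    return " ".join(f"{a} causes {b}." for a, b in zip(chain, chain[1:]))

def label_query(chain, cause, effect):
    """In a linear chain, transitivity means `cause` affects `effect`
    exactly when it appears earlier in the chain."""
    return "Yes" if chain.index(cause) < chain.index(effect) else "No"

def make_example(num_nodes, rng):
    """Build one (text, label) training pair from a random chain (hypothetical format)."""
    chain = [f"V{i}" for i in range(num_nodes)]
    rng.shuffle(chain)  # random order, so variable names carry no hint
    cause, effect = rng.sample(chain, 2)
    text = f"{chain_to_premise(chain)} Does {cause} cause {effect}?"
    return text, label_query(chain, cause, effect)

if __name__ == "__main__":
    rng = random.Random(0)
    for _ in range(3):
        print(make_example(4, rng))
```

Each generated pair states the causal structure as a premise, asks a question, and carries a label derived from the axiom, so a model trained on many such pairs sees the rule demonstrated rather than described.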

Causal reasoning may sound like the preserve of philosophers, but in fact it runs through every aspect of our lives. For artificial intelligence, mastering causal reasoning is like learning to explain the world using "because... so...". But AI is not born with this ability. It has to learn it, and that learning process is the story of this paper.

Axiom training method:

Imagine you have a student who is very smart but has no clue about cause and effect in the world. How do you teach such a student? The researchers came up with a solution: axiom training. This is like giving the AI a "causality manual" and letting it learn from this manual how to identify and apply causal rules.

The researchers conducted experiments with a Transformer model and found that this training method really works. Not only did the AI learn to identify causal relationships on small graphs, it was also able to apply this knowledge to larger graphs, even though it had never seen graphs that large during training.
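The idea of testing on graphs larger than those seen in training can be sketched as a small evaluation harness. The Python below is a hedged illustration, not the paper's evaluation code: it assumes chain-shaped graphs, uses a graph search as ground truth, and takes any `predict(edges, src, dst)` function as a stand-in for a trained model. All function names here are hypothetical.

```python
import random
from collections import defaultdict

def causes(edges, src, dst):
    """Ground truth: is there a directed path from src to dst?"""
    children = defaultdict(list)
    for a, b in edges:
        children[a].append(b)
    stack, seen = [src], {src}
    while stack:
        node = stack.pop()
        for nxt in children[node]:
            if nxt == dst:
                return True
            if nxt not in seen:
                seen.add(nxt)
                stack.append(nxt)
    return False

def random_chain_edges(num_nodes, rng):
    """Edges of a linear chain over a random ordering of the nodes."""
    order = list(range(num_nodes))
    rng.shuffle(order)
    return list(zip(order, order[1:]))

def accuracy_by_size(predict, sizes, trials=200, seed=0):
    """Score `predict` separately for each graph size."""
    rng = random.Random(seed)
    results = {}
    for n in sizes:
        correct = 0
        for _ in range(trials):
            edges = random_chain_edges(n, rng)
            src, dst = rng.sample(range(n), 2)
            correct += predict(edges, src, dst) == causes(edges, src, dst)
        results[n] = correct / trials
    return results

def direct_edge_only(edges, src, dst):
    """Stand-in 'model' that knows direct edges but not transitivity."""
    return (src, dst) in edges

if __name__ == "__main__":
    # Sizes 3-6 play the role of training sizes; 10 and 15 are unseen, larger graphs.
    print(accuracy_by_size(direct_edge_only, [3, 6, 10, 15]))
```

Reporting accuracy per graph size, rather than as one overall number, is what lets a reader see whether a model keeps working beyond the sizes it was trained on.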

The contribution of this research is that it provides a new method for AI to learn causal inference from passive data. This is like giving AI a new way of "thinking" so that it can better understand and explain the world.

This research not only shows that AI can learn causal reasoning, but also hints at possible application scenarios for AI in the future. Perhaps in the near future, our smart assistants will be able not only to answer questions but also to tell us why something is happening.

Paper address: https://arxiv.org/pdf/2407.07612v1

All in all, this research has brought significant improvements to the causal reasoning capabilities of artificial intelligence and provided new directions and possibilities for future AI development. The editor of Downcodes looks forward to the application of this technology in more fields, allowing AI to better understand and serve humans.