Image-to-video (I2V) generation is evolving rapidly toward more realistic and more controllable video. This article introduces Motion-I2V, a framework developed by Xiaoyu Shi, Zhaoyang Huang, and colleagues that approaches I2V generation through explicit motion modeling. It decomposes image-to-video conversion into two stages, combining motion field prediction with motion-augmented temporal layers to produce higher-quality, more consistent, and more controllable video.

As artificial intelligence advances, image-to-video (I2V) generation has become an active research topic. The team behind Motion-I2V, including Xiaoyu Shi and Zhaoyang Huang, uses explicit motion modeling to make image-to-video generation more consistent and controllable. The approach improves the quality and consistency of the generated video and gives users finer control over the result.

Keeping generated videos coherent and controllable has long been a technical challenge in image-to-video generation. Traditional I2V methods directly learn the complex mapping from an image to a video. Motion-I2V instead decomposes this process into two stages and introduces explicit motion modeling in both.

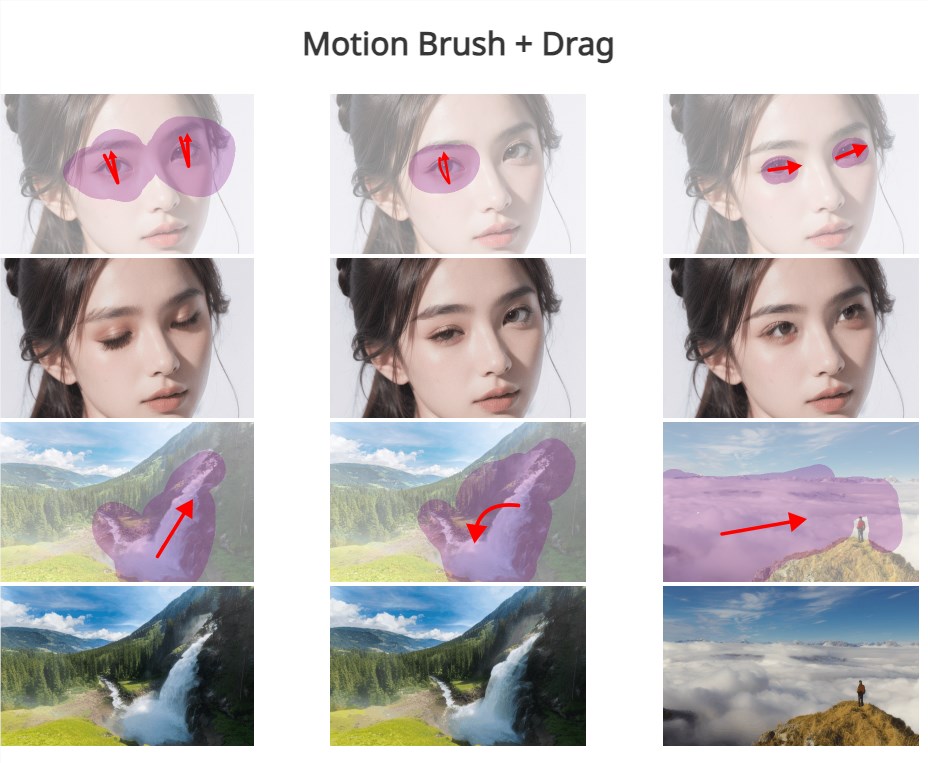

In the first stage, Motion-I2V uses a diffusion-based motion field predictor that focuses on deriving the trajectories of the reference image's pixels. Given the reference image and a text prompt, it predicts the motion field maps between the reference frame and all future frames. The second stage propagates the content of the reference image to the synthesized frames. It introduces a novel motion-augmented temporal layer that enhances the 1-D temporal attention, which enlarges the temporal receptive field and reduces the difficulty of learning complex spatiotemporal patterns directly.
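To make the idea of a motion field concrete, the following is a minimal sketch, not the framework's implementation. It assumes a motion field is a per-pixel `(dx, dy)` displacement from the reference frame to one future frame, and it moves raw pixel values on a tiny grid. Motion-I2V itself predicts the fields with a diffusion model and propagates content inside its motion-augmented temporal layer rather than by moving pixels like this. The names `forward_warp` and `uniform_flow` are hypothetical.

```python
from typing import List, Optional, Tuple

Flow = List[List[Tuple[int, int]]]  # per-pixel (dx, dy) displacement, in pixels


def forward_warp(reference: List[List[int]], flow: Flow) -> List[List[Optional[int]]]:
    """Move each reference pixel along its displacement vector.

    Target positions that receive no pixel stay None (holes). If two pixels
    land on the same target, the later one in scan order wins.
    """
    height, width = len(reference), len(reference[0])
    out: List[List[Optional[int]]] = [[None] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            dx, dy = flow[y][x]
            tx, ty = x + dx, y + dy
            if 0 <= tx < width and 0 <= ty < height:
                out[ty][tx] = reference[y][x]
    return out


def uniform_flow(height: int, width: int, dx: int, dy: int) -> Flow:
    """Hypothetical helper: every pixel gets the same displacement."""
    return [[(dx, dy)] * width for _ in range(height)]


reference = [
    [1, 2, 3],
    [4, 5, 6],
]

# One motion field per future frame: frame k shifts everything k pixels right.
flows = [uniform_flow(2, 3, k, 0) for k in (1, 2)]
frames = [forward_warp(reference, flow) for flow in flows]

for frame in frames:
    print(frame)
# [[None, 1, 2], [None, 4, 5]]
# [[None, None, 1], [None, None, 4]]
```

The `None` holes show why warping alone is not enough: regions uncovered by the motion have no source pixel in the reference image, so a generative second stage still has to synthesize them.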

Compared with existing methods, Motion-I2V shows clear advantages. In scenarios such as "a fast-moving tank", "a blue BMW driving fast", "three clear ice cubes", or "a crawling snail", Motion-I2V produces more consistent video and maintains high-quality output even under large motion and viewpoint changes.

Motion-I2V also lets users precisely control motion trajectories and motion regions through sparse trajectory and region annotations, which offers more control than text instructions alone. This improves the interactive experience and opens the door to customized, personalized video generation.
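As an illustration of what sparse trajectory and region annotations might look like as data, here is a minimal sketch. The encoding is an assumption made for this example: a drag is stored as a displacement at its start pixel, and a region annotation is a boolean mask that zeroes motion outside the selected area. The article does not describe how Motion-I2V actually feeds these annotations to its motion field predictor, and the function names `sparse_motion_hint` and `restrict_to_region` are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[int, int]             # (x, y) pixel coordinate
Flow = List[List[Tuple[int, int]]]  # per-pixel (dx, dy) displacement


def sparse_motion_hint(height: int, width: int,
                       drags: List[Tuple[Point, Point]]):
    """Encode user drags as a sparse displacement map plus a validity mask."""
    hint: Flow = [[(0, 0)] * width for _ in range(height)]
    valid = [[False] * width for _ in range(height)]
    for (x0, y0), (x1, y1) in drags:
        hint[y0][x0] = (x1 - x0, y1 - y0)
        valid[y0][x0] = True
    return hint, valid


def restrict_to_region(flow: Flow, mask: List[List[bool]]) -> Flow:
    """Keep motion only where the region mask is True; freeze everything else."""
    return [
        [vec if keep else (0, 0) for vec, keep in zip(flow_row, mask_row)]
        for flow_row, mask_row in zip(flow, mask)
    ]


# A single drag from pixel (0, 0) to pixel (2, 1) on a 2x3 image.
hint, valid = sparse_motion_hint(2, 3, [((0, 0), (2, 1))])
print(hint[0][0], valid[0][0])  # (2, 1) True

# A dense field moving everything one pixel right, limited to the left two columns.
dense = [[(1, 0)] * 3 for _ in range(2)]
mask = [[True, True, False], [True, True, False]]
masked = restrict_to_region(dense, mask)
print(masked[0])  # [(1, 0), (1, 0), (0, 0)]
```

Keeping the validity mask separate from the displacement map lets a "no motion" annotation be distinguished from "no annotation", since both would otherwise appear as `(0, 0)`.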

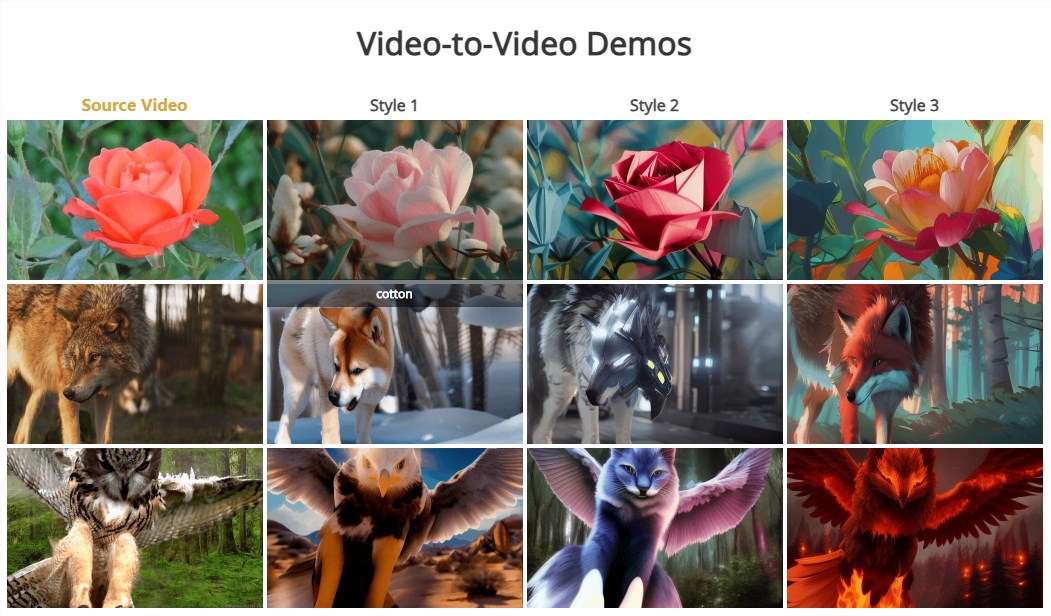

The second stage of Motion-I2V also naturally supports zero-shot video-to-video translation, meaning a video can be converted to a different style or content without additional training on such examples.

Motion-I2V marks a notable step forward for image-to-video generation. It improves quality and consistency and shows strong potential for user control and personalization. As the technology matures, Motion-I2V could play an important role in film and television production, virtual reality, game development, and other fields, enabling richer and more vivid visual experiences.

Project page: https://xiaoyushi97.github.io/Motion-I2V/

GitHub repository: https://github.com/GUN/Motion-I2V

Motion-I2V opens new possibilities for I2V technology, and its gains in generation quality, consistency, and user control make it a framework worth watching. We look forward to more innovative applications built on it.