The Depth Anything V2 model led by ByteDance interns has been included in Apple's Core ML model library, once again proving the strong strength of China's younger generation in the field of artificial intelligence. This model has received widespread attention in the industry for its excellent monocular depth estimation capabilities and wide application prospects in multiple fields. The editor of Downcodes will give you an in-depth understanding of this eye-catching project and the wonderful stories of the interns behind it.

ByteDance's large model team has made another contribution. Their Depth Anything V2 model has been included in Apple's Core ML model library. This achievement is not only a breakthrough in technology, but what is even more remarkable is that the leader of this project turned out to be an intern.

Depth Anything V2 is a monocular depth estimation model that can estimate the depth information of a scene from a single image. From the V1 version in early 2024 to the current V2, the number of parameters of this model has expanded from 25M to 1.3B. Its application range covers video special effects, autonomous driving, 3D modeling, augmented reality and other fields.

This model has received 8.7k Stars on GitHub, the V2 version has 2.3k Stars shortly after its release, and the V1 version has received 6.4k Stars. Such an achievement is worthy of pride for any technical team, not to mention that the main force behind it is an intern.

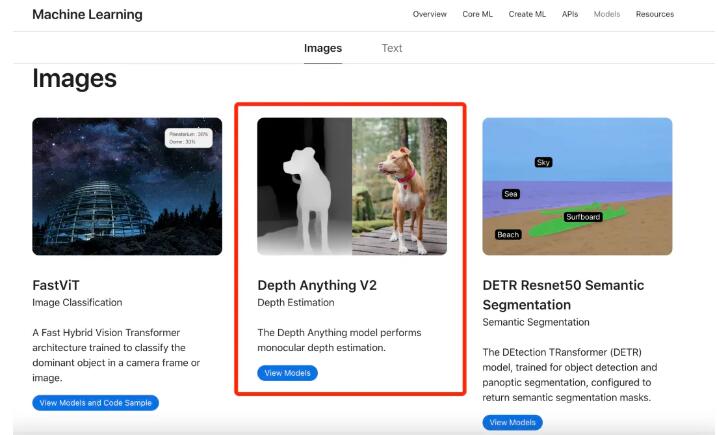

Apple has included Depth Anything V2 into the Core ML model library, which is a high recognition of the model’s performance and application prospects. Core ML, as Apple's machine learning framework, enables machine learning models to run efficiently on devices such as iOS and MacOS, and can perform complex AI tasks even without an Internet connection.

The Core ML version of Depth Anything V2 uses a model of at least 25M. After optimization by HuggingFace official engineering, the inference speed on iPhone12Pro Max reaches 31.1 milliseconds. This, together with other selected models such as FastViT, ResNet50, YOLOv3, etc., covers multiple fields from natural language processing to image recognition.

In the wave of large models, the value of Scaling Laws is recognized by more and more people. The Depth Anything team chose to build a simple yet powerful base model to achieve better results on a single task. They believe that using Scaling Laws to solve some basic problems is more practical. Depth estimation is one of the important tasks in the field of computer vision. Inferring the distance information of objects in the scene from images is crucial for applications such as autonomous driving, 3D modeling, and augmented reality. Depth Anything V2 not only has broad application prospects in these fields, but can also be integrated into video platforms or editing software as middleware to support special effects production, video editing and other functions. One of the candidates for the Depth Anything project was an intern on the team. Under the guidance of Mentor, this rising star completed most of the work from project conception to thesis writing in less than a year. The company and team provide a free research atmosphere and sufficient support, encouraging interns to delve into more difficult and essential problems.

The growth of this intern and the success of Depth Anything V2 not only demonstrate personal efforts and talents, but also reflect ByteDance’s in-depth exploration and talent cultivation in visual generation and large model-related fields.

Project address: https://top.aibase.com/tool/depth-anything-v2

The success of Depth Anything V2 lies not only in its technological breakthroughs, but also in the training model of the team behind it and its emphasis on talents. This provides valuable experience for other companies to explore in the field of artificial intelligence, and also indicates that more outstanding talents will emerge in the future. I hope more young people can be inspired by this story, bravely pursue their dreams, and create their own glory.