The editor of Downcodes takes you inside the Transformer model! A recent paper titled "Transformer Layers as Painters" explains how the middle layers of a Transformer work from a painter's perspective. Using a metaphor backed by experiments, the paper shows how the layers of a Transformer operate and offers a new way to think about the internals of large language models. The authors compare each layer to a painter, with all the painters working together on one large picture of language, and they test this view with a series of experiments.

In the world of artificial intelligence, there is a special group of painters: the layers of the Transformer model. Like paintbrushes, they fill in a picture on the canvas of language, one layer after another. The paper asks what each of these painters contributes, with a focus on the middle layers.

The Transformer is currently the most popular architecture for large language models, and such models often have billions of parameters. Each layer is like a painter, and together the layers complete one large picture. But how do these painters work together? How do the brushes and paints they use differ? The paper attempts to answer these questions.

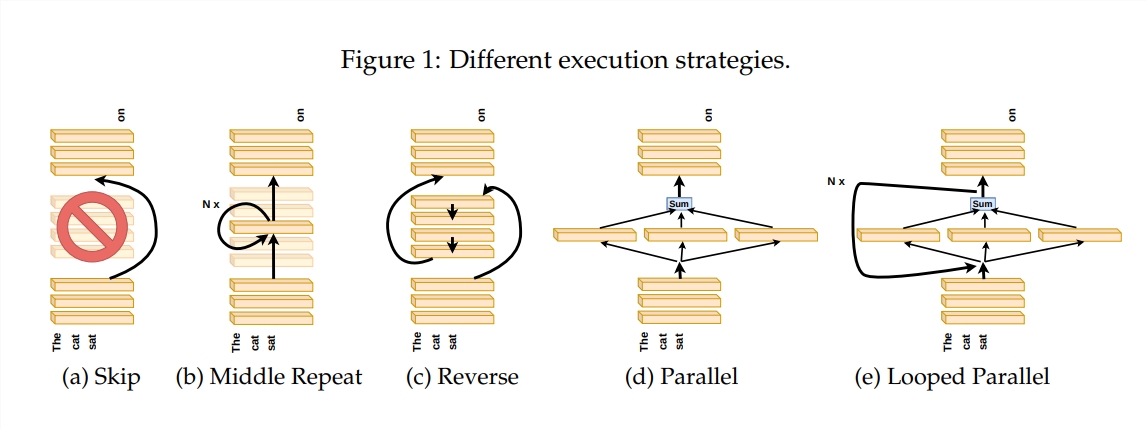

To explore how the Transformer layers work, the authors designed a series of experiments, including skipping certain layers, changing the order of layers, and running layers in parallel. These experiments are like setting different rules for the painters to see whether they can adapt.
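The three kinds of intervention can be sketched on a toy residual stack. This is only an illustration, not the paper's code: the layers are made-up `tanh` functions, and every name here (`make_layer`, `run_skip`, `run_reversed_middle`, `run_parallel_middle`) is hypothetical. Averaging the parallel outputs is also an assumption of this sketch.

```python
import math

def make_layer(scale, shift):
    """Return a toy 'painter': a function that proposes an update to the canvas."""
    def layer(x):
        return [math.tanh(scale * v + shift) for v in x]
    return layer

def add(x, delta):
    return [a + b for a, b in zip(x, delta)]

def run_sequential(layers, x):
    # Ordinary residual stack: each layer adds its update to the running canvas.
    for f in layers:
        x = add(x, f(x))
    return x

def run_skip(layers, x, start, end):
    # Drop the layers with indices in [start, end).
    return run_sequential(layers[:start] + layers[end:], x)

def run_reversed_middle(layers, x, start, end):
    # Keep every layer but reverse the order of the middle block.
    reordered = layers[:start] + layers[start:end][::-1] + layers[end:]
    return run_sequential(reordered, x)

def run_parallel_middle(layers, x, start, end):
    # Every middle layer sees the same input; their outputs are averaged
    # (an assumption of this sketch) before the final layers run.
    x = run_sequential(layers[:start], x)
    middle = layers[start:end]
    if middle:
        outs = [add(x, f(x)) for f in middle]
        x = [sum(vals) / len(outs) for vals in zip(*outs)]
    return run_sequential(layers[end:], x)

# Six toy layers with arbitrary, made-up parameters.
layers = [make_layer(0.3 * (i + 1), 0.1 * i - 0.2) for i in range(6)]
canvas = [0.5, -1.0, 2.0]

baseline = run_sequential(layers, canvas)
skipped = run_skip(layers, canvas, 2, 4)
reversed_mid = run_reversed_middle(layers, canvas, 1, 5)
parallel_mid = run_parallel_middle(layers, canvas, 1, 5)
```

Comparing `skipped`, `reversed_mid`, and `parallel_mid` against `baseline` mirrors the question the experiments ask of a real model: how far does the output move when the painting rules change?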

In the "painter's pipeline" metaphor, the input is viewed as a canvas, and passing through the intermediate layers is like passing the canvas along an assembly line. Each "painter", that is, each layer of the Transformer, modifies the painting according to its own expertise. The analogy makes it easier to reason about what happens when layers are skipped, reordered, or run in parallel.

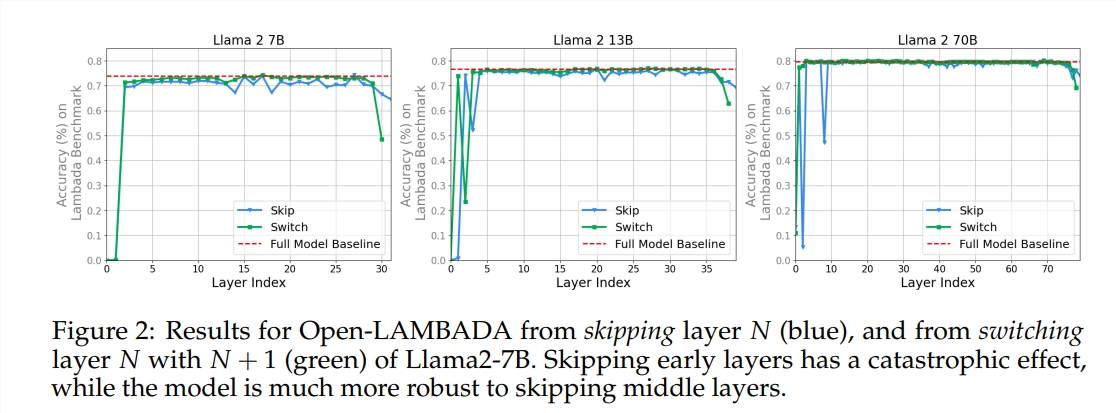

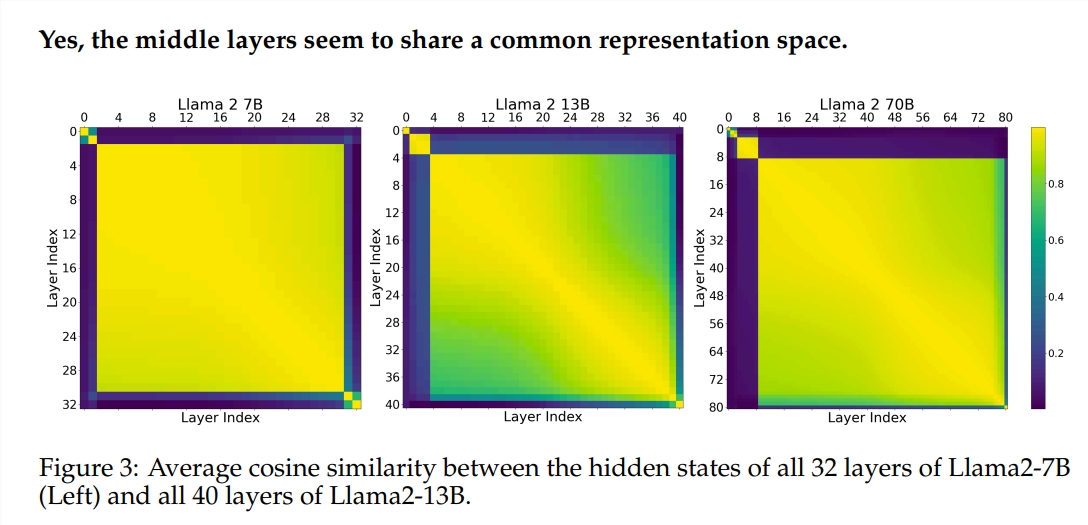

The experiments used two pre-trained large language models (LLMs): Llama2-7B and BERT. The study found that the painters in the middle layers seem to share a common paint box, that is, a common representation space, which differs from that of the first and last layers. Skipping some of the intermediate painters has little impact on the finished painting, indicating that not all painters are required.
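One way to picture a shared "paint box" is to compare the hidden states that different layers produce for the same input. The sketch below computes pairwise cosine similarity. It is an illustration under stated assumptions: the vectors in `hidden_states` are invented for the example rather than taken from Llama2-7B or BERT, and `cosine` and `similarity_matrix` are hypothetical helper names.

```python
import math

def cosine(u, v):
    """Cosine similarity of two non-zero vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def similarity_matrix(states):
    """Pairwise cosine similarity between per-layer hidden states."""
    return [[cosine(a, b) for b in states] for a in states]

# Made-up hidden states, one vector per layer (not real model activations).
hidden_states = [
    [1.0, 0.0, 0.0],    # first layer
    [0.2, 1.0, 0.9],    # middle layer
    [0.3, 1.1, 1.0],    # middle layer
    [0.25, 0.9, 1.1],   # middle layer
    [0.0, -1.0, 0.5],   # last layer
]

sim = similarity_matrix(hidden_states)
for row in sim:
    print(" ".join(f"{value:6.2f}" for value in row))
```

In this invented data the middle rows are close to one another and far from the first and last rows, which is the pattern the article describes for the real models.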

Although the painters in the middle layers use the same paint box, each applies its own skills to paint different patterns on the canvas. If one painter's technique is simply reused in place of the others, the painting loses its original charm.
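Reusing one painter's technique can be read as replacing a block of middle layers with copies of a single layer. The following is a minimal sketch of that reading on a toy residual stack, not the paper's setup: the `tanh` layers and their parameters are made up, and `repeat_one_layer` and `l2_distance` are hypothetical names.

```python
import math

def make_layer(scale, shift):
    """Toy layer: proposes an update to each element of the canvas."""
    def layer(x):
        return [math.tanh(scale * v + shift) for v in x]
    return layer

def run(layers, x):
    # Residual stack: each layer adds its update to the running canvas.
    for f in layers:
        x = [a + b for a, b in zip(x, f(x))]
    return x

def repeat_one_layer(layers, start, end, chosen):
    """Replace layers[start:end] with copies of layers[chosen]."""
    return layers[:start] + [layers[chosen]] * (end - start) + layers[end:]

def l2_distance(u, v):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))

# Six toy layers with arbitrary, made-up parameters.
layers = [make_layer(0.3 * (i + 1), 0.1 * i - 0.2) for i in range(6)]
canvas = [0.5, -1.0, 2.0]

baseline = run(layers, canvas)
looped = run(repeat_one_layer(layers, 1, 5, 3), canvas)
drift = l2_distance(baseline, looped)
print(f"distance from the original output: {drift:.3f}")
```

The depth of the stack is unchanged, yet the output moves away from the baseline because the distinct middle layers have been replaced by one repeated function.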

The order in which the painters work matters most for mathematical and reasoning tasks, which require strict logic. For tasks that rely on semantic understanding, the impact of order is relatively small.

The results show that the Transformer's middle layers are consistent with one another but not redundant. Not every layer is necessary: some intermediate layers can be skipped without catastrophically affecting model performance. Yet although the intermediate layers share the same representation space, they perform different functions. Changing the execution order of the layers degrades performance, and the effect is larger on mathematical and reasoning tasks than on semantic tasks.

Many researchers are also working to optimize the Transformer model, for example through pruning and parameter reduction. That work offers useful experience and context for understanding how the model behaves.

Paper address: https://arxiv.org/pdf/2407.09298v1

All in all, this paper offers a new perspective on the internal mechanism of the Transformer model and new ideas for future model optimization. The editor of Downcodes recommends that interested readers read the full paper for the details.