Downcodes has learned that OpenAI has released an experimental model, gpt-4o-64k-output-alpha. Its headline feature is that it can generate up to 64K output tokens in a single request. This lets it produce much longer and more detailed content, but it also comes with higher API prices. The model is available to Alpha participants and is suited to scenarios such as writing, programming, and complex data analysis, where it can give users more comprehensive and detailed support. Its cost deserves careful thought, however: output tokens are priced at $18 per million.

OpenAI has opened access to a new experimental model, gpt-4o-64k-output-alpha. Its biggest highlight is that a single response can contain up to 64K tokens. It can therefore produce richer and more detailed content in one request, but its API price is also higher.

Alpha participants can use GPT-4o's long-output capability by specifying the model name "gpt-4o-64k-output-alpha". The model is aimed at users who need longer outputs. Whether they are writing, programming, or performing complex data analysis, it can provide more comprehensive and detailed support.

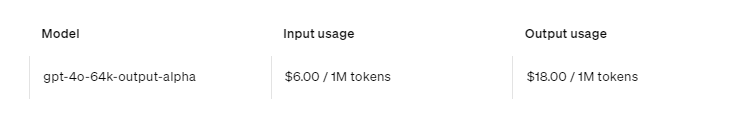

Longer outputs also mean higher costs. OpenAI makes it clear that long-output generation is more expensive, so the model is priced at $18 per million output tokens, compared with $6 per million input tokens. This pricing is designed to cover the higher computing cost while still encouraging users to take advantage of this powerful tool.
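To put these prices in perspective, here is a minimal sketch that estimates the cost of a single request from its token counts. It uses the $6 and $18 per-million rates quoted above. The function name `estimate_cost` and the example token counts are hypothetical. The sketch also treats "64K" as 64,000 tokens purely for illustration, and actual billing may differ.

```python
# Rates quoted in the article for gpt-4o-64k-output-alpha (USD per million tokens).
INPUT_PRICE_PER_MILLION = 6.00
OUTPUT_PRICE_PER_MILLION = 18.00


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Hypothetical helper: estimated USD cost of one request at the quoted rates."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    input_cost = input_tokens / 1_000_000 * INPUT_PRICE_PER_MILLION
    output_cost = output_tokens / 1_000_000 * OUTPUT_PRICE_PER_MILLION
    return input_cost + output_cost


if __name__ == "__main__":
    # Illustrative request: a 10,000-token prompt and a 64,000-token response
    # (assumes "64K" means 64,000 tokens).
    cost = estimate_cost(10_000, 64_000)
    print(f"Estimated cost: ${cost:.4f}")
```

In this example, the input costs $0.06 and the output costs $1.152, so a single request comes to roughly $1.21. Output tokens account for almost all of the cost.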

All in all, gpt-4o-64k-output-alpha gives users who need to process and generate very long text a new option. Its higher cost, however, means users should weigh it carefully against their own needs. Downcodes recommends choosing the model that fits your actual use case and making efficient use of resources.