The Downcodes editor walks you through a global research report from Checkmarx on how widely AI programming tools are used in software development and what security risks they bring. According to the report, adoption remains high even at companies that have explicitly banned these tools, which shows how hard it is for enterprises to manage generative AI. The report also examines how enterprises govern AI tools, how they assess the security risks, and how much developers trust these tools. It is a useful reference for any enterprise trying to use AI tools effectively.

According to a global study released by cloud security company Checkmarx, 15% of companies explicitly prohibit AI programming tools, yet 99% of development teams still use them. This gap shows how difficult it is to control the use of generative AI in day-to-day development.

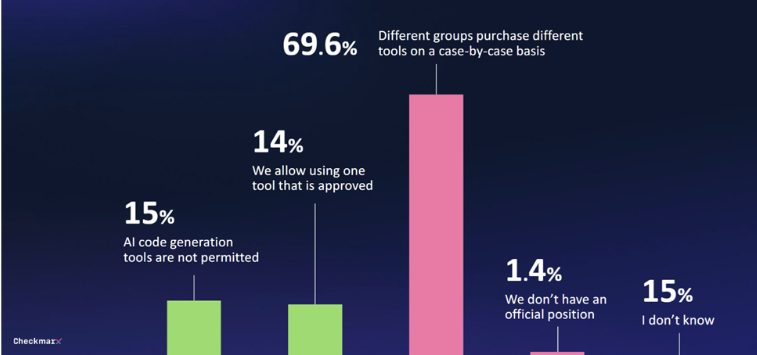

The study found that only 29% of companies have established any form of governance for generative AI tools. 70% have no central strategy. Instead, individual departments often make ad hoc purchasing decisions without unified oversight.

As more developers adopt AI programming tools, security concerns are growing. 80% of respondents are worried about the threats that developers' use of AI could introduce, and 60% are specifically concerned about AI "hallucinations."

Despite these concerns, many respondents remain enthusiastic about AI's potential. 47% say they are willing to let AI make code changes without supervision, while only 6% say they would not trust AI at all with security measures in software environments.

Kobi Tzruya, chief product officer at Checkmarx, noted: "Responses from CISOs around the world reveal that developers are using AI for application development even though these AI tools cannot reliably create secure code, which means security teams are dealing with a large volume of new, potentially vulnerable code."

Microsoft's Work Trend Index report likewise shows that many employees bring their own AI tools to work instead of using tools provided by their employer. They often do not disclose this, which hinders the systematic adoption of generative AI in business processes.

Highlights:

1. **15% of companies ban AI programming tools, but 99% of development teams still use them**

2. **Only 29% of companies have established a governance system for generative AI tools**

3. **47% of respondents are willing to let AI make unsupervised code changes**

Overall, the report underscores the need for enterprises to strengthen their management and security policies for AI programming tools, balancing the convenience AI brings against its potential risks. Enterprises should adopt a unified strategy and watch for the security vulnerabilities AI may introduce, so they can use AI to improve development efficiency safely. The Downcodes editor recommends following Checkmarx's future reports to keep up with developments in AI security.