OpenAI has been busy lately. Hot on the heels of Meta's open-source release of Llama 3.1, it has announced another big piece of news: from now until September 23, developers can fine-tune GPT-4o mini with 2 million free training tokens every day! The move shows OpenAI's generosity and also marks a new stage in the development of AI technology. In this article, the Downcodes editor takes an in-depth look at the advantages of GPT-4o mini and what this free fine-tuning offer means.

The news of Llama 3.1's open-source release is still fresh, and OpenAI has already stepped in to steal the spotlight again: from now until September 23, developers get 2 million training tokens per day to fine-tune the model for free. This is a generous gift to developers and a bold push for the advancement of AI technology.

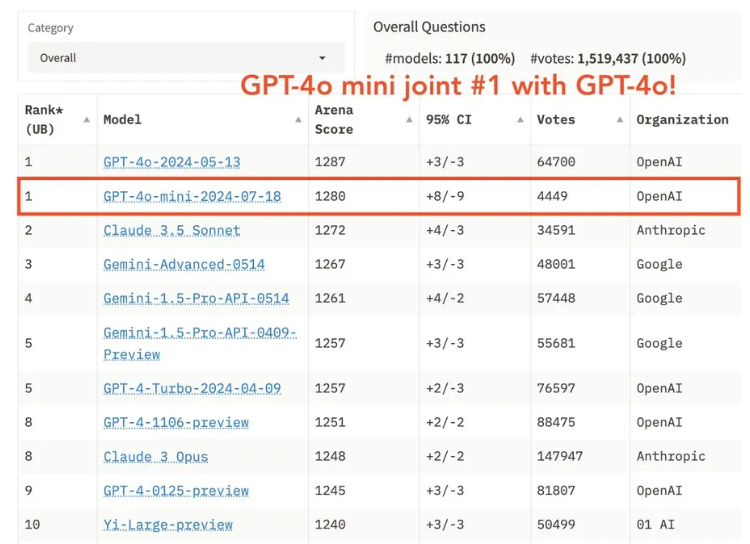

The launch of GPT-4o mini has excited countless developers. It shares first place with GPT-4o on the LMSYS Chatbot Arena leaderboard, delivering strong performance at only 1/20 of GPT-4o's price. This move by OpenAI is undoubtedly a major boon for the AI field.

Developers who received OpenAI's email excitedly spread the word: a freebie this big should be claimed as soon as possible. OpenAI announced that from July 23 to September 23, developers can use 2 million training tokens per day for free. Usage beyond that quota is billed at USD 3 per million training tokens.
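To make the quota concrete, here is a minimal Python sketch that estimates what one day's fine-tuning would cost under the terms above. It assumes, as OpenAI's fine-tuning guide describes, that billed training tokens equal the tokens in the training file multiplied by the number of epochs, and that the 2 million free tokens apply to the total trained in a single day. The function name and parameters are illustrative only, not part of any OpenAI API.

```python
FREE_TOKENS_PER_DAY = 2_000_000   # free daily quota during the promotion (from the article)
PRICE_PER_MILLION_USD = 3.0       # price per million training tokens beyond the quota


def estimate_training_cost(dataset_tokens: int, epochs: int,
                           free_tokens: int = FREE_TOKENS_PER_DAY,
                           price_per_million: float = PRICE_PER_MILLION_USD) -> float:
    """Estimate the USD cost of one day's training (illustrative assumption, not an official formula)."""
    if dataset_tokens < 0 or epochs < 1:
        raise ValueError("dataset_tokens must be >= 0 and epochs must be >= 1")
    # Assumption: every epoch passes over the whole training file once.
    billed_tokens = dataset_tokens * epochs
    # Only tokens above the free daily quota are charged.
    excess_tokens = max(0, billed_tokens - free_tokens)
    return excess_tokens / 1_000_000 * price_per_million


if __name__ == "__main__":
    # 500k-token dataset x 3 epochs = 1.5M tokens, inside the free quota
    print(estimate_training_cost(500_000, 3))    # 0.0
    # 1M-token dataset x 3 epochs = 3M tokens, of which 1M is billable
    print(estimate_training_cost(1_000_000, 3))  # 3.0
```

Because the quota is counted in trained tokens, the number of epochs matters as much as the dataset size: tripling the epochs triples the billed tokens.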

Compared with GPT-3.5 Turbo, GPT-4o mini offers three main advantages for fine-tuning.

More affordable: GPT-4o mini's input token price is 90% lower than GPT-3.5 Turbo's, and its output token price is 80% lower. Even after the free period ends, training GPT-4o mini costs half as much as training GPT-3.5 Turbo.

Longer context: GPT-4o mini's training context length is 65k tokens, 4 times that of GPT-3.5 Turbo, and its inference context length is 128k tokens, 8 times that of GPT-3.5 Turbo.

Smarter and more capable: GPT-4o mini is smarter than GPT-3.5 Turbo and supports vision (image input), although fine-tuning is currently limited to text.

GPT-4o mini fine-tuning is open to enterprise customers and to Tier 4 and Tier 5 developers, and access will gradually be expanded to all usage tiers. OpenAI has also published a fine-tuning guide to help developers get started quickly.
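As a starting point for the guide, the sketch below prepares a training file in the chat-style JSONL format that OpenAI's fine-tuning guide describes (one `{"messages": [...]}` object per line), runs a few basic sanity checks, and shows how a job could be submitted. The helper names (`make_example`, `write_jsonl`, `validate_jsonl`, `submit_job`) and the sample data are hypothetical. The upload and job calls assume the official `openai` Python package (v1 or later) with an `OPENAI_API_KEY` environment variable, and the model snapshot name `gpt-4o-mini-2024-07-18` may change over time, so check the documentation before relying on it. These checks are much less thorough than OpenAI's own validation.

```python
import json
import tempfile
from pathlib import Path


def make_example(system: str, user: str, assistant: str) -> dict:
    """Build one training example in the chat 'messages' format."""
    return {"messages": [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
        {"role": "assistant", "content": assistant},
    ]}


def write_jsonl(examples, path) -> int:
    """Write one JSON object per line; return the number of lines written."""
    count = 0
    with Path(path).open("w", encoding="utf-8") as f:
        for example in examples:
            f.write(json.dumps(example, ensure_ascii=False) + "\n")
            count += 1
    return count


def validate_jsonl(path) -> list:
    """Return a list of problems found; an empty list means these basic checks passed."""
    problems = []
    with Path(path).open(encoding="utf-8") as f:
        for lineno, line in enumerate(f, start=1):
            try:
                record = json.loads(line)
            except json.JSONDecodeError as exc:
                problems.append(f"line {lineno}: invalid JSON ({exc.msg})")
                continue
            messages = record.get("messages") if isinstance(record, dict) else None
            if not isinstance(messages, list) or not messages:
                problems.append(f"line {lineno}: missing 'messages' list")
                continue
            # An example with no assistant reply gives the model nothing to imitate.
            if not any(isinstance(m, dict) and m.get("role") == "assistant" for m in messages):
                problems.append(f"line {lineno}: no assistant message")
    return problems


def submit_job(path, model: str = "gpt-4o-mini-2024-07-18") -> str:
    """Upload the file and start a fine-tuning job (needs the openai package and an API key)."""
    from openai import OpenAI  # imported lazily so the helpers above work without it

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    with open(path, "rb") as f:
        training_file = client.files.create(file=f, purpose="fine-tune")
    job = client.fine_tuning.jobs.create(training_file=training_file.id, model=model)
    return job.id


if __name__ == "__main__":
    # Hypothetical sample data; a real dataset needs many more examples.
    demo = [make_example("You are a concise support assistant.",
                         "How do I reset my password?",
                         "Open Settings, choose Account, then select Reset password.")]
    out = Path(tempfile.mkdtemp()) / "train.jsonl"
    write_jsonl(demo, out)
    print(validate_jsonl(out))  # []
    # submit_job(out)  # uncomment once OPENAI_API_KEY is set
```

Keeping validation local means formatting mistakes surface before any tokens are uploaded or counted against the free quota.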

Not everyone is enthusiastic, though. Some users suspect that OpenAI is using the program to collect data for training and improving its own models. Others argue that GPT-4o mini's top ranking in the arena is real evidence that AI has become smart enough to fool us.

The release of GPT-4o mini and the free fine-tuning policy will undoubtedly drive the further development and adoption of AI technology. For developers, this is a golden opportunity to build more powerful applications at lower cost. Does it also mark a new milestone for AI technology itself? Let us wait and see.

Fine-tuning documentation: https://platform.openai.com/docs/guides/fine-tuning/fine-tuning-integrations

The free GPT-4o mini fine-tuning program gives developers an excellent opportunity to learn and experiment. The model's combination of low cost and high performance also points to where AI applications are heading. Let us look forward to the vigorous development of AI technology!