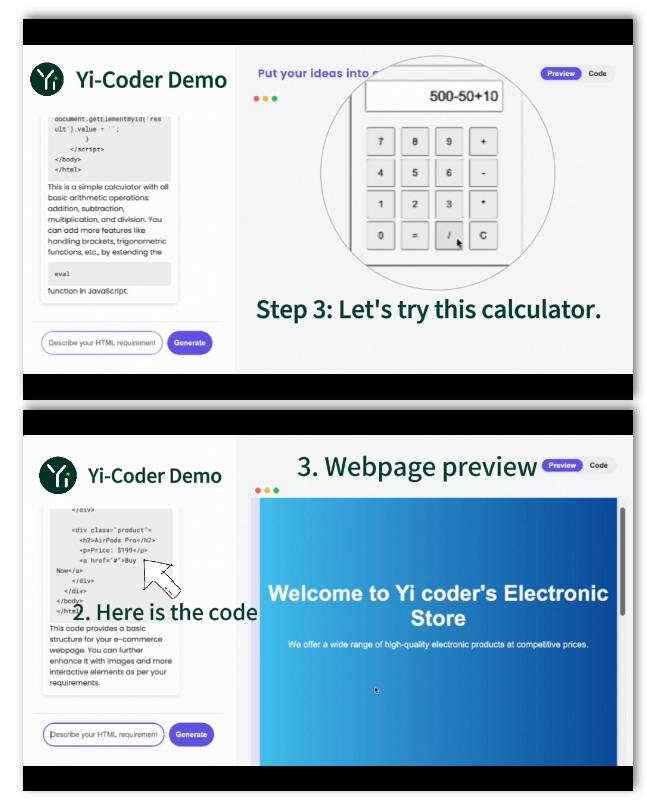

Beijing Zero-One Technology Co., Ltd. has open-sourced its Yi-Coder series of code models. The series comes in two parameter sizes, 1.5B and 9B. Yi-Coder-9B stands out in particular, showing leading performance in code generation, code understanding, debugging, and completion. The model supports multiple programming languages and a context window of up to 128K tokens, which lets it work with complex, project-level code.

The Yi-Coder models achieved strong results on benchmarks such as LiveCodeBench and CodeEditorBench. They outperformed many comparable models in code generation and code editing, and led among models with fewer than 10B parameters. Yi-Coder also performs well on code completion, long-sequence modeling (long-text understanding), and mathematical reasoning (problem solving).

Project repository: https://github.com/01-ai/Yi-Coder

The open-source release of the Yi-Coder series gives developers capable tools and advances the use of AI in programming. Its strong benchmark performance and broad range of potential applications make it a project worth following, and Yi-Coder is expected to be adopted in more scenarios and to keep improving.