In recent years, seamlessly integrating virtual objects into real scenes has remained a difficult problem in digital image processing. This article introduces DiPIR (Diffusion-Guided Inverse Rendering), a technique that combines large-scale diffusion models with physically based inverse rendering to insert virtual objects realistically into real scenes under a wide range of lighting conditions. DiPIR's key advance is that it recovers the scene's lighting and automatically adjusts the virtual object's material and lighting to match the environment, noticeably improving the realism and consistency of the composited image.

In digital image processing, a new technique called DiPIR (Diffusion-Guided Inverse Rendering) is drawing wide attention. Proposed by researchers as a recent method, it targets a long-standing problem: seamlessly inserting virtual objects into real scenes.

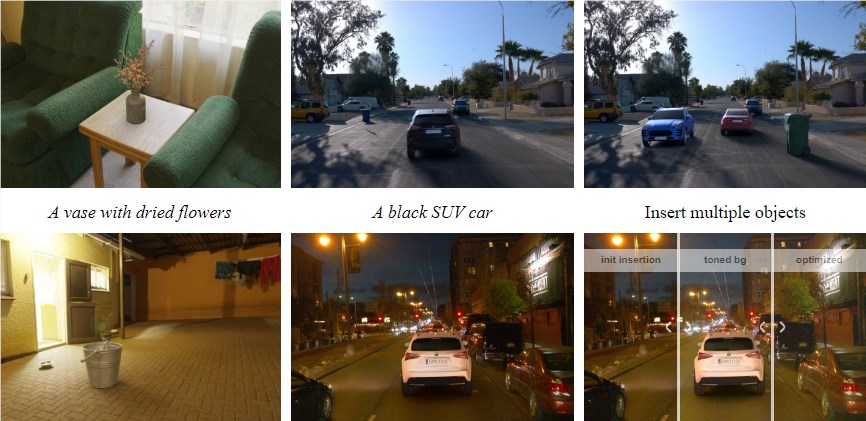

At its core, DiPIR combines a large-scale diffusion model with a physically based inverse rendering process to recover scene lighting from a single image. Beyond inserting an arbitrary virtual object into the image, it automatically adjusts the object's material and lighting so that the object blends naturally with its surroundings.

DiPIR first builds a virtual 3D scene from the input image, then uses a differentiable renderer to simulate how the virtual object interacts with that environment. At each iteration, the rendered result is passed through the diffusion model, and its feedback is used to optimize the environment lighting map and the tone-mapping curve until the generated image matches the lighting conditions of the real scene.
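The optimization loop described above can be illustrated with a heavily simplified, hypothetical Python sketch. It is not DiPIR's implementation. The "environment map" is reduced to three directional lights, the renderer is plain Lambertian shading of a few surface normals, the tone curve is an assumed one-parameter form `1 - exp(-s*x)`, and the diffusion model is replaced by a stand-in function `toy_guidance` that returns a gradient on the rendered image. This assumes guidance in the style of score distillation, where the diffusion model contributes a gradient on the image rather than a target image. All names (`SceneParams`, `render`, `tone_map`, `toy_guidance`, `loss`, `optimize`) are illustrative.

```python
import math
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


@dataclass
class SceneParams:
    """Hypothetical optimizable state: K directional lights standing in for an
    environment map, plus the strength s of a one-parameter tone curve."""
    light_dirs: list[Vec3]
    light_intensity: list[float]
    tone_strength: float = 1.0


def render(p: SceneParams, normals: list[Vec3], albedo: float) -> list[float]:
    """Toy 'differentiable renderer': Lambertian shading, linear radiance per sample."""
    return [albedo * sum(L * max(0.0, dot(n, d))
                         for L, d in zip(p.light_intensity, p.light_dirs))
            for n in normals]


def tone_map(x: float, s: float) -> float:
    # Assumed tone curve t(x) = 1 - exp(-s*x); monotonic in x for s > 0.
    return 1.0 - math.exp(-s * x)


def toy_guidance(image: list[float], target: list[float]) -> list[float]:
    """Stand-in for diffusion guidance: d/d(image) of 0.5 * sum((image - target)^2).
    A diffusion-guided method would obtain this gradient from the model instead."""
    return [px - t for px, t in zip(image, target)]


def loss(p: SceneParams, normals, albedo, target) -> float:
    image = [tone_map(r, p.tone_strength) for r in render(p, normals, albedo)]
    return 0.5 * sum((px - t) ** 2 for px, t in zip(image, target))


def optimize(p: SceneParams, normals, albedo, target, steps=200, lr=0.5):
    for _ in range(steps):
        s = p.tone_strength
        radiance = render(p, normals, albedo)
        image = [tone_map(r, s) for r in radiance]
        g_img = toy_guidance(image, target)
        # Chain rule through the tone curve: dt/dx = s*exp(-s*x), dt/ds = x*exp(-s*x).
        g_rad = [g * s * math.exp(-s * r) for g, r in zip(g_img, radiance)]
        g_s = sum(g * r * math.exp(-s * r) for g, r in zip(g_img, radiance))
        # Chain rule through the Lambertian renderer into each light intensity.
        for k, d in enumerate(p.light_dirs):
            g_L = sum(gr * albedo * max(0.0, dot(n, d)) for gr, n in zip(g_rad, normals))
            # Projected gradient step keeps intensities non-negative.
            p.light_intensity[k] = max(0.0, p.light_intensity[k] - lr * g_L)
        p.tone_strength = max(1e-3, s - lr * g_s)
    return p


# Usage: fit lighting and tone curve to a target rendered from hidden toy parameters.
normals = [(0.0, 0.0, 1.0), (0.6, 0.0, 0.8), (-0.6, 0.0, 0.8), (0.0, 0.6, 0.8)]
dirs = [(0.0, 0.0, 1.0), (0.6, 0.0, 0.8), (-0.6, 0.0, 0.8)]
hidden = SceneParams(dirs, [1.5, 0.2, 0.8], 1.0)
target = [tone_map(r, hidden.tone_strength) for r in render(hidden, normals, 0.7)]

estimate = SceneParams(dirs, [0.5, 0.5, 0.5], 1.0)
before = loss(estimate, normals, 0.7, target)
optimize(estimate, normals, 0.7, target)
after = loss(estimate, normals, 0.7, target)
print(f"loss before={before:.4f} after={after:.6f}")
```

Gradients are written out by hand only to keep the sketch dependency-free; a practical system would rely on an automatic-differentiation framework and a far richer differentiable renderer. The known target image exists solely so this toy loop has something to converge toward, whereas the method described in the article draws its signal from a diffusion model.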

A key advantage of DiPIR is its broad applicability: it handles scenes under very different lighting conditions, indoors or outdoors, by day or by night. According to the researchers' experiments, DiPIR performs well across multiple test scenarios and produces highly realistic images, addressing the lighting inconsistencies that affect current models.

Notably, DiPIR is not limited to still images. It also supports inserting objects into dynamic scenes and compositing virtual objects consistently across multiple viewpoints. These capabilities give DiPIR broad potential in virtual reality, augmented reality, synthetic data generation, and virtual production.

Project address: https://research.nvidia.com/labs/toronto-ai/DiPIR/

DiPIR offers a new approach to integrating virtual objects with real scenes. Its potential across many fields is substantial and merits further research, and it may well enable new applications in virtual reality, augmented reality, and beyond.