The user experience of Anthropic's AI chatbot Claude has recently become the subject of heated discussion. Many users on Reddit reported that Claude's performance had declined, citing memory loss and weaker coding ability in particular. The episode has prompted wider debate about how the performance and user experience of large language models should be evaluated, and it again highlights how hard it is for AI companies to keep model behavior stable.

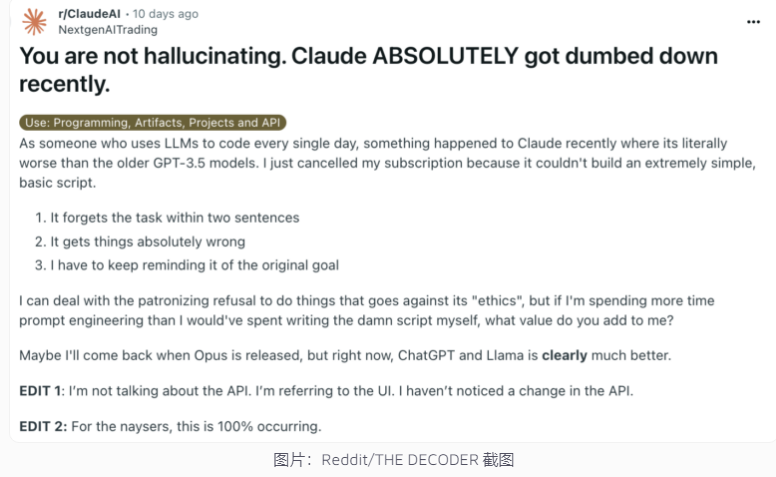

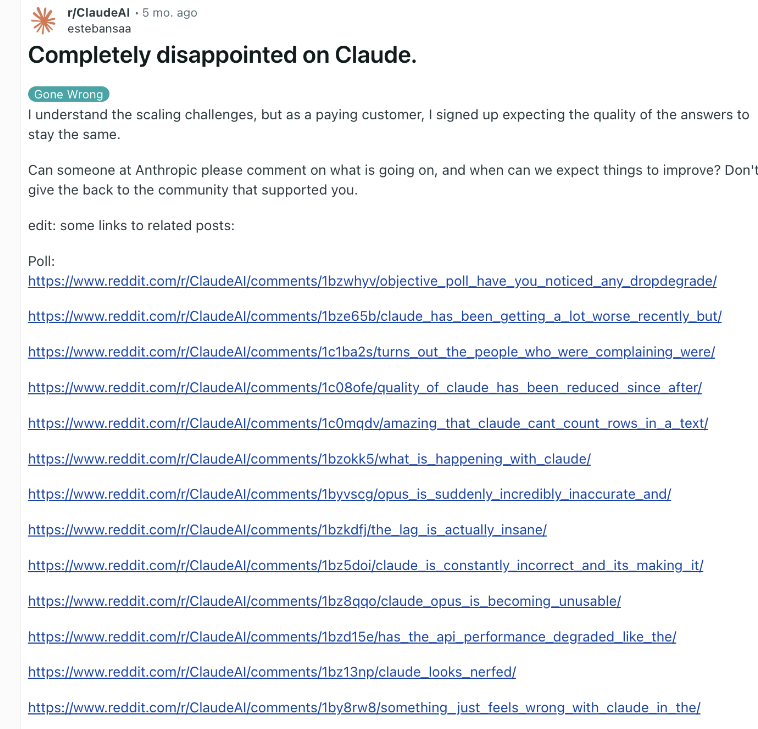

This is not the first performance controversy involving Claude. A Reddit post claiming that Claude had recently become much "stupider" attracted widespread attention, and many users replied that they had seen the same decline, including forgetting earlier context and producing worse code.

Anthropic executive Alex Albert responded that the company's investigation had found no widespread issues, and he confirmed that no changes had been made to the Claude 3.5 Sonnet model or its inference pipeline. To improve transparency, Anthropic has published the system prompts for its Claude models on its official website.

This is not the first time users have reported that an AI model has degraded while the company behind it denied any change. At the end of 2023, OpenAI's ChatGPT faced similar complaints. According to industry insiders, possible explanations include user expectations that rise over time, natural variation in AI output, and temporary limits on computing resources.

Factors like these can make performance feel worse to users even when the underlying model has not changed significantly. OpenAI has previously pointed out that AI behavior is inherently unpredictable, and that maintaining and evaluating the performance of generative AI at scale is a major challenge.

Anthropic said it will continue to monitor user feedback and work to make Claude's performance more stable. The incident underlines the difficulty AI companies face in keeping model behavior consistent, and the importance of greater transparency in how AI performance is evaluated and communicated.

The Claude controversy reflects how complex it is to develop and deploy large language models, and how wide the gap can be between user expectations and real-world behavior. AI companies will need to keep improving model stability and communicate more effectively with users if the technology is to serve them reliably.