Sapiens, the latest AI model released by Meta Reality Labs, marks a significant advance in human-centric vision tasks. It can accurately analyze human poses, movements, and fine body-part details in images and videos, and it maintains high accuracy even in complex environments or when labeled data is scarce. Sapiens is trained on a dataset of more than 300 million human images and combines a vision transformer architecture with multi-task learning, which gives it strong generalization and robustness. Potential applications span video surveillance, virtual reality, healthcare, and social media, where it is expected to change how human-computer interaction and data analysis are done.

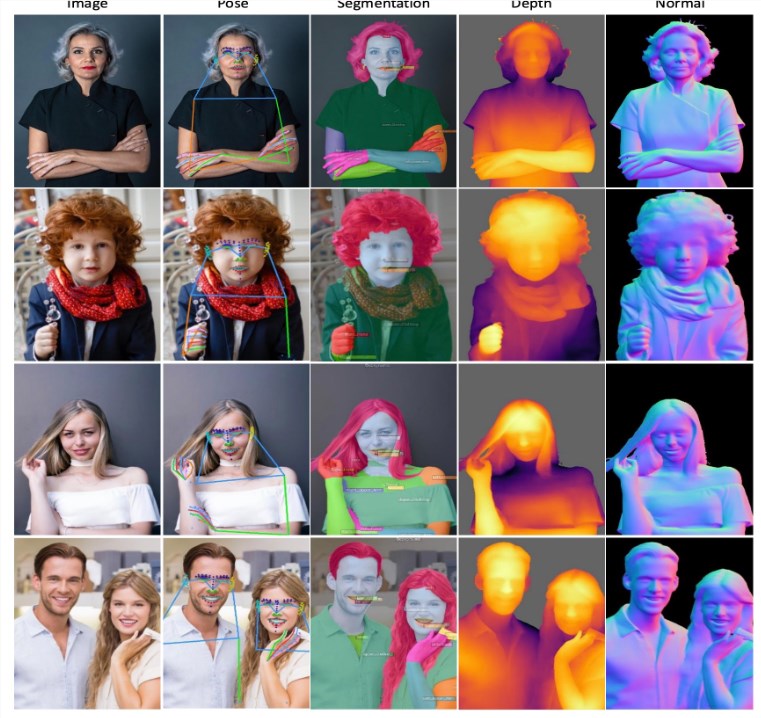

The core functions of the Sapiens model are 2D pose estimation, body part segmentation, depth estimation, and surface normal prediction. With these, Sapiens can recognize human poses, distinguish individual body parts, and predict per-pixel depth and surface orientation in images. It outperforms existing state-of-the-art methods on multiple tasks and shows high accuracy and consistency. This performance and its broad range of applications make it an important advance in artificial intelligence. Links to the project page and the paper are given at the end of this article.

From a technical perspective, Sapiens combines several methods. First, it is pre-trained on a large-scale dataset of 300 million images, which gives the model strong generalization capabilities. Second, it adopts a vision transformer architecture that can process high-resolution inputs and perform fine-grained reasoning. Third, through masked autoencoder pre-training and multi-task learning, Sapiens learns robust feature representations and handles multiple complex tasks with a shared backbone.
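To make the masked autoencoder idea concrete, here is a minimal sketch of its masking step in plain Python: the image is cut into a grid of patches, a random subset is hidden, and only the visible patches would be passed to the encoder while the model learns to reconstruct the rest. The 1024×1024 input size, 16-pixel patch size, 75% mask ratio, and the helper names `patch_grid` and `random_mask` are illustrative assumptions, not details taken from this article or from the Sapiens code.

```python
import random


def patch_grid(height, width, patch_size):
    """Return (rows, cols) of non-overlapping square patches covering the image."""
    if height % patch_size or width % patch_size:
        raise ValueError("image size must be divisible by patch size")
    return height // patch_size, width // patch_size


def random_mask(num_patches, mask_ratio, seed=None):
    """Randomly split patch indices into (visible, masked) sorted lists."""
    rng = random.Random(seed)
    indices = list(range(num_patches))
    rng.shuffle(indices)
    num_masked = int(num_patches * mask_ratio)
    masked = sorted(indices[:num_masked])
    visible = sorted(indices[num_masked:])
    return visible, masked


# Assumed values for illustration only.
rows, cols = patch_grid(1024, 1024, 16)
num_patches = rows * cols
visible, masked = random_mask(num_patches, mask_ratio=0.75, seed=0)

# The encoder would see only the visible patches; the reconstruction
# loss would be computed on the masked ones.
print(num_patches, len(visible), len(masked))  # 4096 1024 3072
```

With these assumed settings the encoder processes only a quarter of the patches per image, which is one reason this style of pre-training can scale to high-resolution inputs and very large unlabeled datasets.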

Sapiens has a broad range of potential applications. In video surveillance and virtual reality, it can analyze human movements and poses in real time, supporting motion capture and human-computer interaction. In healthcare, it can assist medical professionals with patient monitoring and rehabilitation guidance through precise pose and body-part analysis. On social media platforms, it can analyze user-uploaded images to enable richer interactive experiences. In virtual and augmented reality, it also helps create more realistic human representations and makes the experience more immersive.

Experimental results show that Sapiens outperforms existing state-of-the-art methods on multiple tasks. It achieves high accuracy and consistency in keypoint detection for the whole body, face, hands, and feet, as well as in body part segmentation, depth estimation, and surface normal prediction.

Project page: https://about.meta.com/realitylabs/codecavatars/sapiens

Paper: https://arxiv.org/pdf/2408.12569

All in all, the Sapiens model represents major progress in AI-based understanding of humans in images and video, and its strong performance and wide application potential open new possibilities for future technological innovation. We look forward to seeing Sapiens applied in more fields.