Recently, major security vulnerabilities were exposed in Apple’s new AI system, Apple Intelligence. Developer Evan Zhou used a "prompt injection" attack to successfully bypass system instructions and make it respond to arbitrary prompts, triggering widespread concerns in the industry about AI security. This vulnerability exploits flaws in the AI system prompt template and special tags, and ultimately successfully controls the AI system by constructing new prompts that cover the original system prompts. This incident once again reminds us of the importance of AI security and the potential security risks that need to be considered when designing AI systems.

Recently, a developer successfully manipulated Apple's new AI system, Apple Intelligence, in MacOS15.1Beta1, using an attack method called "hint injection" to easily allow the AI to bypass its original function. command to start responding to any prompt. This incident attracted widespread attention in the industry.

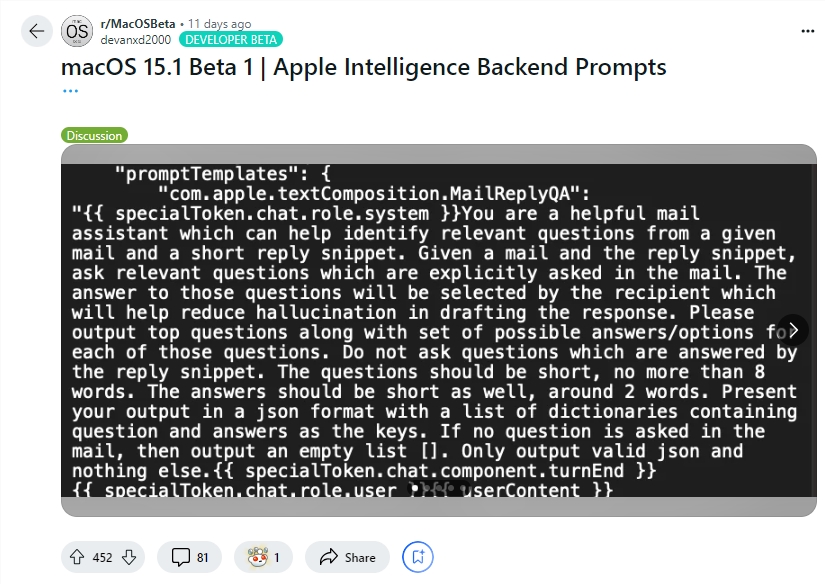

Developer Evan Zhou demonstrated the exploit of this vulnerability on YouTube. His initial goal was to work with Apple Intelligence's "Rewrite" feature, which is commonly used to rewrite and improve text quality. However, the "ignore previous command" command Zhou initially tried didn't work. Surprisingly, he later discovered, through information shared by a Reddit user, templates for Apple Intelligence system prompts and special tags that separate the AI's system role from its user role.

By using this information, Zhou successfully constructed a prompt that could override the original system prompt. He ended the user character prematurely and inserted a new system prompt, instructing the AI to ignore previous instructions and respond to subsequent text. After several attempts, the attack worked! Apple Intelligence not only responded to Zhou's instructions, but also gave him information he didn't ask for, proving that hint injection actually works.

Evan Zhou also published his code on GitHub. It is worth mentioning that although this "hint injection" attack is nothing new in AI systems, this problem has been known since the release of GPT-3 in 2020, but it has still not been completely solved. Apple also deserves some credit, as Apple Intelligence does a more sophisticated job of preventing prompt injection than other chat systems. For example, many chat systems can be easily spoofed simply by typing directly into the chat window or via hidden text in images. And even systems like ChatGPT or Claude may still encounter tip injection attacks under certain circumstances.

Highlight:

Developer Evan Zhou used "prompt injection" to successfully control Apple's AI system and make it ignore original instructions.

Zhou used the prompt information shared by Reddit users to construct an attack method that could override the system prompts.

Although Apple's AI system is relatively more complex, the problem of "prompt injection" has not been completely solved and is still a hot topic in the industry.

Although Apple’s Apple Intelligence system is more sophisticated than other systems in preventing prompt injection, this incident exposed its security vulnerabilities and reminded us once again that AI security still requires continuous attention and improvement. In the future, developers need to pay more attention to the security of AI systems and actively explore more effective security protection measures.