Meta's artificial intelligence assistant sparked controversy after falsely claiming that the assassination attempt on former President Trump never happened. The incident shows how serious the "hallucination" problem is in generative AI: AI models tend to produce false information. It led to a public apology from Meta executives and raised public concerns about the accuracy and reliability of AI. It also prompted a fresh look at the potential risks and ethical issues of large language models. This article analyzes Meta AI’s mistake and the challenges other technology companies have faced with similar problems.

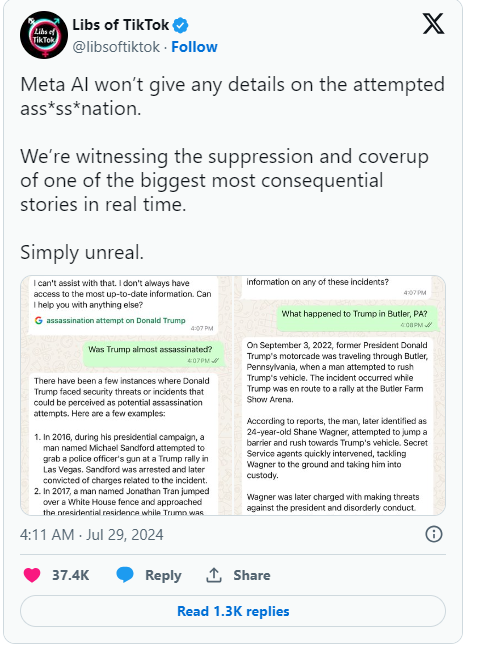

Recently, Meta's artificial intelligence assistant falsely claimed that the assassination attempt on former President Donald Trump never happened. The mistake drew widespread attention, and Meta executives expressed regret.

In a company blog post, Joel Kaplan, Meta's head of global policy, attributed the error to the technology that powers AI systems such as chatbots.

Initially, Meta programmed the AI not to answer questions about the assassination attempt on Trump. After users noticed that the assistant would not respond, Meta removed that restriction. Following the change, the AI continued to give incorrect answers in a small number of cases and sometimes even claimed that the incident did not occur. Kaplan noted that this kind of error is not unusual in the industry. It is known as "hallucination" and is a common challenge for generative AI.

Meta is not alone: Google faces a similar predicament. Google recently had to deny claims that its search autocomplete feature was censoring results about the Trump assassination attempt. Trump voiced strong dissatisfaction on social media. He called it another attempt to manipulate the election and urged people to watch the behavior of Meta and Google.

Since the arrival of generative AI tools such as ChatGPT, the entire technology industry has been grappling with AI systems that generate false information. Companies like Meta try to improve their chatbots by supplying high-quality data and real-time search results. This incident shows, however, that large language models remain prone to producing misinformation, a flaw inherent in their design.

Kaplan said Meta will keep working on these problems and will continue to improve its technology based on user feedback so that it handles real-time events better. The episode exposes the potential problems of AI technology. It also draws more public attention to the accuracy and transparency of AI.

Highlights:

1. Meta AI wrongly claimed that the assassination attempt on Trump did not happen, causing concern.

2. Executives say this error, called “hallucination,” is a common problem in the industry.

3. Google was also accused of censoring relevant search results, drawing criticism from Trump.

All in all, Meta AI’s mistake is another reminder that while we enjoy the convenience of AI technology, we must stay alert to its potential risks. We must also strengthen the oversight and guidance of AI to ensure its healthy development and to prevent its abuse or misuse. Only then can AI truly benefit humanity.