In software development, AI coding tools are driving a quiet revolution. Development teams use AI-assisted coding widely, yet company management lacks effective oversight of how these tools are used. This has triggered a "cat-and-mouse game" between developers and management over AI tools, along with the security risks that come with it.

In the technology world, this cat-and-mouse game between developers and management is already under way. At its center are AI coding tools that companies have explicitly banned but that developers continue to use quietly.

According to new global research from application security company Checkmarx, although 15% of companies explicitly ban AI coding tools, almost all development teams (99%) use them anyway. The finding highlights how difficult it is to control the use of generative AI.

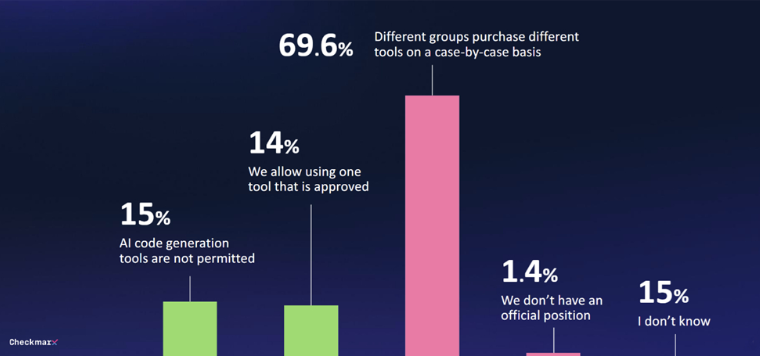

Only 29% of companies have any form of governance in place for generative AI tools. In 70% of cases there is no unified strategy, and individual departments make purchasing decisions ad hoc. This makes it difficult for management to control how AI coding tools are used.

As AI coding tools grow more popular, their security risks are becoming harder to ignore. 80% of respondents worry about the threats developers may introduce when using AI, and 60% specifically cite AI "hallucinations" as a concern.

Despite these concerns, interest in AI's potential remains strong. Nearly half of respondents (47%) are open to letting AI make unsupervised code changes, and only 6% said they would not trust AI security measures in software environments.

"The responses from these global CISOs reveal the reality that developers are using AI in application development even though they cannot reliably create secure code with it, which means security teams need to contend with a flood of new, vulnerable code," Tzruya said.

Microsoft's recent Work Trend Index report showed similar findings: many employees bring their own AI tools to work when none are provided, and they often do not disclose this use, which hinders the systematic integration of generative AI into business processes.

In short, although some companies explicitly prohibit AI coding tools, 99% of development teams still use AI to generate code. Only 29% of companies have established governance mechanisms for generative AI, and in 70% of cases individual departments make decisions about AI tools ad hoc. Security concerns are growing, yet 47% of respondents are open to letting AI make unsupervised code changes. Security teams are left to deal with large volumes of potentially vulnerable AI-generated code.

This "cat-and-mouse game" between developers and management continues, and it remains to be seen where AI coding tools go from here.

AI coding tools have become a clear trend, but the security risks they bring cannot be ignored. Companies need to establish sound governance mechanisms that balance the efficiency gains from AI against its potential security risks, so they can better adapt to this technological wave.