A recent research report from Google warns that generative artificial intelligence (GenAI) is polluting the Internet with false content. The report documents a steady stream of GenAI abuse cases, from manipulating people's likenesses to fabricating evidence, mostly aimed at influencing public opinion, committing fraud, or making a profit. Google's own AI products have also spread misinformation by giving absurd suggestions, which makes the warning more striking and prompts a harder look at the potential risks of GenAI.

Researchers at Google recently warned that generative artificial intelligence (GenAI) is ruining the Internet with fake content. The report is not only a warning but also an act of self-reflection.

Ironically, Google plays a dual role in this “war between truth and falsehood.” On the one hand, it is a major promoter of generative AI; on the other, it is itself a producer of disinformation. Google's AI Overviews feature produced absurd suggestions such as "put glue on pizza" and "eat rocks", and these erroneous answers eventually had to be removed manually.

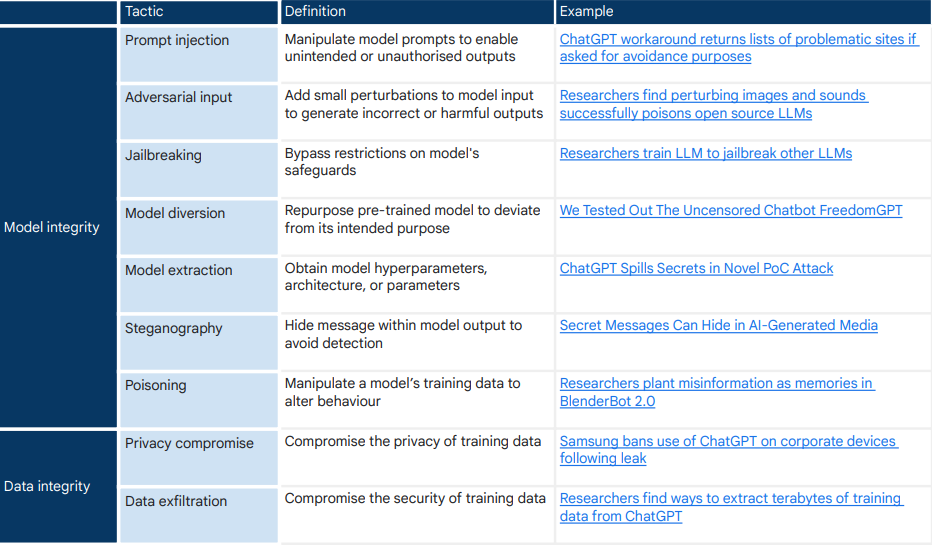

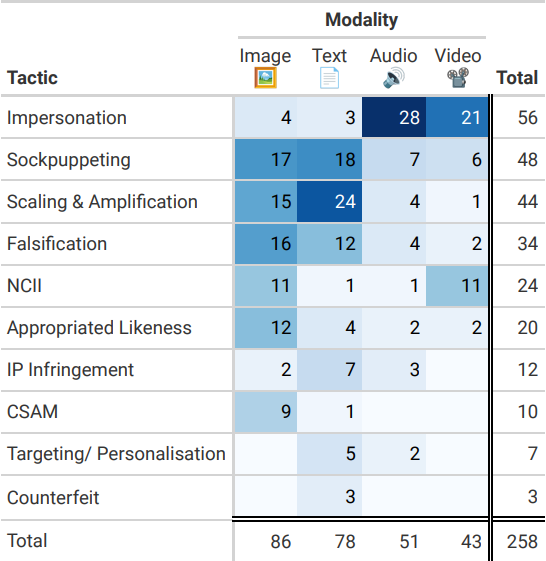

Google's research team examined 200 news reports about the abuse of generative AI and found that manipulating human likenesses and fabricating evidence were the most common tactics. The goals behind them were chiefly to influence public opinion, commit fraud, or make a profit. Although the risks of generative AI have not yet risen to the level of an "existential threat", they are already materializing and may get worse in the future.

The researchers found that most GenAI abuse cases involved ordinary use of the systems and no "jailbreaking" at all. These "routine operations" accounted for 90% of the cases. GenAI’s widespread availability, accessibility, and hyperrealism enable endless forms of low-level abuse. The cost of generating false information is simply too low!

Google's research material comes largely from media reports. Does this mean the conclusions inherit the media's biases? News outlets tend to favor sensational events, which may skew the data set toward specific types of abuse. 404 Media points out that a great deal of generative AI abuse goes unreported and is not yet known to us.

The guardrails on AI tools can be circumvented with a few tricks. For example, ElevenLabs’ AI voice cloning tool can imitate the voices of colleagues or celebrities with a high degree of fidelity. Civitai users can create AI-generated images of celebrities, and although the platform has a policy against NCII (non-consensual intimate imagery), nothing stops users from generating NCII with open source tools on their own machines.

As false information spreads, the resulting chaos on the Internet will severely test people's ability to tell truth from falsehood. We will be trapped in a constant state of doubt, asking “Is this real?” If left unaddressed, the contamination of public data by AI-generated content may also impede information retrieval and distort the collective understanding of sociopolitical reality or scientific consensus.

Google has itself contributed to the proliferation of false content brought about by generative AI. The bullet it fired years ago has finally hit it squarely between the eyes. Google’s research may be the beginning of its own redemption and a wake-up call to the entire Internet community.

Paper address: https://arxiv.org/pdf/2406.13843

This Google report sounds a clear warning about the future development of GenAI. Balancing technological progress against risk control, and responding effectively to the flood of false information that GenAI brings, are challenges shared by the global technology community and society at large. We look forward to more research and action to safeguard the authenticity and integrity of the Internet.