The latest open source RWKV-CLIP model of Green Deep Learning has demonstrated strong performance in the field of visual language representation learning with its innovative design integrating Transformer and RNN architecture. This model effectively solves the problem of noisy data and significantly improves the model's robustness and downstream task performance by cleverly combining a twin-tower architecture, spatial mixing and channel mixing modules, and a diverse description generation framework. It has made breakthrough progress in image-text matching and understanding, providing a new direction for the research and application of visual language models.

Gelingshentong has open sourced the RWKV-CLIP model, which is a visual language representation learner that combines the advantages of Transformer and RNN. The model significantly improves the performance of visual and language tasks by extending the dataset using image-text pairs obtained from websites through image and text pre-training tasks.

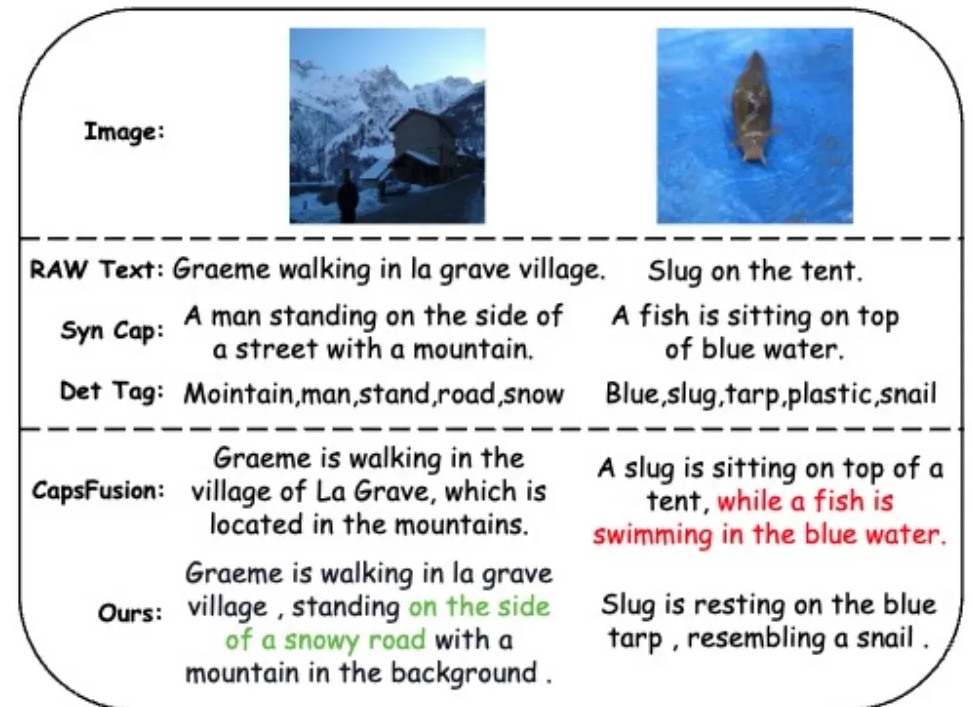

To address the noisy data problem and improve data quality, the research team introduced a diverse description generation framework that leverages large language models (LLMs) to synthesize and refine content from web-based text, synthesized subtitles, and detected tags.

The RWKV-CLIP model adopts a twin-tower architecture, integrating the effective parallel training of Transformer and the efficient inference of RNN. The model is stacked by multiple spatial blending and channel blending modules, which enable in-depth processing of input images and text. In the spatial mixing stage, the model uses the attention mechanism to perform global linear complexity calculation and strengthen the interaction of features at the channel level. The channel blending stage further refines the feature representation. In terms of input enhancement, the RWKV-CLIP model enhances the robustness of the model by randomly selecting original text, synthetic subtitles, or generated descriptions as text input.

Experimental results show that RWKV-CLIP achieves state-of-the-art performance in multiple downstream tasks, including linear detection, zero-shot classification, and zero-shot image text retrieval. Compared with the baseline model, RWKV-CLIP achieves significant performance improvements.

Cross-modal analysis of the RWKV-CLIP model shows that its learned representations exhibit clearer discriminability within the same modality and closer distances in the image-text modality space, indicating cross-modality Better alignment performance.

Model address: https://wisemodel.cn/models/deepglint/RWKV-CLIP

All in all, the RWKV-CLIP model shows great potential in the field of visual language, and its open source also provides valuable resources for related research. Interested developers can visit the provided link to download the model and conduct further research and application.