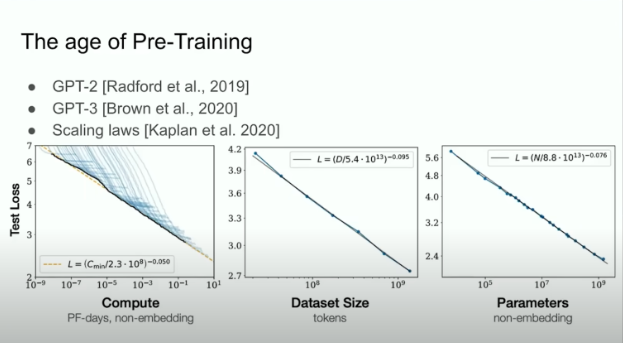

OpenAI co-founder Ilya Sutskever gave a speech at the NeurIPS 2024 conference, arguing that the era of AI pre-training is coming to an end and predicting the rise of AI superintelligence. He likens the data that existing AI models depend on to fossil fuels: a finite resource. The total amount of data can no longer grow fast enough to meet the needs of ever-larger models, so new methods must be explored to scale machine intelligence.

OpenAI co-founder Ilya Sutskever recently said that artificial intelligence researchers must find new ways to expand machine intelligence to overcome existing limitations.

Sutskever spoke at the 2024 Neural Information Processing Systems (NeurIPS) conference in Vancouver, Canada, where he argued that the era of AI pre-training is coming to an end and predicted the rise of AI superintelligence.

Sutskever believes that improvements in computing power, driven by better hardware, software and machine learning algorithms, are outpacing the amount of data available for training AI models. He likens data to fossil fuels that will one day be exhausted. Sutskever said:

“Data is not going to grow because we only have one internet. You could even say that data is the fossil fuel of AI. It was created in some way and now we use it, and we have reached peak data and there will be no more. We have to deal with the data we have.”

The OpenAI co-founder predicts that agentic AI, synthetic data and inference-time compute will be the next stage in the evolution of artificial intelligence, and will ultimately lead to AI superintelligence.

AI agents may upend existing models

AI agents go beyond current chatbot models in that they can make decisions without human intervention. With the rise of AI meme coins and agents built on large language models (LLMs), such as Truth Terminal, AI agents have become a hot topic in the cryptocurrency field.

Truth Terminal quickly became popular after it began promoting a meme coin called Goatseus Maximus (GOAT). The meme coin eventually reached a market capitalization of $1 billion, attracting the attention of retail investors and venture capitalists.

Google's AI lab, DeepMind, has released Gemini 2.0, an AI model that will power AI agents.

According to Google, agents built using the Gemini 2.0 framework will be able to assist with complex tasks such as coordinating between websites and logical reasoning.

Advances in AI agents that can act and reason independently will lay the foundation for AI to overcome data hallucinations.

AI hallucinations arise from inaccurate data sets. AI pre-training also increasingly relies on using older LLMs to train new ones, which degrades performance over time.

Data bottlenecks and the future of AI

Sutskever's point highlights a major challenge facing the development of AI: as AI models continue to scale up, so does their demand for data. In reality, however, the amount of available data is not growing as fast as that demand. This forces researchers to explore new methods to overcome the data bottleneck.

AI agents, synthetic data and inference-time compute may become new directions for the future development of AI. These technologies are expected to help AI models reduce their reliance on massive data sets and improve their reasoning and decision-making capabilities. The emergence of AI superintelligence could usher in a new era for the technology, one that may fundamentally change how we live and work.

However, the prospect of AI superintelligence has also raised concerns about AI ethics and safety. How to keep AI technology controllable and safe while enjoying the convenience it brings is a question that needs serious consideration.

All in all, Sutskever's speech pointed to a new path for the future development of artificial intelligence. It also served as a reminder to pay attention to the ethical and safety challenges posed by AI superintelligence, and of the need to move forward cautiously while actively exploring solutions.