Memes have evolved from static pictures into dynamic videos and have become an important part of online culture. Producing high-quality meme videos is not easy, however: existing methods tend to be inefficient and generalize poorly. This article introduces HelloMeme, a tool that can efficiently generate vivid, entertaining, high-fidelity meme videos and bring new fun to short-video creation.

Dear veteran web surfers, do you still remember the memes we chased in those years? From "The old man on the subway looking at his mobile phone" to "The Golden Curator's panda head", they not only made us laugh but also became a unique symbol of internet culture. Now that short videos are popular all over the world, memes are "advancing with the times" too, evolving from static pictures into dynamic videos that go viral on every major platform.

However, creating a high-quality meme video is not easy. First, memes are characterized by exaggerated expressions and large movements, which is a considerable challenge for video generation technology. Second, many existing methods fine-tune the parameters of the entire model, which is time-consuming and labor-intensive and can also reduce the model's ability to generalize, making it hard to stay compatible with derivative models.

So, is there a way to easily create meme videos that are lively, entertaining and high-fidelity all at once? The answer is: of course! HelloMeme is here to save you!

HelloMeme works like a "plug-in" for large models. It lets the model learn the "new skill" of making meme videos without changing the original model. Its secret weapon is an optimized attention mechanism for two-dimensional feature maps, which improves the performance of the adapter. Put simply, it gives the model a pair of "X-ray glasses" so that it can capture the details of expressions and movements more accurately.

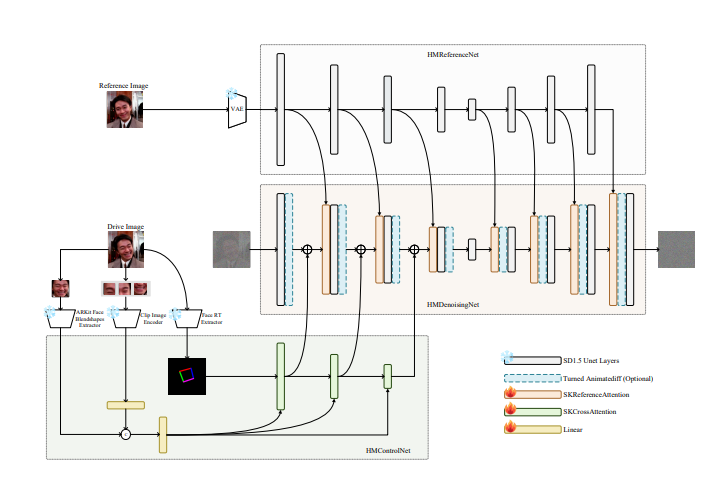

The way HelloMeme works is interesting too. It is made up of three teammates: HMReferenceNet, HMControlNet and HMDenoisingNet.

HMReferenceNet is like a master who has "seen countless pictures" and can extract high-fidelity features from the reference image. This is like handing the model a "Meme-Making Guide" so that it knows exactly what look the video has to preserve.

HMControlNet is the "motion capture master": it extracts head pose and facial expression information. This is like fitting the model with a "motion capture system" so that it can pick up every subtle change in expression.

HMDenoisingNet is the "video editor". It is responsible for combining the information provided by the other two teammates to generate the final meme video, like an experienced editor who blends all the raw material into a video that makes people laugh.
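To make the division of labor concrete, here is a minimal data-flow sketch of the three modules. It is not the project's code: the function names, the `DrivingSignal` type, the input formats and the stub bodies are all hypothetical stand-ins, and the sketch assumes the reference features are extracted once and reused for every frame.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class DrivingSignal:
    """Hypothetical container for what the control module extracts."""
    head_pose: List[float]    # assumed layout, e.g. yaw, pitch, roll
    expression: List[float]   # assumed layout, e.g. expression coefficients


def hm_reference_net(reference_image: List[float]) -> List[float]:
    # Stub: a real network would return high-fidelity appearance features.
    return [pixel / 255.0 for pixel in reference_image]


def hm_control_net(driving_frame: Dict[str, List[float]]) -> DrivingSignal:
    # Stub: a real network would estimate pose and expression from an image.
    return DrivingSignal(driving_frame["pose"], driving_frame["expr"])


def hm_denoising_net(ref_features: List[float], signal: DrivingSignal) -> dict:
    # Stub: a real network would denoise a latent conditioned on both inputs.
    return {
        "appearance": ref_features,
        "pose": signal.head_pose,
        "expression": signal.expression,
    }


def generate_video(reference_image, driving_frames):
    # Assumption of this sketch: appearance is extracted once, motion per frame.
    ref_features = hm_reference_net(reference_image)
    return [hm_denoising_net(ref_features, hm_control_net(f)) for f in driving_frames]


frames = generate_video(
    [0, 255],
    [
        {"pose": [0.0, 0.0, 0.0], "expr": [0.1]},
        {"pose": [0.1, 0.0, 0.0], "expr": [0.9]},
    ],
)
```

Each output frame carries the same appearance features but its own pose and expression, which is the split the three analogies describe: one module for "what it looks like", one for "how it moves", and one that merges the two.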

To help these three teammates work together better, HelloMeme also uses a trick called "spatial knitting attention". Like knitting a sweater, this mechanism interweaves different feature information while preserving the structural information in the two-dimensional feature map. As a result, the model does not have to relearn this basic knowledge and can focus on the "artistic creation" of meme making.
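The knitting analogy can be sketched in a few lines of plain Python. The toy below runs attention along every row of a small feature map and then along every column, so the 2D layout is never flattened away. It is a simplified illustration under several assumptions, not the HelloMeme implementation: queries, keys and values are the raw features (no learned projections), no condition features are fused in, and the names `self_attention` and `knit_attention` are made up for this example.

```python
import math


def softmax(scores):
    # Numerically stable softmax over a list of floats.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Scaled dot-product self-attention over a list of equal-length vectors.

    Toy version: each token serves as its own query, key and value.
    """
    dim = len(tokens[0])
    output = []
    for query in tokens:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in tokens
        ]
        weights = softmax(scores)
        output.append(
            [sum(w * value[i] for w, value in zip(weights, tokens)) for i in range(dim)]
        )
    return output


def knit_attention(fmap):
    """Attend along each row, then along each column of an H x W x C map."""
    height, width = len(fmap), len(fmap[0])
    rows_done = [self_attention(row) for row in fmap]       # horizontal pass
    result = [[None] * width for _ in range(height)]
    for x in range(width):                                   # vertical pass
        column = [rows_done[y][x] for y in range(height)]
        for y, vector in enumerate(self_attention(column)):
            result[y][x] = vector
    return result


# A 2 x 3 map with 2 channels: channel 0 stores the row index, channel 1 the column index.
fmap = [[[float(y), float(x)] for x in range(3)] for y in range(2)]
knitted = knit_attention(fmap)
```

The output has the same height, width and channel count as the input, and every position has mixed information from its own row and then from its own column. Because each pass only sees one row or one column at a time, the grid structure is kept explicit in the computation.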

The most powerful thing about HelloMeme is that it keeps the original parameters of the SD1.5 UNet model completely unchanged during training and only optimizes the parameters of the inserted adapter. **This is like giving the model a "patch" rather than performing "major surgery" on it.** The advantage is that the model keeps the powerful capabilities of the original while gaining new ones, killing two birds with one stone.
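The "patch, not surgery" idea can be shown with a deliberately tiny example. In the sketch below, a single number stands in for the frozen SD1.5 UNet weights and another single number stands in for the adapter; only the adapter is updated by gradient descent. The model, the data and all names are hypothetical and chosen purely for illustration.

```python
base_w = 2.0      # stands in for the pretrained weights; never updated
adapter_w = 0.0   # stands in for the inserted adapter; the only trained value


def predict(x, base_w, adapter_w):
    # The adapter adds a correction on top of the frozen base output.
    return base_w * x + adapter_w * x


# Hypothetical data for the "new skill": the target mapping is y = 3x.
data = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)]


def train_adapter(base_w, adapter_w, data, lr=0.05, steps=200):
    """Gradient descent on mean squared error, updating adapter_w only."""
    for _ in range(steps):
        grad = 0.0
        for x, y in data:
            error = predict(x, base_w, adapter_w) - y
            grad += 2.0 * error * x / len(data)   # d(MSE) / d(adapter_w)
        adapter_w -= lr * grad                    # base_w is left untouched
    return adapter_w


base_before = base_w
adapter_w = train_adapter(base_w, adapter_w, data)
```

After training, `base_w` is still 2.0 while `adapter_w` has moved close to 1.0, so the combined model follows the new target. Setting the adapter back to zero recovers the original behavior exactly, which is why other models built on the same base can stay compatible. In a framework such as PyTorch, the same effect is usually achieved by calling `requires_grad_(False)` on the base model's parameters and passing only the adapter's parameters to the optimizer.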

HelloMeme performs well on the task of generating meme videos. The videos it generates have vivid expressions, smooth movements and sharp picture quality that is comparable to professional production. More importantly, HelloMeme is also compatible with SD1.5 derivative models, which means we can draw on the strengths of those models to further improve the quality of meme videos.

Of course, HelloMeme still has plenty of room for improvement. For example, the videos it generates are slightly inferior to some GAN-based methods in frame-to-frame continuity, and its stylistic expressiveness also needs work. However, HelloMeme's research team has said that they will keep improving the model to make it more powerful and even more "goofy".

I believe that in the near future HelloMeme will become the best tool for making meme videos, letting us unleash our "goofy" imagination and rule the short-video era with memes!

Project address: https://songkey.github.io/hellomeme/

All in all, HelloMeme offers an efficient and convenient way to generate meme videos, and its innovative technique and strong results make it one to watch. As the technology continues to advance, I believe HelloMeme will become an indispensable tool for meme creation, letting more people easily create wonderful videos.