ByteDance's Doubao large model team has released a new benchmark for evaluating large code models: FullStack Bench. The benchmark covers 11 real-world application scenarios and 16 programming languages, with 3,374 questions in total. Compared with previous benchmarks, FullStack Bench provides a more comprehensive and more accurate assessment of the code development capabilities of large models. Its questions are drawn from Stack Overflow and cross-validated by both AI and human reviewers to ensure the reliability and breadth of the data. The team has also open-sourced SandboxFusion, a code sandbox tool that makes it easier for developers to test large models.

On December 5, ByteDance's Doubao large model team launched FullStack Bench, its latest benchmark for evaluating large code models. It covers more than 11 types of real-world scenarios, supports 16 programming languages, and contains 3,374 questions. Compared with previous benchmarks, it evaluates the code development capabilities of large models more accurately and across a wider range of programming domains, helping drive model improvements on real-world programming tasks.

Mainstream code evaluation benchmarks such as HumanEval and MBPP mostly focus on basic and advanced programming problems. DS-1000 focuses on data analysis and machine learning tasks and supports only Python. xCodeEval focuses on advanced programming and mathematics, and is significantly limited in both application scenarios and language coverage. FullStack Bench, by contrast, offers much broader data coverage, spanning more than 11 application domains and a more complex and diverse set of programming scenarios.

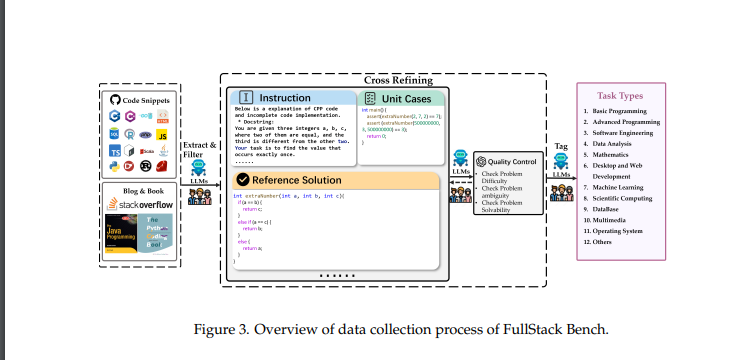

The FullStack Bench dataset is built from Stack Overflow, the world's largest programming Q&A platform. The research team analyzed 500,000 questions and selected the application domains that account for the top 88.1% of them, ensuring the breadth and robustness of the dataset. Each question includes a detailed problem description, a reference solution, and unit test cases, so that a model's answer can be checked by actually running it. The team also cross-checked data quality through both AI and manual review to further improve the reliability of the data.
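To make the unit-test-based evaluation concrete, here is a minimal Python sketch of how a question with a reference solution and unit tests might be scored. The `Problem` record, its field names, and the `passes_tests` and `pass_rate` helpers are hypothetical illustrations, not FullStack Bench's actual schema or SandboxFusion's API. Running code in a plain subprocess with a timeout is also an assumption made for brevity; it is not a real security sandbox.

```python
import subprocess
import sys
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Problem:
    # Hypothetical record layout; field names are illustrative only.
    description: str
    reference_solution: str
    unit_tests: str


def passes_tests(solution: str, tests: str, timeout: float = 5.0) -> bool:
    """Run solution + tests in a fresh Python process; exit code 0 means pass."""
    program = solution + "\n\n" + tests + "\n"
    try:
        result = subprocess.run(
            [sys.executable, "-c", program],
            capture_output=True,
            timeout=timeout,
        )
    except subprocess.TimeoutExpired:
        # Code that hangs (e.g. an infinite loop) counts as a failure.
        return False
    return result.returncode == 0


def pass_rate(problems: List[Problem], generate: Callable[[Problem], str]) -> float:
    """Fraction of problems whose generated solution passes all its unit tests."""
    if not problems:
        return 0.0
    passed = sum(passes_tests(generate(p), p.unit_tests) for p in problems)
    return passed / len(problems)


problems = [
    Problem(
        description="Return the sum of two integers.",
        reference_solution="def add(a, b):\n    return a + b",
        unit_tests="assert add(2, 3) == 5\nassert add(-1, 1) == 0",
    ),
]

# Sanity check: the reference solution should pass its own tests.
print(pass_rate(problems, lambda p: p.reference_solution))  # 1.0
# A buggy "model output" fails the assertions.
print(pass_rate(problems, lambda p: "def add(a, b):\n    return a - b"))  # 0.0
```

Checking the reference solution against its own tests, as in the first call, is one simple way to catch broken questions before they enter an evaluation set.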

To make the dataset easier to use, the Doubao team has also open-sourced SandboxFusion, a code sandbox tool for efficiently executing programming tasks in multiple languages. SandboxFusion is compatible with more than 10 widely used code evaluation datasets and supports 23 programming languages, so developers can easily test large models in different environments.

The Doubao team also presented its self-developed large code model, Doubao-Coder, for the first time, and evaluated the programming capabilities of more than 20 code models from around the world. ByteDance continues to advance in AI programming: MarsCode, powered by its self-developed code base model, contributes millions of pieces of code to users every month, underscoring the company's strong position in the field.

Open-source dataset: https://huggingface.co/datasets/ByteDance/FullStackBench

Open-source sandbox: https://github.com/bytedance/SandboxFusion

Paper: https://arxiv.org/pdf/2412.00535v2

The release of FullStack Bench and the open-sourcing of its related tools mark significant progress for ByteDance in AI coding and make an important contribution to the evaluation and development of large code models. Developers can use these resources to improve the performance of their own models and advance AI coding technology.