Visual language models (VLMs) have made significant progress in artificial intelligence, but they still struggle to process high-resolution images and diverse text inputs. Existing models often rely on static visual encoders, which are inefficient and whose accuracy varies across datasets. Training datasets that lack diversity and task specificity further limit performance, especially on specialized tasks such as chart interpretation.

With the rapid development of artificial intelligence, integrating visual and language capabilities has led to breakthroughs in visual language models (VLMs). These models process and understand visual and textual data together, and they are widely used for image captioning, visual question answering, optical character recognition, and multimodal content analysis.

VLMs have played an important role in developing autonomous systems, improving human-computer interaction, and building efficient document-processing tools, bridging the gap between the two data modalities. However, processing high-resolution visual data and diverse text inputs remains challenging.

Current research has partially addressed these limitations, but the static visual encoders used by most models adapt poorly to high resolutions and variable input sizes. Meanwhile, pairing pre-trained language models with visual encoders often introduces inefficiencies, because these components are not optimized for multimodal tasks. Some models use sparse computation to manage complexity, yet their accuracy still varies across datasets. In addition, the training datasets of existing models often lack diversity and task specificity, which further limits performance: many models, for example, perform poorly on specialized tasks such as chart interpretation or dense document analysis.

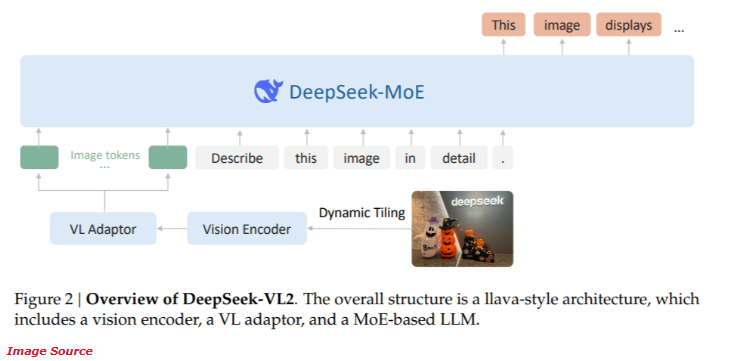

Recently, DeepSeek-AI released DeepSeek-VL2, a new series of open-source Mixture-of-Experts (MoE) visual language models. The series combines several recent techniques, including a dynamic slicing (tiling) strategy for visual encoding, Multi-head Latent Attention (MLA), and the DeepSeekMoE framework.
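To make "dynamic slicing" more concrete, the sketch below shows one way to choose a tile grid for an image of arbitrary size so that a fixed-resolution vision encoder can look at it in pieces. It is a minimal illustration, not DeepSeek-VL2's code: the 384-pixel tile size, the cap of nine tiles, the selection rule (keep as much of the original resolution as possible, then waste as little canvas as possible, a heuristic borrowed from LLaVA-NeXT-style pipelines), and the names `candidate_grids`, `choose_grid`, and `tile_boxes` are all assumptions.

```python
TILE = 384      # assumed tile side in pixels (illustrative)
MAX_TILES = 9   # assumed cap on the number of local tiles (illustrative)


def candidate_grids(max_tiles=MAX_TILES):
    """All (cols, rows) layouts whose tile count stays within max_tiles."""
    return [(c, r)
            for c in range(1, max_tiles + 1)
            for r in range(1, max_tiles + 1)
            if c * r <= max_tiles]


def choose_grid(width, height, tile=TILE, max_tiles=MAX_TILES):
    """Pick the grid that keeps the most original detail, then wastes the least canvas."""
    best, best_key = None, None
    for cols, rows in candidate_grids(max_tiles):
        canvas_w, canvas_h = cols * tile, rows * tile
        scale = min(canvas_w / width, canvas_h / height)   # aspect-preserving resize
        new_w, new_h = int(width * scale), int(height * scale)
        effective = min(new_w * new_h, width * height)     # real pixels retained
        wasted = canvas_w * canvas_h - effective           # padding or upsampled area
        key = (-effective, wasted)
        if best_key is None or key < best_key:
            best, best_key = (cols, rows), key
    return best


def tile_boxes(cols, rows, tile=TILE):
    """(left, top, right, bottom) crop boxes on the resized canvas, row by row."""
    return [(c * tile, r * tile, (c + 1) * tile, (r + 1) * tile)
            for r in range(rows) for c in range(cols)]


grid = choose_grid(1000, 500)
print(grid, len(tile_boxes(*grid)), "local tiles")  # (3, 2) 6 local tiles
```

Under these assumptions, a wide 1000×500 image maps to a 3×2 grid, so it is covered by six full-resolution tiles instead of being squeezed into one low-resolution view; pipelines of this kind typically also encode one downscaled global view of the whole image alongside the tiles.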

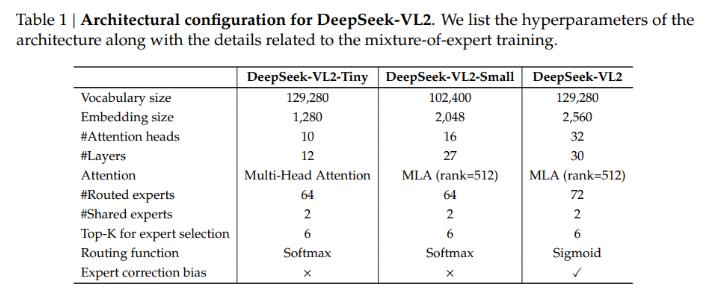

The DeepSeek-VL2 series comes in three parameter configurations:

- DeepSeek-VL2-Tiny: 3.37 billion parameters (1.0 billion activated parameters)

- DeepSeek-VL2-Small: 16.1 billion parameters (2.8 billion activated parameters)

- DeepSeek-VL2: 27.5 billion parameters (4.5 billion activated parameters)
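Because an MoE model runs only a subset of its experts for each token, the activated parameter count is a small fraction of the total. The short calculation below uses only the figures in the list above; `activated_fraction` is a hypothetical helper name.

```python
# Total and activated parameter counts in billions, as listed in the article.
CONFIGS = {
    "DeepSeek-VL2-Tiny": (3.37, 1.0),
    "DeepSeek-VL2-Small": (16.1, 2.8),
    "DeepSeek-VL2": (27.5, 4.5),
}


def activated_fraction(total_b: float, active_b: float) -> float:
    """Share of all parameters that are used when processing one token."""
    return active_b / total_b


for name, (total, active) in CONFIGS.items():
    # Prints roughly 29.7%, 17.4% and 16.4% for the three configurations.
    print(f"{name}: {activated_fraction(total, active):.1%} of parameters activated")
```

The larger configurations activate a smaller share of their weights, which is how an MoE model can grow its total capacity while keeping per-token compute closer to that of a much smaller dense model.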

This range of sizes lets users match the model to their application needs and computing budgets.
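The gap between total and activated parameters comes from expert routing. The sketch below illustrates the general idea with a generic top-k softmax router plus an always-on shared expert (DeepSeekMoE combines shared experts with fine-grained routed experts). It is not DeepSeek-VL2's actual gating function: the sizes, random weights, and names such as `moe_forward` and `router_w` are hypothetical, and each expert is reduced to a single matrix, whereas real experts are small feed-forward networks.

```python
import numpy as np

rng = np.random.default_rng(0)
D, N_ROUTED, N_SHARED, TOP_K = 16, 8, 1, 2  # hypothetical toy sizes

# Each "expert" is one linear map here; real experts are small FFNs.
routed = [rng.standard_normal((D, D)) * 0.1 for _ in range(N_ROUTED)]
shared = [rng.standard_normal((D, D)) * 0.1 for _ in range(N_SHARED)]
router_w = rng.standard_normal((D, N_ROUTED)) * 0.1  # token -> expert affinities


def softmax(z):
    z = z - z.max()  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum()


def moe_forward(x):
    """Send one token vector x (shape [D]) through shared + top-k routed experts."""
    scores = softmax(x @ router_w)           # affinity to each routed expert
    top = np.argsort(scores)[-TOP_K:]        # indices of the k highest scores
    out = sum(x @ w for w in shared)         # shared experts always run
    for i in top:
        out = out + scores[i] * (x @ routed[i])  # only k routed experts run
    return out, top


token = rng.standard_normal(D)
out, chosen = moe_forward(token)
print("experts used:", sorted(chosen.tolist()), "output shape:", out.shape)
```

With `TOP_K = 2` of 8 routed experts, each token touches only a quarter of the routed weights; the same principle, at much larger scale, is why a DeepSeek-VL2 model activates only part of its parameters per token.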

DeepSeek-VL2's architecture is designed to maximize performance while reducing computational requirements. Dynamic slicing lets the model process high-resolution images without losing critical details, which makes it well suited to document analysis and visual grounding. Multi-head latent attention compresses the attention key-value cache into compact latent vectors, so the model can handle long text inputs with less of the memory and compute overhead usually associated with dense language input. Because its training covers diverse multimodal datasets, DeepSeek-VL2 performs well on tasks such as optical character recognition, visual question answering, and chart interpretation.
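The memory saving behind multi-head latent attention can be sketched in a few lines of NumPy. In this simplified, hypothetical version, each token's hidden state is projected down to a small latent vector, only that latent is cached, and per-head keys and values are re-expanded from it at attention time. Real MLA also compresses queries, adds a separate rotary-position-embedding component to the keys, and can fold the up-projections into other weight matrices at inference time; all of that is omitted here, and the dimensions and weight names (`W_dkv`, `W_uk`, `W_uv`, `W_q`, `decode_step`) are assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
D_MODEL, N_HEADS, D_HEAD, D_LATENT = 64, 4, 16, 8  # hypothetical toy sizes

# Hypothetical projection weights (random here; learned in a real model).
W_dkv = rng.standard_normal((D_MODEL, D_LATENT)) * 0.1          # hidden -> latent
W_uk = rng.standard_normal((D_LATENT, N_HEADS * D_HEAD)) * 0.1  # latent -> keys
W_uv = rng.standard_normal((D_LATENT, N_HEADS * D_HEAD)) * 0.1  # latent -> values
W_q = rng.standard_normal((D_MODEL, N_HEADS * D_HEAD)) * 0.1    # hidden -> queries


def decode_step(h_new, latent_cache):
    """Attend from one new token over all cached tokens.

    h_new: [D_MODEL] hidden state; latent_cache: [T, D_LATENT].
    Returns the attention output [N_HEADS * D_HEAD] and the grown cache.
    """
    c_new = h_new @ W_dkv                            # compress the new token
    latent_cache = np.vstack([latent_cache, c_new])  # only latents are cached
    t = latent_cache.shape[0]
    k = (latent_cache @ W_uk).reshape(t, N_HEADS, D_HEAD)  # re-expand keys
    v = (latent_cache @ W_uv).reshape(t, N_HEADS, D_HEAD)  # re-expand values
    q = (h_new @ W_q).reshape(N_HEADS, D_HEAD)
    scores = np.einsum("hd,thd->ht", q, k) / np.sqrt(D_HEAD)
    scores -= scores.max(axis=1, keepdims=True)      # numerically stable softmax
    weights = np.exp(scores)
    weights /= weights.sum(axis=1, keepdims=True)
    out = np.einsum("ht,thd->hd", weights, v).reshape(-1)
    return out, latent_cache


cache = np.zeros((0, D_LATENT))
for _ in range(5):
    out, cache = decode_step(rng.standard_normal(D_MODEL), cache)

print("cache shape:", cache.shape)                            # (5, 8)
print("standard KV floats per token:", 2 * N_HEADS * D_HEAD)  # 128
```

In this toy setting each cached token costs `D_LATENT = 8` numbers instead of the `2 * N_HEADS * D_HEAD = 128` that a standard multi-head attention cache would hold for keys and values, a 16x reduction; the real ratio depends on the model's dimensions.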

According to the reported performance tests, the Small configuration reached 92.3% accuracy on optical character recognition, significantly surpassing existing models. On visual grounding benchmarks, the model improved accuracy by 15% over the previous generation.

DeepSeek-VL2 also reduces computing resource requirements by 30% while maintaining state-of-the-art accuracy. These results highlight the model's strength in high-resolution image and text processing.

Project page: https://huggingface.co/collections/deepseek-ai/deepseek-vl2-675c22accc456d3beb4613ab

Highlights:

- The DeepSeek-VL2 series offers several parameter configurations to suit different application needs.

- Dynamic slicing makes high-resolution image processing more efficient and suits complex document analysis.

- The models perform well on optical character recognition and visual grounding, with significantly improved accuracy.

With their innovative architecture and strong performance, the DeepSeek-VL2 models mark a new step forward for visual language models. Their strengths in high-resolution image and complex text processing show great potential across many application scenarios and merit further attention and research.