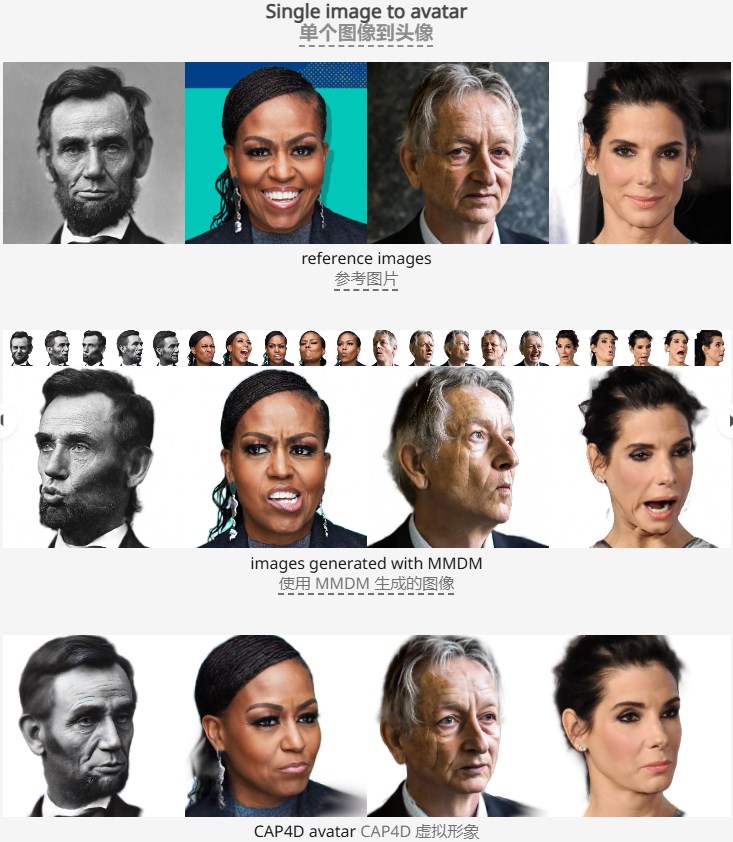

A research team from the University of Toronto and the Vector Institute recently released CAP4D, a model for generating 4D avatars. It is built on a morphable multi-view diffusion model (MMDM) and can generate realistic 4D avatars, controllable in real time, from any number of reference images, with better reconstruction quality and finer detail. It works with a single reference image or a handful of them, and can even generate avatars from text prompts or artwork. It can also be combined with existing image editing models and speech-driven animation models for richer interaction and dynamic effects, opening up new possibilities for virtual avatar applications.

The model takes a two-stage approach. First, MMDM generates images of the subject from different viewpoints and with different expressions. These generated images are then combined with the reference images to reconstruct a 4D avatar that can be controlled in real time. Users can supply any number of reference images, and the resulting avatar can be paired with a speech-driven animation model to produce audio-driven animation. CAP4D marks notable progress in 4D avatar generation, with potential applications in virtual reality, games, and the metaverse.
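The data flow of the two stages can be sketched in a few lines of Python. This is only an illustrative toy, not the CAP4D code or API: `Image`, `Avatar4D`, `generate_views`, `reconstruct_avatar`, and `build_avatar` are hypothetical names, and the diffusion model and the reconstruction step are replaced by trivial stand-ins so that the structure is visible.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Image:
    """Hypothetical placeholder for an image plus the labels we care about."""
    source: str      # "reference" or "generated"
    view: str        # e.g. "front", "left"
    expression: str  # e.g. "neutral", "smile"


@dataclass
class Avatar4D:
    """Hypothetical placeholder for the reconstructed, controllable avatar."""
    training_images: List[Image]

    def render(self, view: str, expression: str) -> str:
        # A real avatar would return pixels; the stand-in returns a label.
        return f"frame(view={view}, expression={expression})"


def generate_views(references: List[Image], views: List[str],
                   expressions: List[str]) -> List[Image]:
    """Stage 1 stand-in: one generated image per (view, expression) pair.

    In the article this role is played by MMDM, conditioned on the
    reference images; here we only record what would be generated.
    """
    if not references:
        raise ValueError("at least one reference image is required")
    return [Image("generated", v, e) for v in views for e in expressions]


def reconstruct_avatar(references: List[Image],
                       generated: List[Image]) -> Avatar4D:
    """Stage 2 stand-in: combine reference and generated images."""
    return Avatar4D(training_images=list(references) + list(generated))


def build_avatar(references: List[Image], views: List[str],
                 expressions: List[str]) -> Avatar4D:
    generated = generate_views(references, views, expressions)
    return reconstruct_avatar(references, generated)
```

With one reference image, two views, and two expressions, `build_avatar` hands five images to the reconstruction stage (one reference plus four generated), which mirrors the way the second stage in the article draws on both sources.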

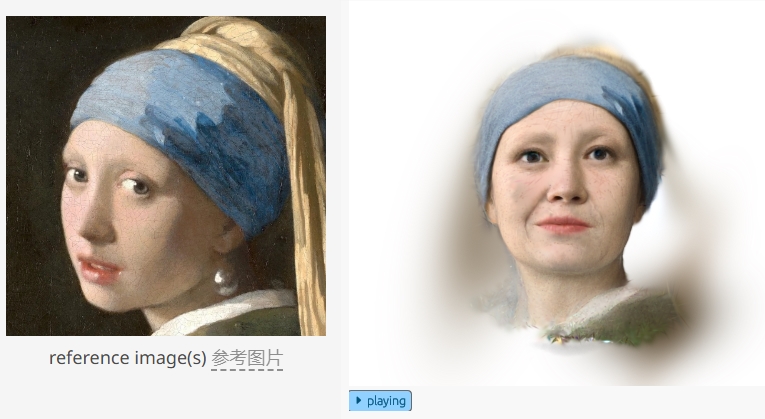

The research team demonstrated a range of avatars generated by CAP4D, covering a single reference image, a small number of reference images, and the more challenging case of generating avatars from text prompts or artwork. With multiple reference images, the model can recover details and geometry that are not visible in any single image, which improves the reconstruction. CAP4D can also be combined with existing image editing models, allowing users to edit the appearance and lighting of the generated avatar.

To make the avatars more expressive, CAP4D can pair the generated 4D avatar with a speech-driven animation model to produce audio-driven animation. The avatar is then not limited to static visuals: it can be animated by sound and interact with users dynamically, opening up new applications for virtual avatars.

Highlights:

- CAP4D generates high-quality 4D avatars from any number of reference images using a two-stage workflow.
- MMDM generates images of the subject from many different viewpoints and with different expressions, which improves reconstruction quality and detail.
- Combined with a speech-driven animation model, CAP4D produces audio-driven animated avatars, broadening the range of virtual avatar applications.

All in all, CAP4D is a significant step forward in 4D avatar generation, and its efficiency, realism, and versatility bring new possibilities to fields such as virtual reality and digital entertainment. The technology is expected to develop further and give users more convenient and realistic virtual interaction.