In recent years, AI virtual avatar technology has developed rapidly, but limited interactivity remains a key bottleneck for its applications. Many AI virtual avatars behave stiffly in conversation, lack realism, and cannot interact naturally with users. A new technology called INFP aims to address this problem in two-person conversations, letting virtual characters express emotions and movements as naturally and smoothly as real people while they talk, which could fundamentally change the human-computer interaction experience.

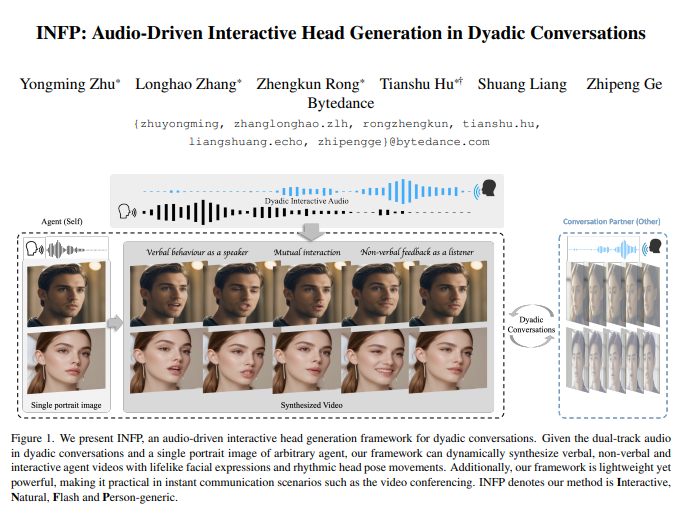

INFP (Interactive, Natural, Flash and Person-generic) has recently attracted wide attention. It targets the limited interactivity of current AI avatars in two-person conversations: the avatar behaves like a real participant, dynamically adjusting its expressions and movements based on the content of the conversation.

Say goodbye to the "solo act" and welcome the "duet"

In the past, AI avatars could only talk to themselves, like a stand-up comedian performing a monologue, or they could only listen blankly without giving any feedback, like a "wooden person." But that is not how humans converse! When we talk, we look at each other, nod, frown, and crack the occasional joke. That is real interaction!

INFP sets out to change this awkward situation completely! Like the conductor of a duet, it dynamically adjusts the AI avatar's expressions and movements based on the conversation audio between you and the AI, so it feels just like talking to a real person!

INFP's "secret weapons": two techniques, each indispensable!

INFP owes its power mainly to two key techniques:

Motion-Based Head Imitation:

First, like a master mimic, it learns human expressions and head movements from a large number of real conversation videos and compresses these complex behaviors into compact "motion codes".

To make the movements more realistic, it pays special attention to the eyes and mouth, the two most expressive regions of the face, as if giving them a close-up.

It also uses facial keypoints to guide expression generation, keeping the movements accurate and natural.

Then it applies these motion codes to a static portrait, bringing the avatar to life instantly, just like magic!
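The compress-then-apply idea above can be illustrated with a deliberately simple stand-in. INFP learns its motion encoder from video; here, purely as an assumption for illustration, a frame's facial keypoints are reduced to displacements from a neutral face and projected onto a tiny hand-made basis (one "eyes" mode and one "mouth" mode, echoing the emphasis on eyes and mouth). The functions `encode_motion` and `apply_motion`, the basis, and the three-keypoint face are hypothetical, not INFP's code.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def encode_motion(frame: Sequence[Point], neutral: Sequence[Point],
                  basis: Sequence[Sequence[float]]) -> List[float]:
    """Compress one frame of facial keypoints into a short motion code.

    Hypothetical linear stand-in for a learned motion encoder.
    """
    # Displacement of every keypoint from the subject's own neutral face,
    # flattened as [dx0, dy0, dx1, dy1, ...] so the subject's identity is factored out.
    disp = [c for (x, y), (nx, ny) in zip(frame, neutral) for c in (x - nx, y - ny)]
    # Project onto each (assumed orthonormal) basis vector: one number per motion mode.
    return [sum(d * b for d, b in zip(disp, vec)) for vec in basis]


def apply_motion(code: Sequence[float], avatar_neutral: Sequence[Point],
                 basis: Sequence[Sequence[float]]) -> List[Point]:
    """Decode a motion code and add it to a different, static avatar."""
    disp = [0.0] * (2 * len(avatar_neutral))
    for coeff, vec in zip(code, basis):
        for i, b in enumerate(vec):
            disp[i] += coeff * b
    return [(x + disp[2 * i], y + disp[2 * i + 1])
            for i, (x, y) in enumerate(avatar_neutral)]


# Hypothetical 3-keypoint face: left eye, right eye, mouth.
# Mode 0 moves both eyes together in y; mode 1 moves the mouth in y.
R = 1 / math.sqrt(2)
BASIS = [[0, R, 0, R, 0, 0],
         [0, 0, 0, 0, 0, 1]]

if __name__ == "__main__":
    source_neutral = [(0.0, 0.0), (1.0, 0.0), (0.5, -1.0)]
    source_frame = [(0.0, 0.1), (1.0, 0.1), (0.5, -1.3)]
    code = encode_motion(source_frame, source_neutral, BASIS)
    avatar = [(10.0, 10.0), (12.0, 10.0), (11.0, 8.0)]
    print(apply_motion(code, avatar, BASIS))
```

Running it shifts the new avatar's eyes by about +0.1 and its mouth by about -0.3 in y, the same motion as the source face. Because only displacements are stored, the motion transfers to a face with different proportions; a learned encoder pursues the same separation of identity and motion with far richer features.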

Audio-Guided Motion Generation:

This "generator" is even more powerful. It understands the conversation audio between you and the AI, like a martial-arts master who can pinpoint a position by sound alone.

It works out from the audio who is speaking and who is listening, then adjusts the avatar's state accordingly, so the avatar switches freely between "speaking" and "listening" without any manual role switching.
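The article does not explain exactly how INFP tells speaking from listening, and INFP is described as handling this without explicit role switching. Purely as a rough illustration, the sketch below swaps in a simple energy heuristic: given two already-separated audio tracks (the avatar's side and the partner's side), it labels each window "speaking" or "listening". The function `label_roles`, the window size, and the 0.01 threshold are hypothetical choices, not INFP's method.

```python
import math
from typing import List, Sequence


def rms(samples: Sequence[float]) -> float:
    """Root-mean-square energy of one window of audio samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples)) if samples else 0.0


def label_roles(avatar_track: Sequence[float], partner_track: Sequence[float],
                window: int, threshold: float = 0.01) -> List[str]:
    """Label each window 'speaking' or 'listening' (hypothetical energy heuristic)."""
    labels = []
    n = min(len(avatar_track), len(partner_track))
    for start in range(0, n, window):
        end = min(start + window, n)
        own = rms(avatar_track[start:end])
        other = rms(partner_track[start:end])
        # The avatar "speaks" when its own track is active and at least as loud
        # as the partner's; otherwise it is treated as listening.
        labels.append("speaking" if own >= threshold and own >= other else "listening")
    return labels


if __name__ == "__main__":
    avatar = [0.5] * 4 + [0.0] * 4    # avatar talks, then goes quiet
    partner = [0.0] * 4 + [0.3] * 4   # partner is quiet, then talks
    print(label_roles(avatar, partner, window=4))
```

A learned model can pick up cues this rule misses, such as backchannel sounds or overlapping speech, which is one reason a fixed threshold is only a toy here.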

It also has two "memory banks", one storing motions for speaking and one for listening; like two treasure chests, they let it pull out the most suitable motion at any moment.

It can also adjust the mood and attitude of the AI avatar according to your voice style, making the conversation more lively and interesting.

Finally, it uses a diffusion model to turn these motions into smooth, natural animation without noticeable lag.
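For readers curious what a diffusion model does, the sketch below shows the standard DDPM recipe on a plain vector of motion values: a noise schedule, the closed-form forward (noising) step, and ancestral sampling that removes noise step by step. It is generic, not INFP's model: the linear schedule, the step count, and the `denoiser` callable (which in a real system would be a trained network, presumably conditioned on audio and other signals) are all assumptions.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple

# Hypothetical denoiser signature: (noisy motion x_t, step t) -> predicted noise.
Denoiser = Callable[[List[float], int], List[float]]


def linear_betas(steps: int, start: float = 1e-4, end: float = 0.02) -> List[float]:
    """Linearly increasing noise variances beta_0..beta_{T-1}."""
    if steps < 2:
        raise ValueError("need at least two diffusion steps")
    return [start + (end - start) * i / (steps - 1) for i in range(steps)]


def alpha_bars(betas: Sequence[float]) -> List[float]:
    """Cumulative products abar_t = prod_{s<=t} (1 - beta_s)."""
    out, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out


def add_noise(x0: Sequence[float], t: int, abar: Sequence[float],
              rng: random.Random) -> Tuple[List[float], List[float]]:
    """Forward process in closed form: x_t = sqrt(abar_t) x0 + sqrt(1 - abar_t) eps."""
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    a = abar[t]
    xt = [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x0, eps)]
    return xt, eps


def estimate_x0(xt: Sequence[float], eps_hat: Sequence[float], t: int,
                abar: Sequence[float]) -> List[float]:
    """Invert the forward formula given a noise estimate."""
    a = abar[t]
    return [(x - math.sqrt(1.0 - a) * e) / math.sqrt(a) for x, e in zip(xt, eps_hat)]


def sample(denoiser: Denoiser, dim: int, betas: Sequence[float],
           rng: random.Random) -> List[float]:
    """DDPM ancestral sampling: start from pure noise and denoise step by step."""
    abar = alpha_bars(betas)
    x = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    for t in reversed(range(len(betas))):
        eps_hat = denoiser(x, t)
        coef = betas[t] / math.sqrt(1.0 - abar[t])
        # Posterior mean: (x_t - beta_t / sqrt(1 - abar_t) * eps_hat) / sqrt(1 - beta_t)
        mean = [(xi - coef * ei) / math.sqrt(1.0 - betas[t]) for xi, ei in zip(x, eps_hat)]
        if t > 0:
            # Add fresh noise with variance beta_t on every step except the last.
            x = [m + math.sqrt(betas[t]) * rng.gauss(0.0, 1.0) for m in mean]
        else:
            x = mean
    return x
```

Passing the true noise to `estimate_x0` recovers the clean motion exactly, which makes a handy sanity check; in practice the denoiser only approximates the noise, and the quality of the animation depends on how well it was trained.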

DyConv: a huge conversation dataset full of "chatter"!

To train this "super AI", the researchers also collected a very large conversation dataset called DyConv!

The dataset contains more than 200 hours of conversation videos. The people in it come from all over the world, and the topics are just as diverse. It is a veritable treasure trove of chatter.

The video quality of the DyConv dataset is very high, ensuring that everyone’s face is clearly visible.

The researchers also used a state-of-the-art speech separation model to extract each speaker's voice into its own track, making it easier for the AI to learn.

INFP's many talents: it can do more than just talk

INFP not only excels in two-person conversations but also shines in other scenarios:

"Listening Head Generation" mode: it produces fitting expressions and movements in response to what the other party is saying, like an attentive student listening carefully.

"Talking Head Generation" mode: it generates realistic mouth shapes from audio, like a skilled lip-sync performer.

To demonstrate INFP's capabilities, the researchers ran extensive experiments, and the results showed:

INFP clearly outperformed comparable methods across metrics such as video quality, lip synchronization, and motion diversity.

In the user study, participants also agreed that the videos generated by INFP were more natural and vivid and matched the audio better.

The researchers also conducted ablation studies showing that every module in INFP is essential.

Project address: https://grisoon.github.io/INFP/

INFP's breakthrough brings revolutionary changes to the interactive experience of AI virtual avatars, bringing it much closer to the way real people interact. In the future, INFP is expected to be widely used in fields such as virtual assistants, online education, and entertainment, giving users a more natural, vivid, and immersive interactive experience.