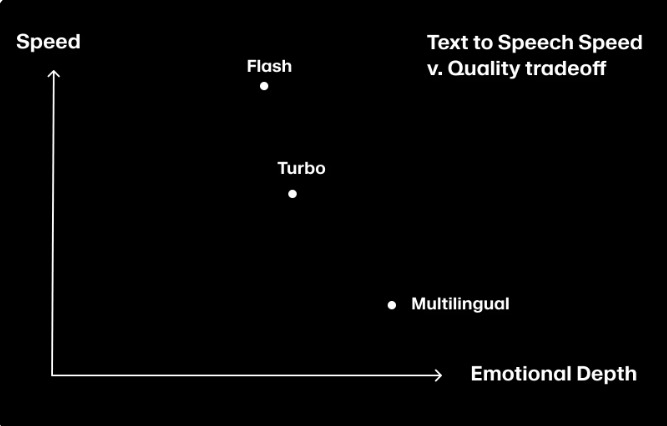

ElevenLabs has released Flash, a new speech synthesis model that the company describes as the fastest text-to-speech (TTS) solution currently available, with a latency of only 75 milliseconds. That makes it particularly well suited to conversational AI applications that require real-time interaction, where shorter delays make human-computer dialogue smoother and more natural. The model comes in two versions: Flash v2 (English only) and Flash v2.5 (32 languages). Both are available through ElevenLabs’ conversational AI platform and its API. Flash is slightly inferior to the Turbo model in sound quality and emotional expression, but it is clearly ahead in speed and came out on top in blind tests.

Both versions, Flash v2 (English only) and Flash v2.5 (32 languages), cost 1 credit for every two characters generated. The trade-off is in sound quality and emotional depth, where Flash falls slightly behind the Turbo model. In return it offers much lower latency, and in blind tests it outperformed the other models in its class.
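As a rough illustration of the rate quoted above (1 credit, or point, for every two characters), the sketch below estimates the cost of synthesizing a piece of text. The function name `estimate_flash_credits` is hypothetical, and rounding odd character counts up is an assumption; the article does not say how partial pairs are billed.

```python
import math

# Rate stated in the article: 1 credit per two generated characters.
CHARS_PER_CREDIT = 2


def estimate_flash_credits(text: str) -> int:
    """Estimate the credits needed to synthesize `text` with a Flash model.

    Assumption: an odd trailing character is rounded up to a full credit.
    """
    return math.ceil(len(text) / CHARS_PER_CREDIT)


if __name__ == "__main__":
    reply = "Hello, how can I help you today?"
    print(len(reply), "characters ->", estimate_flash_credits(reply), "credits")
```

An estimate like this can help size a usage budget for a voice assistant before any audio is generated, but actual billing should be checked against the account's usage data.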

ElevenLabs’ technical team says the Flash models will make human-computer interaction considerably smoother and more natural. Developers can call them through the API using the model IDs "eleven_flash_v2" and "eleven_flash_v2_5"; the API reference is available on the official ElevenLabs website. With this release, ElevenLabs hopes to enable more low-latency, human-like dialogue and interaction scenarios.
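The following is a minimal sketch of how the two model IDs might be passed in an API call. It only builds the HTTP request and does not send it. The endpoint path, the `xi-api-key` header, and the request body fields are assumptions to be verified against the official API reference, and `build_tts_request` is a hypothetical helper name.

```python
import json
import urllib.request

# Model IDs quoted in the article.
FLASH_MODELS = {"eleven_flash_v2", "eleven_flash_v2_5"}

# Assumed endpoint shape; verify against the official API reference.
API_BASE = "https://api.elevenlabs.io/v1/text-to-speech"


def build_tts_request(text, voice_id, api_key, model_id="eleven_flash_v2_5"):
    """Build (but do not send) a text-to-speech request for a Flash model."""
    if model_id not in FLASH_MODELS:
        raise ValueError(f"unknown Flash model ID: {model_id}")
    # Assumed body fields: the text to speak and the model to use.
    body = json.dumps({"text": text, "model_id": model_id}).encode("utf-8")
    return urllib.request.Request(
        url=f"{API_BASE}/{voice_id}",
        data=body,
        headers={"xi-api-key": api_key, "Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    # Placeholder voice ID and key; nothing is sent over the network here.
    req = build_tts_request("Hello there!", "YOUR_VOICE_ID", "YOUR_API_KEY")
    print(req.get_method(), req.full_url)
```

To actually send the request, pass the result to `urllib.request.urlopen` and read the audio bytes from the response. Choosing "eleven_flash_v2" for English-only applications and "eleven_flash_v2_5" for multilingual ones follows the language support described above.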

ElevenLabs also offers a range of other products and solutions, including customized voice assistants, audio production tools, and a dubbing studio, all aimed at helping users and developers in different fields create high-quality AI audio. The company says it continues to invest in research and development to improve its products and meet growing user demand.

Highlights:

The Flash model generates speech with a latency of only 75 milliseconds, making it suitable for low-latency conversational voice assistants.

Flash v2 supports English and Flash v2.5 supports 32 languages; both cost 1 credit for every two characters generated.

In blind tests, the Flash model outperformed other models in its class, and ElevenLabs describes it as the fastest text-to-speech solution available.

All in all, ElevenLabs’ Flash model opens up new possibilities for conversational AI applications through its ultra-low latency and multi-language support, and it points toward smoother, more natural human-computer interaction. Its speed advantage makes it one of the leading text-to-speech solutions on the market and one that developers and users should keep an eye on.