Neural machine translation (NMT) struggles with literary works, especially with expressions that carry cultural and emotional connotations, such as similes and metaphors, and traditional NMT systems often fall short on them. To address this, Tencent's research team developed DRT-o1, a translation system designed to improve the accuracy and fluency of literary translation and to better capture a work's cultural connotations and emotional nuances. DRT-o1 comes in two versions, DRT-o1-7B and DRT-o1-14B, both built on Qwen2.5, and it introduces a multi-agent framework.

As globalization deepens, neural machine translation (NMT) plays an increasingly important role in cross-language communication. Current translation tools handle technical documents and simple texts well, but literary texts remain difficult. Literary works are full of similes, metaphors, and other expressions with cultural and emotional connotations, and traditional translation systems often fail to convey their deeper meaning.

To close this gap, Tencent's research team released a translation system called DRT-o1. It comes in two versions, DRT-o1-7B and DRT-o1-14B. Both models are built on Qwen2.5 and rely on a new multi-agent framework optimized specifically for translating similes and metaphors. To build the training data, the team collected about 400 public domain English books from Project Gutenberg, extracted 577,600 sentences, and kept the 63,000 sentences that contain similes or metaphors.
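
The screening step can be pictured as a filter over the extracted sentences. The sketch below is only an illustration: the article does not say how sentences were screened, so the marker-word heuristic `looks_figurative` and the helper names are hypothetical stand-ins. The final line simply works out what share of the 577,600 extracted sentences the 63,000 kept ones represent.

```python
import re
from typing import Callable, Iterable, List

# Hypothetical stand-in for the screening step: a crude marker-word heuristic.
# The article does not describe the real criterion; this is for illustration only.
SIMILE_MARKERS = re.compile(
    r"\b(like|as if|as though)\b|\bas \w+ as\b", re.IGNORECASE
)


def looks_figurative(sentence: str) -> bool:
    """Return True if the sentence contains a common simile marker."""
    return bool(SIMILE_MARKERS.search(sentence))


def screen_sentences(
    sentences: Iterable[str],
    keep: Callable[[str], bool] = looks_figurative,
) -> List[str]:
    """Keep only the sentences accepted by the screening predicate."""
    return [s for s in sentences if keep(s)]


def retention_rate(kept: int, total: int) -> float:
    """Fraction of sentences that survive screening."""
    return kept / total


# Made-up sample sentences, not taken from the actual dataset.
sample = [
    "Her voice was like a bell across the water.",
    "He closed the door and went upstairs.",
    "The night was as dark as ink.",
]
kept = screen_sentences(sample)
print(kept)

# Share of extracted sentences kept, using the figures quoted in the article.
print(f"{retention_rate(63_000, 577_600):.1%}")
```

With the article's figures, roughly one extracted sentence in nine ends up in the training data.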

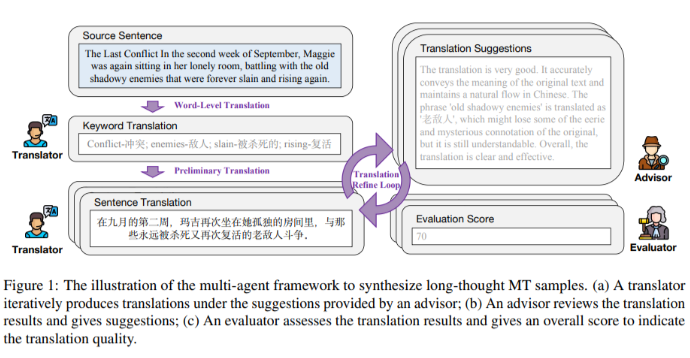

DRT-o1 uses a collaborative approach built around three roles: a translator, an advisor, and an evaluator. The multi-agent workflow begins by identifying the key terms in the source sentence and translating them one by one, so that each is rendered accurately in context. Once an initial translation has been generated, it goes through multiple rounds of refinement and evaluation until the result is fluent and easy to understand. This process helps the system capture the cultural connotations and emotional nuances of literary works.
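
The workflow described above can be sketched as a small refinement loop. This is a minimal sketch under stated assumptions, not the project's implementation: every callable (`extract_terms`, `translate_term`, `translate`, `advise`, `revise`, `evaluate`) is a hypothetical placeholder for a model call, `advise` stands for the consultant (advisor) role, and the `threshold` and `max_rounds` stopping rule is an assumption, since the article only mentions "multiple rounds".

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class RefinementTrace:
    """Every draft produced for one sentence, paired with its evaluator score."""
    glossary: Dict[str, str]
    steps: List[Tuple[str, float]] = field(default_factory=list)

    @property
    def final(self) -> str:
        return self.steps[-1][0]


def refine_translation(
    source: str,
    extract_terms: Callable[[str], List[str]],
    translate_term: Callable[[str], str],
    translate: Callable[[str, Dict[str, str]], str],
    advise: Callable[[str, str], str],
    revise: Callable[[str, str, str], str],
    evaluate: Callable[[str, str], float],
    threshold: float = 0.9,  # assumed stopping score, not from the article
    max_rounds: int = 3,     # assumed round limit, not from the article
) -> RefinementTrace:
    # Step 1: identify key terms and translate them one by one.
    glossary = {term: translate_term(term) for term in extract_terms(source)}

    # Step 2: the translator produces an initial draft using the glossary.
    draft = translate(source, glossary)
    trace = RefinementTrace(glossary=glossary)
    trace.steps.append((draft, evaluate(source, draft)))

    # Step 3: advisor feedback -> revision -> evaluator score, repeated.
    for _ in range(max_rounds):
        if trace.steps[-1][1] >= threshold:
            break
        feedback = advise(source, draft)
        draft = revise(source, draft, feedback)
        trace.steps.append((draft, evaluate(source, draft)))
    return trace


# Toy stand-ins so the loop can be run without any model.
toy_scores = {"v1": 0.5, "v2": 0.8, "v3": 0.95}
trace = refine_translation(
    source="He had a heart of stone.",
    extract_terms=lambda s: ["heart of stone"],
    translate_term=lambda t: f"<{t}>",
    translate=lambda s, glossary: "v1",
    advise=lambda s, d: "make it read more naturally",
    revise=lambda s, d, fb: "v" + str(int(d[1:]) + 1),
    evaluate=lambda s, d: toy_scores[d],
    max_rounds=5,
)
print(trace.final, len(trace.steps))
```

Keeping every intermediate draft in the trace, rather than only the final one, makes it possible to inspect how a translation evolved across rounds.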

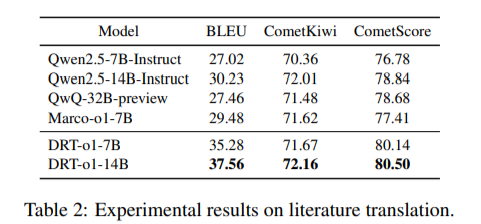

Experimental results show that DRT-o1-7B improves on Qwen2.5-7B-Instruct, the model it is built on, by 8.26 BLEU points and 3.36 COMET points. DRT-o1-14B also performs well, with gains of 7.33 BLEU points and 1.66 COMET points. These results indicate that DRT-o1 outperforms existing models on literary translation; notably, the 7B version even outperforms the larger QwQ-32B model.

By combining a multi-agent framework with long chain-of-thought reasoning, DRT-o1 marks a significant step forward for neural machine translation. It improves the accuracy and fluency of translation and offers a new approach to translating complex literary texts.

Project page: https://github.com/krystalan/DRT-o1

Highlights:

DRT-o1 comes in two versions (7B and 14B) and uses a multi-agent framework to optimize the translation of similes and metaphors.

The research team extracted sentences from about 400 public domain books and selected 63,000 literary sentences as training data.

DRT-o1 achieves significantly higher BLEU and COMET scores, demonstrating strong literary translation capabilities.

In short, DRT-o1 has achieved notable results in literary translation, and its multi-agent framework and large training set offer an effective way to improve translation quality. The project's open-source release also gives future research a valuable resource, and it is expected to further advance neural machine translation and contribute to cross-cultural communication.