Imagine generating high-quality music or sound effects from just a few hums or beats. This is no longer a distant dream. Sketch2Sound, a recent AI research project, produces high-quality audio by combining a sound imitation with a text prompt. It extracts three key control signals from the imitation (loudness, brightness, and pitch) and feeds them into a text-to-audio latent diffusion model, guiding the AI to generate sounds that meet specific requirements. The approach could bring significant changes to the field of sound creation.

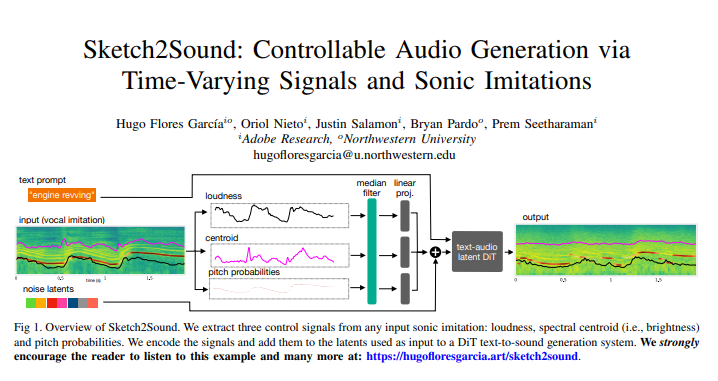

The core of Sketch2Sound is its ability to extract three key, time-varying control signals from any sound imitation (such as a vocal imitation or a reference sound): loudness, brightness (spectral centroid), and pitch. Once encoded, these control signals are added to the latent diffusion model used for text-to-sound generation, guiding the AI to generate sounds that meet specific requirements.
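As a rough illustration of what these three control signals look like, the following Python sketch computes frame-wise loudness (as RMS level in dB), spectral centroid, and pitch (via a simple autocorrelation peak) using only the standard library. The function names, frame sizes, and estimators are assumptions chosen for clarity; the extractors used in the paper may differ.

```python
import cmath
import math

def frame_signal(samples, frame_len, hop):
    """Split a list of samples into overlapping frames (no padding)."""
    return [samples[i:i + frame_len]
            for i in range(0, len(samples) - frame_len + 1, hop)]

def loudness_db(frame, eps=1e-10):
    """RMS level of one frame in dB (a simple stand-in for perceptual loudness)."""
    rms = math.sqrt(sum(x * x for x in frame) / len(frame))
    return 20.0 * math.log10(rms + eps)

def magnitude_spectrum(frame):
    """Naive DFT magnitudes for bins 0..N/2 (slow, but fine for short demo frames)."""
    n = len(frame)
    mags = []
    for k in range(n // 2 + 1):
        acc = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                  for t, x in enumerate(frame))
        mags.append(abs(acc))
    return mags

def spectral_centroid_hz(frame, sample_rate):
    """Magnitude-weighted mean frequency of the frame ("brightness")."""
    mags = magnitude_spectrum(frame)
    total = sum(mags)
    if total == 0:
        return 0.0
    n = len(frame)
    return sum(k * sample_rate / n * m for k, m in enumerate(mags)) / total

def pitch_hz(frame, sample_rate, fmin=80.0, fmax=1000.0):
    """Crude pitch estimate: the lag with the largest autocorrelation value."""
    min_lag = int(sample_rate / fmax)
    max_lag = min(int(sample_rate / fmin), len(frame) - 1)
    best_lag, best_val = 0, 0.0
    for lag in range(min_lag, max_lag + 1):
        val = sum(frame[i] * frame[i + lag] for i in range(len(frame) - lag))
        if val > best_val:
            best_lag, best_val = lag, val
    return sample_rate / best_lag if best_lag else 0.0

def extract_controls(samples, sample_rate, frame_len=200, hop=100):
    """Return the three time-varying control curves, one value per frame."""
    frames = frame_signal(samples, frame_len, hop)
    return {
        "loudness_db": [loudness_db(f) for f in frames],
        "centroid_hz": [spectral_centroid_hz(f, sample_rate) for f in frames],
        "pitch_hz": [pitch_hz(f, sample_rate) for f in frames],
    }
```

For a pure 200 Hz sine of amplitude 0.5 sampled at 8 kHz, this yields a loudness near -9 dB, a centroid near 200 Hz, and a pitch estimate close to 200 Hz in every frame. A real vocal imitation would instead produce curves that rise and fall over time, which is what the model is conditioned on.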

The most impressive aspects of this technology are its light weight and efficiency. Sketch2Sound is built on an existing text-to-audio latent diffusion model and requires only 40,000 steps of fine-tuning and a single linear layer per control signal, making it simpler and more efficient than alternatives such as ControlNet. To let the model synthesize from "sketch"-like sound imitations, the researchers also applied median filters with randomly chosen window sizes to the control signals during training, so that the model adapts to control signals with varying degrees of temporal detail. Experimental results show that Sketch2Sound not only synthesizes sounds that follow the input control signals, but also remains faithful to the text prompt and achieves audio quality comparable to the text-only baseline.

Sketch2Sound gives sound artists a new way to create. They can combine the semantic flexibility of text prompts with the expressiveness and precision of vocal gestures or imitations to create sounds that would be hard to describe in words alone. This is similar to how traditional Foley artists create sound effects by manipulating objects: Sketch2Sound guides sound generation through sound imitation, bringing a human touch to sound creation.

Sketch2Sound also overcomes limitations of traditional text-to-audio interaction. In the past, sound designers had to spend a lot of time adjusting the temporal characteristics of generated sounds to synchronize them with visuals. Sketch2Sound achieves this synchronization naturally through sound imitation. It is also not limited to vocal imitation: any type of sound imitation can be used to drive the generative model.

The researchers also developed a technique for adjusting the temporal detail of the control signals by applying median filters of different window sizes during training. This lets sound artists control how closely the generative model follows the timing of the control signal, which improves the quality of sounds that are difficult to imitate perfectly. In practice, users can trade off strict adherence to the sound imitation against audio quality by adjusting the size of the median filter.
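The idea of smoothing a control curve with a median filter of varying width can be sketched as follows. This is a minimal standard-library illustration, not the paper's code: the helper names and the candidate window sizes in `window_choices` are hypothetical, and edges are handled here by simply shrinking the window.

```python
import random
from statistics import median

def median_filter(signal, window):
    """Sliding median with an odd window; the window shrinks at the edges."""
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    return [median(signal[max(0, i - half): i + half + 1])
            for i in range(len(signal))]

def randomly_smoothed(signal, window_choices=(1, 5, 11, 21), rng=random):
    """Pick a window size at random (hypothetical choices) and filter with it.

    A window of 1 keeps every detail of the curve; larger windows keep only
    its coarse, sketch-like shape.
    """
    window = rng.choice(window_choices)
    return median_filter(signal, window), window

# A one-frame spike is removed, while a sustained step survives.
spike = median_filter([0, 0, 9, 0, 0], 3)
step = median_filter([0, 0, 0, 5, 5, 5], 3)
```

A wider window makes the curve looser, so the model has more freedom in timing; a narrower one asks it to follow the imitation more literally.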

Sketch2Sound works by first extracting three control signals (loudness, spectral centroid, and pitch) from the input audio. These control signals are then aligned with the latents of the text-to-sound model, and each one conditions the latent diffusion model through a simple linear projection layer to generate the desired sound. Experimental results show that conditioning the model on time-varying control signals significantly improves adherence to those signals, with minimal impact on audio quality and text adherence.
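To make the conditioning step concrete, here is a small Python sketch of one plausible reading: a control curve is resampled to the latent frame rate, passed through a linear layer that maps one scalar to C latent channels, and added to the latents. The function names, the linear-interpolation resampling, and the use of plain lists are all assumptions for illustration; the paper's actual architecture details may differ.

```python
def resample_linear(signal, target_len):
    """Linearly interpolate a 1-D control curve to target_len frames."""
    if target_len == 1 or len(signal) == 1:
        return [signal[0]] * target_len
    scale = (len(signal) - 1) / (target_len - 1)
    out = []
    for j in range(target_len):
        pos = j * scale
        lo = int(pos)
        hi = min(lo + 1, len(signal) - 1)
        frac = pos - lo
        out.append(signal[lo] * (1 - frac) + signal[hi] * frac)
    return out

def add_control(latents, control, weight, bias):
    """Add a linearly projected control curve to the latents.

    latents: list of T frames, each a list of C channel values.
    weight, bias: length-C lists, i.e. the parameters of a Linear(1 -> C) layer.
    """
    ctrl = resample_linear(control, len(latents))
    return [[z + w * c + b for z, w, b in zip(frame, weight, bias)]
            for frame, c in zip(latents, ctrl)]

# Toy example: 3 latent frames with 2 channels, and a 2-point control curve.
latents = [[0.0, 0.0], [0.0, 0.0], [0.0, 0.0]]
conditioned = add_control(latents, [0.0, 1.0], weight=[2.0, -2.0], bias=[0.0, 1.0])
```

With three control signals, the same operation would be applied once per signal, each with its own weights. When the weights and biases are all zero, the latents pass through unchanged, so the added layers do not disturb the pretrained model until they are trained.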

Notably, the researchers also found that the control signals can influence the semantics of the generated audio. For example, with the text prompt "forest ambience", adding random loudness bursts to the sound imitation leads the model to synthesize bird calls at those bursts, even though the prompt never mentions "birds". This indicates that the model has learned a correlation between loudness bursts and the presence of birds.

Of course, Sketch2Sound has some limitations. For example, the spectral centroid control may carry the room tone of the input sound into the generated audio, possibly because the centroid encodes the room tone whenever the input audio contains no sound events.

All in all, Sketch2Sound is a powerful generative sound model that generates sounds from text prompts and time-varying controls (loudness, brightness, and pitch). It can be driven by sound imitations or by "sketched" control curves, and it is lightweight and efficient. It gives sound artists a controllable, gesture-based, and expressive tool for generating arbitrary sounds with flexible timing, and it has broad application prospects in fields such as music creation and game sound design.

Paper address: https://arxiv.org/pdf/2412.08550

The emergence of Sketch2Sound heralds a new era in the field of sound creation. It provides artists with unprecedented creative freedom and possibilities, and also brings unlimited imagination space to music, games, movies and other fields. I believe that in the near future, this technology will be more widely used and bring us a more colorful sound world.