Chinese artificial intelligence startup DeepSeek has released DeepSeek-V3, its latest ultra-large model, which has drawn industry attention for its open-source code and strong performance. With 671B parameters and a mixture-of-experts (MoE) architecture, DeepSeek-V3 outperforms leading open-source models on multiple benchmarks and even performs comparably to some closed-source models. Its main innovations are an auxiliary-loss-free load balancing strategy and multi-token prediction, which significantly improve training efficiency and generation speed. The release marks a major advance for open-source AI, further narrowing the gap with closed-source AI, a step DeepSeek hopes will pave the way toward artificial general intelligence (AGI).

On December 26, 2024, Chinese AI startup DeepSeek, known for challenging leading AI vendors with open-source technology, released its latest ultra-large model, DeepSeek-V3. The model has 671B total parameters and uses a mixture-of-experts architecture that activates only a subset of them (37B per token), so each input is handled accurately and efficiently. According to benchmarks published by DeepSeek, the new model outperforms leading open-source models, including Meta's Llama3.1-405B, and performs comparably to closed models from Anthropic and OpenAI.
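To illustrate what activating only a subset of parameters means, here is a minimal top-k routing sketch. It is a generic mixture-of-experts layer, not DeepSeek's implementation: the function names, the dot-product router and the softmax over the selected experts are assumptions chosen for simplicity, and DeepSeekMoE's real router differs in details (for example, it also keeps shared experts that are always active). What it shows is that only the `k` selected experts are evaluated for a token, so compute scales with activated rather than total parameters.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a short list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: Vector, router: List[Vector], experts: List[Expert], k: int) -> Vector:
    """Send token vector x to its k best-scoring experts and mix their outputs."""
    # Router score per expert: dot product of x with that expert's router vector.
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in router]
    # Keep only the k highest-scoring experts; the rest are never evaluated.
    top = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    # Gate weights (an assumption here): softmax over the selected experts' scores.
    gates = softmax([scores[i] for i in top])
    out = [0.0] * len(x)
    for gate, i in zip(gates, top):
        y = experts[i](x)
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out


if __name__ == "__main__":
    # Toy experts: expert i scales its input by (i + 1).
    experts = [lambda v, s=i + 1: [s * vi for vi in v] for i in range(4)]
    router = [[0.1, 0.0], [0.5, 0.0], [0.3, 0.0], [0.9, 0.0]]
    # Only experts 3 and 1 (the two highest scores) run for this token.
    print(moe_forward([1.0, 0.0], router, experts, k=2))
```

In a real model the experts are feed-forward networks with millions of weights each, which is why skipping all but a few of them saves so much compute.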

The release of DeepSeek-V3 further narrows the gap between open-source and closed-source AI. DeepSeek, which began as an offshoot of Chinese quantitative hedge fund High-Flyer Capital Management, hopes these developments will pave the way for artificial general intelligence (AGI), in which models can understand or learn any intellectual task a human can perform.

The main features of DeepSeek-V3 include:

Like its predecessor DeepSeek-V2, the new model is built on multi-head latent attention (MLA) and the DeepSeekMoE architecture, which together enable efficient training and inference.
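Much of MLA's inference efficiency comes from caching one small compressed latent vector per token instead of full per-head keys and values. The back-of-the-envelope sketch below compares the two, per token and per layer. The dimensions are illustrative assumptions chosen to resemble the configuration described in DeepSeek's technical report (128 heads of size 128, a 512-dimensional latent and a 64-dimensional decoupled positional key), and the helper functions are hypothetical; they count cached elements only and do not model attention itself.

```python
def mha_cache_elems(n_heads: int, head_dim: int) -> int:
    """Elements cached per token per layer by standard multi-head attention."""
    # One key vector and one value vector for every head.
    return 2 * n_heads * head_dim


def mla_cache_elems(latent_dim: int, rope_key_dim: int) -> int:
    """Elements cached per token per layer by multi-head latent attention."""
    # One compressed latent (keys and values are re-projected from it)
    # plus one small positional key shared by all heads.
    return latent_dim + rope_key_dim


# Illustrative, assumed dimensions (see the lead-in).
N_HEADS, HEAD_DIM = 128, 128
LATENT_DIM, ROPE_KEY_DIM = 512, 64

mha = mha_cache_elems(N_HEADS, HEAD_DIM)
mla = mla_cache_elems(LATENT_DIM, ROPE_KEY_DIM)
print(f"MHA: {mha}, MLA: {mla}, reduction: {mha / mla:.1f}x")
# MHA: 32768, MLA: 576, reduction: 56.9x
```

A smaller cache lets the server hold longer contexts or more concurrent requests in the same GPU memory.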

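The company also introduced two innovations: an auxiliary-loss-free load balancing strategy, which keeps work spread evenly across experts without adding an auxiliary loss term that can degrade model quality, and Multi-Token Prediction (MTP), which trains the model to predict several future tokens at once. According to DeepSeek, these improve training efficiency and let the model run three times faster than DeepSeek-V2, generating 60 tokens per second.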
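The auxiliary-loss-free strategy can be sketched as follows, assuming the mechanism described in DeepSeek's technical report: each expert carries a bias that is added to its routing score only when choosing the top-k experts, and after each step the bias is lowered for overloaded experts and raised for underloaded ones by a fixed step `gamma`. The function names and toy numbers are hypothetical, and the sketch covers expert selection only, not gate weights or the MTP objective.

```python
from typing import List, Sequence


def select_experts(affinity: Sequence[float], bias: Sequence[float], k: int) -> List[int]:
    """Pick the top-k experts by affinity + bias (the bias affects selection only)."""
    adjusted = [a + b for a, b in zip(affinity, bias)]
    return sorted(range(len(adjusted)), key=lambda i: adjusted[i], reverse=True)[:k]


def expert_load(batch: Sequence[Sequence[float]], bias: Sequence[float], k: int) -> List[int]:
    """Count how many tokens in the batch are routed to each expert."""
    load = [0] * len(bias)
    for affinity in batch:
        for i in select_experts(affinity, bias, k):
            load[i] += 1
    return load


def update_bias(bias: Sequence[float], load: Sequence[int], gamma: float) -> List[float]:
    """Lower the bias of overloaded experts and raise it for underloaded ones."""
    mean = sum(load) / len(load)
    new_bias = []
    for b, n in zip(bias, load):
        if n > mean:
            new_bias.append(b - gamma)
        elif n < mean:
            new_bias.append(b + gamma)
        else:
            new_bias.append(b)
    return new_bias


if __name__ == "__main__":
    # Toy batch of 4 tokens over 3 experts; expert 0 is every token's top choice.
    batch = [[0.9, 0.1, 0.0], [0.6, 0.5, 0.1], [0.55, 0.2, 0.5], [0.7, 0.65, 0.3]]
    bias = [0.0, 0.0, 0.0]
    before = expert_load(batch, bias, k=1)  # [4, 0, 0]
    bias = update_bias(bias, before, gamma=0.1)
    after = expert_load(batch, bias, k=1)  # [1, 2, 1]
    print(before, after)
```

Because balancing is steered through the bias rather than a loss term, the training gradient stays focused on the language-modeling objective.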

In pre-training, DeepSeek-V3 was trained on 14.8T high-quality, diverse tokens, after which its context length was extended in two stages (first to 32K, then to 128K tokens). Finally, it was post-trained with supervised fine-tuning (SFT) and reinforcement learning (RL) to align it with human preferences and further unlock its capabilities.

To cut training costs, DeepSeek used a range of hardware and algorithmic optimizations, including an FP8 mixed-precision training framework and the DualPipe algorithm for pipeline parallelism. DeepSeek says the full training of DeepSeek-V3 took 2,788K H800 GPU hours, or approximately $5.57 million, far less than the hundreds of millions of dollars typically spent on pre-training large language models.
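The dollar figure follows from simple arithmetic. DeepSeek's technical report assumes a rental price of $2 per H800 GPU hour and splits the 2,788K hours into pre-training (2,664K), context extension (119K) and post-training (5K); the sketch below reproduces that calculation, which gives $5.576 million. The report also notes that this covers only the final training run, not earlier research or ablation experiments. The constant and function names are hypothetical.

```python
# Stage breakdown in H800 GPU hours, as reported by DeepSeek.
GPU_HOURS = {
    "pre-training": 2_664_000,
    "context extension": 119_000,
    "post-training": 5_000,
}
PRICE_PER_GPU_HOUR = 2.0  # USD; the rental-price assumption used in the report


def training_cost_usd(hours: dict, price: float) -> float:
    """Total cost = total GPU hours x price per GPU hour."""
    return sum(hours.values()) * price


total_hours = sum(GPU_HOURS.values())
cost = training_cost_usd(GPU_HOURS, PRICE_PER_GPU_HOUR)
print(f"{total_hours:,} GPU hours -> ${cost / 1e6:.3f} million")
# 2,788,000 GPU hours -> $5.576 million
```

Swapping in a different hourly price shows how sensitive the headline number is to that single assumption.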

According to DeepSeek, DeepSeek-V3 is now the strongest open-source model available. In the company's own benchmarks, it outperformed the closed-source GPT-4o on most tests, the exceptions being the English-focused SimpleQA and FRAMES, where the OpenAI model led with scores of 38.2 and 80.5 respectively (DeepSeek-V3 scored 24.9 and 73.3). DeepSeek-V3 performed particularly well on Chinese and math benchmarks, scoring 90.2 on MATH-500, ahead of second-place Qwen at 80.

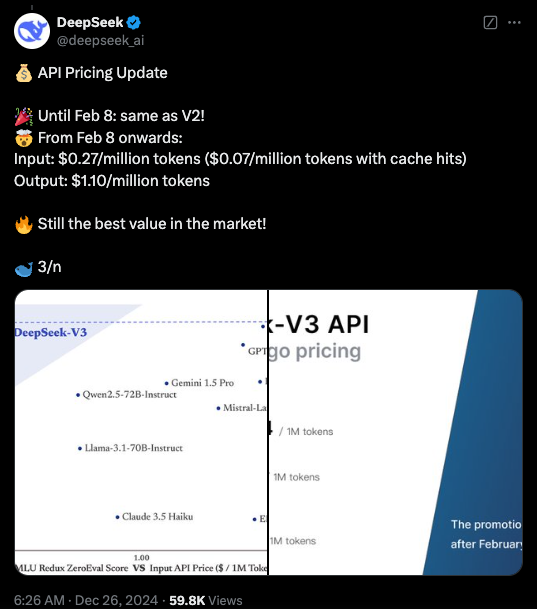

The code for DeepSeek-V3 is available on GitHub under an MIT license, while the model itself is provided under the company's own model license. Enterprises can also try the new model through DeepSeek Chat, a ChatGPT-like platform, and access the API for commercial use. DeepSeek will keep API pricing the same as for DeepSeek-V2 until February 8; after that, it will charge $0.27 per million input tokens ($0.07 per million for cache hits) and $1.10 per million output tokens.
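To estimate what a workload would cost at the post-February 8 prices, a small calculator is enough. This is a hypothetical helper, not part of any DeepSeek SDK, and it does not call the API; it assumes you already know how many input tokens were cache hits versus misses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """USD prices per million tokens."""
    input_cache_miss: float
    input_cache_hit: float
    output: float


# Post-February 8 prices quoted in the article.
DEEPSEEK_V3 = Pricing(input_cache_miss=0.27, input_cache_hit=0.07, output=1.10)


def request_cost(p: Pricing, miss_tokens: int, hit_tokens: int, output_tokens: int) -> float:
    """Estimate the USD cost of a workload from its token counts."""
    # Token counts times per-million prices, then divide by one million.
    weighted = (
        miss_tokens * p.input_cache_miss
        + hit_tokens * p.input_cache_hit
        + output_tokens * p.output
    )
    return weighted / 1_000_000


# Example: 2M uncached input, 1M cached input, 0.5M output tokens.
cost = request_cost(DEEPSEEK_V3, 2_000_000, 1_000_000, 500_000)
print(f"${cost:.2f}")  # $1.16
```

Splitting input by cache status matters because cache hits cost roughly a quarter as much as cache misses.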

Highlights:

DeepSeek-V3 has been released, with performance surpassing Llama and Qwen.

It uses 671B parameters and a mixture-of-experts architecture to improve efficiency.

Its innovations include auxiliary-loss-free load balancing and multi-token prediction, which improve speed.

Training costs are significantly lower, advancing the development of open-source AI.

The openness and strong performance of DeepSeek-V3 are likely to have a significant impact on the AI field, accelerating the development of open-source AI technology and its application across many domains. DeepSeek says it will keep developing more advanced models as it works toward AGI, and there is good reason to expect further breakthroughs from the company.