OpenAI's latest o-series AI models are designed to improve the safety of AI systems through a deeper understanding of rules and stronger reasoning capabilities. Rather than relying solely on learning from examples, as earlier models did, these models can proactively understand and apply safety guidelines to block harmful requests. The article details the three-stage training process of the o1 model and how it outperforms other mainstream AI systems in safety tests. However, even the improved o1 model can still be manipulated, which highlights the ongoing challenges in the field of AI safety.

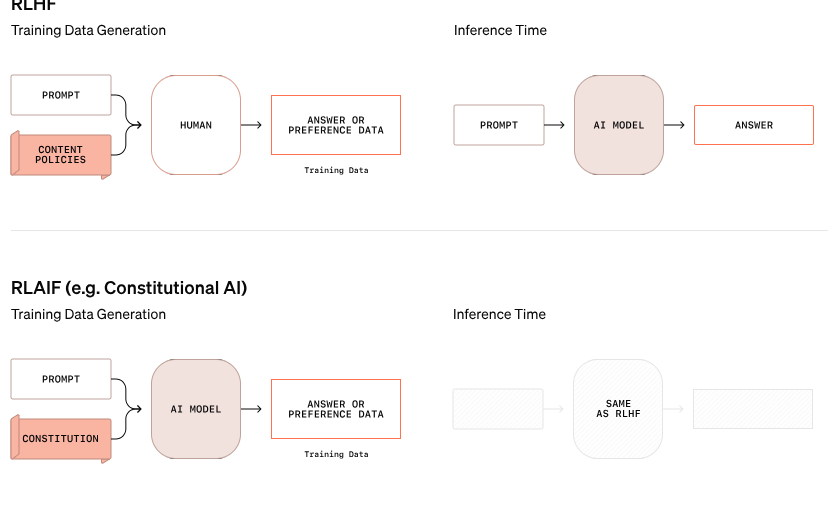

OpenAI has announced a new approach to AI safety, called deliberative alignment, that changes the way AI systems handle safety rules. The new o-series models no longer rely solely on learning good and bad behavior from examples; instead, they can understand specific safety guidelines and actively reason about them.

In one example from OpenAI's research, a user tried to obtain instructions for an illegal activity by hiding the request in encoded text. The model successfully decoded the message but refused the request, explicitly citing the safety rule it would violate. This step-by-step reasoning illustrates how the model identifies and follows the relevant safety guidelines.

The o1 model is trained in three stages. First, the model learns to be helpful. Next, through supervised learning, it studies specific safety guidelines. Finally, reinforcement learning has the model practice applying these rules, a step intended to help it understand and internalize the guidelines.

In OpenAI's tests, the newly launched o1 model performed significantly better on safety than other mainstream systems such as GPT-4o, Claude 3.5 Sonnet, and Gemini 1.5 Pro. The tests measured both how well each model refused harmful requests and how well it allowed appropriate ones through; o1 achieved top scores for both accuracy and resistance to jailbreak attempts.

OpenAI co-founder Wojciech Zaremba said on social media that he is very proud of this "deliberative alignment" work and believes that reasoning models like this can be aligned in a completely new way. Ensuring that a system remains consistent with human values is a major challenge, especially in the development of artificial general intelligence (AGI).

Despite OpenAI's claims of progress, a hacker known as "Pliny the Liberator" showed that even the new o1 and o1 Pro models can be manipulated into breaching their safety guidelines. Pliny got the models to generate adult content and even share instructions for making Molotov cocktails, although the system initially refused these requests. These incidents highlight how difficult it is to control such complex AI systems, because they operate on probabilities rather than strict rules.

Zaremba said OpenAI has about 100 employees dedicated to AI safety and alignment with human values. He questioned rivals' approaches to safety, particularly those of Elon Musk's xAI, which he said prioritizes market growth over safety measures, and Anthropic, which he said recently launched an AI agent without proper safeguards. Zaremba believes that a similar move would bring OpenAI "huge negative feedback."

Official blog: https://openai.com/index/deliberative-alignment/

Highlights:

OpenAI's new o-series models can proactively reason about safety rules, improving system safety.

The o1 model outperforms other mainstream AI systems in accuracy and in rejecting harmful requests.

Despite the improvements, the new models can still be manipulated, and serious safety challenges remain.

Overall, OpenAI's o-series models represent significant progress in AI safety, but they also expose the complexity and ongoing challenges of making large language models safe. Continued effort will be needed to deal with AI safety risks effectively.