Yan Shuicheng's team at Kunlun Wanwei, working with Nanyang Technological University in Singapore, has released a project called Q* that aims to improve the reasoning capabilities of small language models. Rather than scaling up model size, Q* focuses on making small models reason as well as models with dozens or even hundreds of times more parameters. According to the team, small models equipped with Q* match or surpass much larger models on several benchmarks, a result that could ease the limitations small models face in practical applications.

Yan Shuicheng's team at China's Kunlun Wanwei and a research team from Nanyang Technological University in Singapore recently released a project called Q*, which aims to improve the reasoning capabilities of small models. The project is not OpenAI's, despite the shared name, but it enables small models to reach the reasoning level of models with dozens or even hundreds of times more parameters.

The research team reports strong experimental results for the Q* algorithm. On the GSM8K dataset, Q* raised the accuracy of Llama-2-7b to 80.8%, surpassing ChatGPT.

On the MATH dataset, Q* raised the accuracy of DeepSeek-Math-7b to 55.4%, surpassing Gemini Ultra.

On the MBPP dataset, Q* raised the accuracy of CodeQwen1.5-7b-Chat to 77.0%, narrowing the gap with GPT-4 in programming ability. These results show the potential of the Q* algorithm for improving the reasoning capabilities of small models.

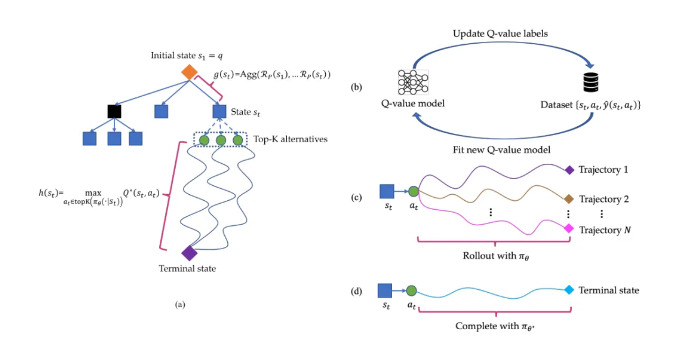

The Q* algorithm works by decomposing the reasoning trajectory of a large language model into a sequence of states, planning over those states, and using the A* search algorithm to run a best-first search on complex reasoning tasks. The researchers also trained a proxy Q-value model through supervised learning to estimate the optimal Q-value of each state-action pair, which is what improves the model's performance.
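The search described above can be illustrated with a minimal sketch. This is not the authors' implementation: it is a generic A*-style best-first search over partial trajectories, run on a toy arithmetic task instead of an LLM. The scoring rule `f = utility + lam * max_a Q(state, a)`, the names `best_first_search`, `utility`, `q_value`, and `lam`, and the toy task itself are all assumptions made for illustration. In the real system the Q-values would come from the trained proxy model.

```python
import heapq
import itertools
from typing import Callable, Iterable, Optional, Tuple

State = Tuple[int, ...]  # a partial trajectory: the steps chosen so far


def best_first_search(
    start: State,
    actions: Callable[[State], Iterable[int]],
    is_terminal: Callable[[State], bool],
    utility: Callable[[State], float],
    q_value: Callable[[State, int], float],
    lam: float = 1.0,
    max_expansions: int = 10_000,
) -> Optional[State]:
    """A*-style search that always expands the state with the highest f-value."""

    def f(state: State) -> float:
        # Terminal states have no future reward left to estimate.
        if is_terminal(state):
            return utility(state)
        # Heuristic: best estimated Q-value over the available next steps.
        h = max((q_value(state, a) for a in actions(state)), default=float("-inf"))
        return utility(state) + lam * h

    tie = itertools.count()  # unique tie-breaker so states are never compared
    frontier = [(-f(start), next(tie), start)]  # heapq is a min-heap, so negate f
    for _ in range(max_expansions):
        if not frontier:
            return None
        _, _, state = heapq.heappop(frontier)
        if is_terminal(state):
            return state
        for a in actions(state):
            child = state + (a,)
            heapq.heappush(frontier, (-f(child), next(tie), child))
    return None


# Toy task (hypothetical): reach TARGET by adding steps from STEPS, using few steps.
TARGET, STEPS = 10, (1, 3, 4)


def toy_actions(state: State):
    return [a for a in STEPS if sum(state) + a <= TARGET]


def toy_q_value(state: State, a: int) -> float:
    # Hand-written stand-in for a learned Q-value model: an optimistic
    # estimate of the future reward (negative step count) after taking `a`.
    return -(1 + (TARGET - sum(state) - a) / max(STEPS))


result = best_first_search(
    start=(),
    actions=toy_actions,
    is_terminal=lambda s: sum(s) == TARGET,
    utility=lambda s: -float(len(s)),  # each step taken so far costs 1
    q_value=toy_q_value,
)
print(result)  # a shortest sequence of steps that sums to TARGET
```

Because the stand-in Q-value never underestimates the achievable future reward in this toy task, the first terminal state popped from the frontier is a shortest solution. A learned Q-value model offers no such guarantee, so the quality of the search depends on how well that model approximates the optimal Q-values.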

Highlights:

The Q* project was not released by OpenAI. Using the research team's algorithm, the reasoning ability of small models improves substantially.

The project achieved strong experimental results on multiple datasets, including GSM8K, MATH, and MBPP, demonstrating the potential and effectiveness of the Q* algorithm.

Paper link: https://arxiv.org/abs/2406.14283

The Q* results point to a new direction for the development of small models, and the size of the reported improvements makes the algorithm worth watching. It may go on to be applied in more fields. Interested readers can find further details in the paper linked above.