Research teams from Nanjing University, Fudan University and Huawei's Noah's Ark Laboratory have proposed a new method for 3D digital humans. It addresses two weaknesses of existing methods: insufficient multi-view consistency and limited emotional expressiveness. The method synthesizes 3D talking avatars with controllable emotions and significantly improves lip synchronization and rendering quality. It is built on the newly constructed EmoTalk3D dataset, which contains calibrated multi-view videos, emotion annotations and frame-by-frame 3D geometry, and which has been made public for non-commercial research use. Using a "speech-to-geometry-to-appearance" mapping framework, the method captures subtle facial expressions and achieves high-fidelity rendering from free viewpoints.

Project page: https://nju-3dv.github.io/projects/EmoTalk3D/

The team collected the EmoTalk3D dataset, which includes calibrated multi-view videos, emotion annotations and frame-by-frame 3D geometry, and proposed a new method for synthesizing 3D talking avatars with controllable emotions that significantly improves lip synchronization and rendering quality.

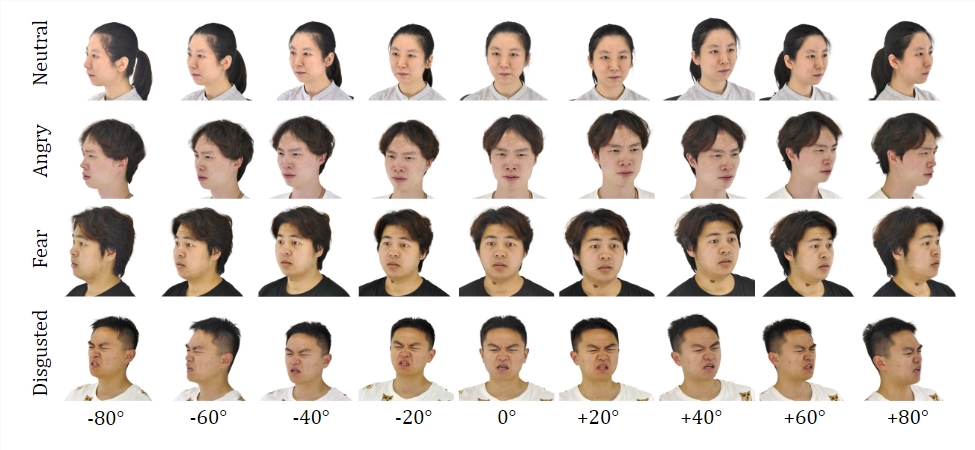

Dataset:

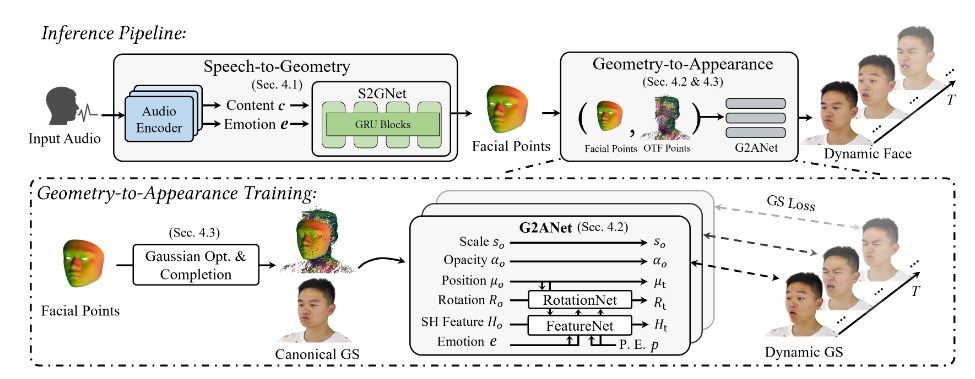

By training on the EmoTalk3D dataset, the research team built a "speech-to-geometry-to-appearance" mapping framework. A realistic 3D geometry sequence is first predicted from audio features; the appearance of a 3D talking head, represented by 4D Gaussians, is then synthesized from the predicted geometry. The appearance is further decomposed into canonical and dynamic Gaussians, which are learned from the multi-view videos and fused to render free-view talking-head animations.
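To make the canonical/dynamic split more concrete, here is a minimal Python sketch of one possible fusion step. It assumes, purely for illustration, that each dynamic Gaussian acts as a per-frame residual (a position and color offset) added to a matching canonical Gaussian. The names `Gaussian`, `DynamicOffset` and `fuse` are hypothetical, and the paper's actual fusion may work differently.

```python
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Gaussian:
    position: Vec3  # 3D center of the Gaussian
    color: Vec3     # RGB, kept in [0, 1]
    opacity: float  # in [0, 1]


@dataclass
class DynamicOffset:
    """Hypothetical per-frame residual, assumed to be predicted from the current geometry."""
    d_position: Vec3
    d_color: Vec3


def _clamp01(v: float) -> float:
    return min(1.0, max(0.0, v))


def fuse(canonical: List[Gaussian], offsets: List[DynamicOffset]) -> List[Gaussian]:
    """Combine static canonical Gaussians with one frame's dynamic residuals.

    Assumes a one-to-one correspondence between canonical Gaussians and offsets.
    Opacity is taken unchanged from the canonical set.
    """
    if len(canonical) != len(offsets):
        raise ValueError("expected exactly one offset per canonical Gaussian")
    fused = []
    for g, o in zip(canonical, offsets):
        position = tuple(p + d for p, d in zip(g.position, o.d_position))
        color = tuple(_clamp01(c + d) for c, d in zip(g.color, o.d_color))
        fused.append(Gaussian(position, color, g.opacity))
    return fused


# Usage: one frame-specific residual moves a Gaussian and changes its color.
canonical = [Gaussian((0.0, 0.0, 0.0), (0.5, 0.5, 0.5), 1.0)]
frame_offsets = [DynamicOffset((0.5, 0.0, -0.25), (0.75, -0.25, 0.0))]
print(fuse(canonical, frame_offsets))
```

The design choice here is that the canonical set stays fixed across frames while only the small residuals change per frame, which is one common way to separate static appearance from expression-dependent detail such as wrinkles.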

The model lets the emotion of the generated talking avatar be controlled and renders the avatar across a wide range of viewing angles. It improves rendering quality and the stability of generated lip motion while capturing dynamic facial details such as wrinkles and subtle expressions. In the generated examples, the 3D digital human's happy, angry and frustrated expressions are displayed accurately.

The overall pipeline consists of five modules:

The first is an emotion-content decomposition encoder, which separates content features from emotion features in the input speech; the second is a speech-to-geometry network, which predicts dynamic 3D point clouds from these features; the third is a Gaussian optimization and completion module, which establishes a canonical appearance; the fourth is a geometry-to-appearance network, which synthesizes facial appearance from the dynamic 3D point clouds; the fifth is a rendering module, which renders the dynamic Gaussians into free-view animation.
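The data flow between these five modules can be sketched as follows. Every module here is a toy stand-in (hand-written rules instead of trained networks), and the function names, the `EMOTION_GAIN` table and the single-channel "Gaussians" are hypothetical. The sketch only illustrates how speech features become per-frame geometry, then appearance, then a free-view render, with the emotion label passed through as a controllable input.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]
Splat = Tuple[float, float, float]  # (u, v, gray) after projection

# Hypothetical emotion-to-intensity table, used only to show emotion control.
EMOTION_GAIN: Dict[str, float] = {"neutral": 1.0, "happy": 1.2, "angry": 1.5}


@dataclass
class Features:
    content: List[float]  # one toy content value per audio frame
    emotion: str          # emotion label kept separate from content


@dataclass
class Gaussian:
    center: Point
    gray: float  # toy single-channel color in [0, 1]


def encode(audio: List[float], emotion: str) -> Features:
    """Module 1 stand-in: separate content (toy: frame energy) from emotion."""
    return Features(content=[abs(a) for a in audio], emotion=emotion)


def speech_to_geometry(feats: Features, template: List[Point]) -> List[List[Point]]:
    """Module 2 stand-in: one 3D point cloud per frame.

    Toy rule: template points below y = 0 (the 'jaw') drop by content * gain.
    """
    gain = EMOTION_GAIN.get(feats.emotion, 1.0)
    return [
        [(x, y - gain * c, z) if y < 0 else (x, y, z) for (x, y, z) in template]
        for c in feats.content
    ]


def canonical_appearance(template: List[Point]) -> List[float]:
    """Module 3 stand-in: one static (canonical) gray value per point."""
    return [0.5] * len(template)


def geometry_to_appearance(
    cloud: List[Point], template: List[Point], canonical: List[float]
) -> List[Gaussian]:
    """Module 4 stand-in: darken each point by how far it moved from the template."""
    gaussians = []
    for p, q, base in zip(cloud, template, canonical):
        shift = math.dist(p, q)
        gaussians.append(Gaussian(center=p, gray=max(0.0, base - 0.1 * shift)))
    return gaussians


def render(gaussians: List[Gaussian], yaw: float) -> List[Splat]:
    """Module 5 stand-in: rotate about the y-axis by `yaw` (the free viewpoint),
    then project orthographically onto the image plane."""
    cos_y, sin_y = math.cos(yaw), math.sin(yaw)
    splats = []
    for g in gaussians:
        x, y, z = g.center
        splats.append((cos_y * x + sin_y * z, y, g.gray))
    return splats


def talking_head(
    audio: List[float], emotion: str, template: List[Point], yaw: float = 0.0
) -> List[List[Splat]]:
    """Chain the five stand-in modules; returns one list of splats per frame."""
    feats = encode(audio, emotion)
    clouds = speech_to_geometry(feats, template)
    canonical = canonical_appearance(template)
    return [render(geometry_to_appearance(c, template, canonical), yaw) for c in clouds]


# Usage: two audio frames (silence, then speech) with an "angry" label, viewed at 30 degrees.
template = [(0.0, 1.0, 0.0), (0.0, -1.0, 0.0)]
frames = talking_head([0.0, 0.5], "angry", template, yaw=math.pi / 6)
```

Because the emotion label travels separately from the content features, changing only `emotion` changes how strongly the same speech deforms the geometry, which mirrors (in a very simplified way) the idea of emotion control described above.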

Additionally, they built the EmoTalk3D dataset, an emotion-annotated multi-view talking head dataset with frame-by-frame 3D facial shapes, which will be made available to the public for non-commercial research purposes.
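As an illustration of what a single record combining these three kinds of data might contain, here is a Python schema with a simple consistency check. The class names, field names, clip-level emotion label and file layout are all assumptions made for illustration; they are not the dataset's published format.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Tuple[Vec3, Vec3, Vec3]

IDENTITY: Mat3 = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))


@dataclass
class Camera:
    name: str
    intrinsics: Mat3   # calibrated 3x3 K matrix
    rotation: Mat3     # world-to-camera rotation
    translation: Vec3  # world-to-camera translation


@dataclass
class Frame:
    index: int
    images: Dict[str, str]  # camera name -> image path (hypothetical layout)
    geometry: List[Vec3]    # this frame's 3D face points


@dataclass
class Clip:
    subject: str
    emotion: str  # emotion annotation for the whole clip (assumed granularity)
    cameras: List[Camera]
    frames: List[Frame] = field(default_factory=list)

    def validate(self) -> List[str]:
        """Report frames that lack a calibrated view or their 3D geometry."""
        problems = []
        names = {cam.name for cam in self.cameras}
        for frame in self.frames:
            missing = sorted(names - set(frame.images))
            if missing:
                problems.append(f"frame {frame.index}: missing views {missing}")
            if not frame.geometry:
                problems.append(f"frame {frame.index}: no 3D geometry")
        return problems


# Usage: frame 0 is complete; frame 1 lacks one view and its geometry.
cams = [Camera(f"cam{i}", IDENTITY, IDENTITY, (0.0, 0.0, float(i))) for i in range(2)]
clip = Clip("subject01", "happy", cams, [
    Frame(0, {"cam0": "f0_cam0.png", "cam1": "f0_cam1.png"}, [(0.0, 0.0, 0.0)]),
    Frame(1, {"cam0": "f1_cam0.png"}, []),
])
print(clip.validate())
```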

Highlights:

Propose a new method to synthesize digital humans with controllable emotions.

Build a mapping framework “from speech to geometry to appearance.”

Establish the EmoTalk3D dataset and prepare it for public release.

This research offers a new direction for 3D digital human technology. The proposed method and dataset provide valuable resources for future work toward 3D digital humans that are more realistic and emotionally expressive, and the public release of the EmoTalk3D dataset should encourage collaboration and exchange within the academic community.