Deepfakes, a rapidly developing form of adversarial artificial intelligence, pose an increasingly serious threat to the global economy and security. The economic damage they cause is expected to grow significantly, with the banking and financial services industry a major target. This article analyzes the rapid development of deepfake technology, the risks and challenges it brings to enterprises and individuals, and the dilemmas enterprises face when dealing with this emerging threat.

Deepfakes are one of the fastest-growing forms of adversarial artificial intelligence. Losses related to them are expected to soar from $12.3 billion in 2023 to $40 billion in 2027, an astonishing compound annual growth rate of 32%. Deloitte expects deepfakes to surge in the coming years, with banking and financial services being a major target.
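For readers who want to check the growth arithmetic, the following is a minimal sketch of the standard compound annual growth rate formula applied to the two loss figures above. The function names `cagr` and `project` are illustrative, and treating 2023 to 2027 as four annual periods is an assumption; the underlying forecast may use a different convention.

```python
def cagr(start_value: float, end_value: float, periods: int) -> float:
    """Compound annual growth rate between two values over `periods` years."""
    if start_value <= 0 or periods <= 0:
        raise ValueError("start_value and periods must be positive")
    return (end_value / start_value) ** (1 / periods) - 1


def project(start_value: float, rate: float, periods: int) -> float:
    """Value reached after compounding `rate` for `periods` years."""
    return start_value * (1 + rate) ** periods


if __name__ == "__main__":
    # Loss figures from the article, in billions of US dollars.
    # Assumption: 2023 -> 2027 is counted as four annual periods.
    implied = cagr(12.3, 40.0, 4)
    print(f"Implied CAGR from the endpoints: {implied:.1%}")

    # Cross-check: compound the reported 32% rate forward from 2023.
    print(f"$12.3B at 32% for 4 years: ${project(12.3, 0.32, 4):.1f}B")
```

Under the four-period assumption, the endpoints imply a rate of roughly 34%, close to the reported 32%. The small gap may come from rounding or from a different period convention in the original forecast.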

Deepfakes are at the forefront of adversarial AI attacks, growing 3,000% in the last year alone. Deepfake incidents are expected to increase by 50% to 60% in 2024, with 140,000 to 150,000 cases expected globally this year.

The latest generation of generative AI applications, tools, and platforms gives attackers everything they need to create deepfake videos, impersonated voices, and fraudulent documents quickly and cheaply. Pindrop's 2024 Voice Intelligence and Security Report estimates that deepfake fraud targeting contact centers costs $5 billion annually. The report highlights the serious threat deepfakes pose to banking and financial services.

Bloomberg reported last year that an entire cottage industry has emerged on the dark web, selling scamming software priced from $20 to thousands of dollars. More recently, an infographic based on Sumsub's 2023 Identity Fraud Report provides a global perspective on the rapid growth of AI fraud.

Nearly one-third of enterprises have no strategy for dealing with the risk of adversarial AI attacks, which are most likely to begin with deepfakes of their key executives. New research from Ivanti found that 30% of enterprises have no plan to identify and defend against adversarial AI attacks.

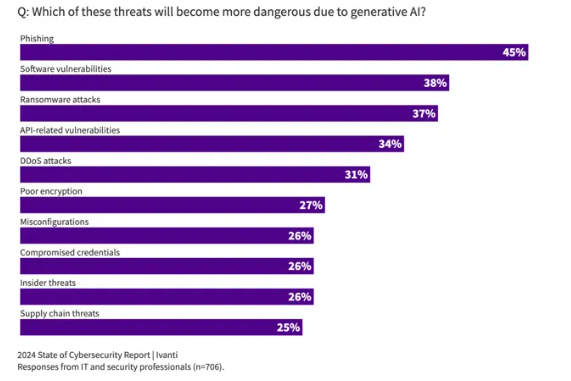

Ivanti's 2024 State of Cybersecurity Report found that 74% of companies surveyed have already seen evidence of AI threats. An overwhelming majority (89%) believe the AI threat is just beginning. Among the CISOs, CIOs and IT leaders Ivanti interviewed, 60% were concerned that their organizations were not prepared to defend against AI threats and attacks. The use of deepfakes as part of an orchestrated strategy that includes phishing, software exploits, ransomware, and API-related vulnerabilities is becoming increasingly common. This is consistent with the threats that security professionals expect to become more dangerous because of generative AI.

Faced with the rapid development of deepfake technology and the serious risks it brings, companies need to take active countermeasures, strengthen their security protections, and raise employees' risk awareness in order to reduce losses and keep themselves safe. Advances in the technology must also be matched by improvements in security measures to prevent its abuse.