Adobe, a giant in the field of creative software, has caused huge controversy by updating its terms of service. The update allows Adobe to access user works, and many users believe the aim is to improve its AI model Firefly. The move has been widely criticized as an "overlord clause" (an unfair, take-it-or-leave-it term) that violates users' copyright and privacy. This article analyzes the controversy over Adobe's updated terms of service, the reasons behind it, and the increasingly tense relationship between technology companies and users.

Adobe, a well-known name in the creative industries, is known as the "Copyright Guardian" for its stance on copyright protection. But recently, the company has been caught in a vortex of public opinion because of a quiet update to its terms of service.

In February of this year, Adobe quietly updated its product terms of service with an eye-catching addition: users must agree that Adobe can access their works through automated and manual means, including works protected by non-disclosure agreements. Adobe will use these works to improve its services and software through technologies such as machine learning. Users who do not agree to the new terms will not be able to use Adobe's software.

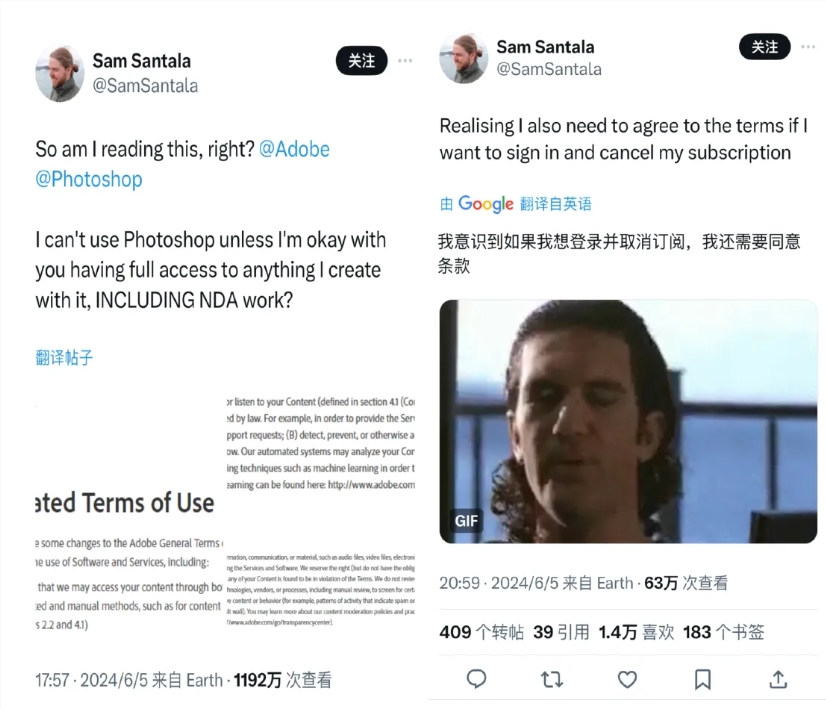

The change only recently came to light, sparking a backlash from Adobe's core users, including creatives, digital artists and designers. They believe that this is a compulsory authorization, essentially an "overlord clause", and that its real purpose is to train Adobe's generative AI model "Firefly". A blogger named Sam Santala questioned the provision on Twitter, and his tweet has received tens of millions of views.

Many users have expressed concerns about their privacy and copyright and have chosen to stop using Adobe's products. At the same time, Meta has taken similar measures, updating its privacy policy to allow information shared by users on Meta products and services to be used to train AI. Users who do not agree to the new privacy policy are left to consider giving up social media products such as Facebook and Instagram.

With the rapid development of AI technology, the battle between technology companies and users over data privacy, content ownership and control has become increasingly fierce. Adobe claims that the training data for its Firefly model comes from hundreds of millions of images in the Adobe image library, some openly licensed images, and public images whose copyright protection has expired. Other AI image generation tools, such as Stability AI's Stable Diffusion, OpenAI's DALL-E 2 and Midjourney, have by contrast been dogged by controversy over copyright issues.

Adobe is trying to differentiate itself in this market and become a "white knight" in the AI arms race, emphasizing the legitimacy of its model training data and promising to cover claims in copyright disputes arising from the use of images generated by Adobe Firefly. But the strategy has not calmed all users' concerns. Some users, such as senior designer Ajie, jokingly call themselves "Adobe genuine victims", meaning victims of having paid for genuine Adobe software. In their view, using its huge creative ecosystem to train AI may be a smart business strategy for Adobe, but for users, a fair distribution of benefits between the platform and creators is missing, as is the user’s right to know.

In addition, copyright disputes involving Adobe have repeatedly come to light overseas, causing users to question whether Adobe really respects the copyright of creators. For example, artist Brian Kesinger discovered that AI-generated images similar to his work were being sold under his name in the Adobe image library without his consent. The estate of photographer Ansel Adams has also publicly accused Adobe of selling generative AI replicas of the late photographer's work.

Under pressure from public opinion, Adobe revised its terms of service on June 19, making it clear that it will not use user content stored locally or in the cloud to train AI models. But the clarification did not entirely quell creators' concerns. Some well-known bloggers in the overseas AI community pointed out that Adobe's revised terms of service still allow users' private cloud data to be used to train machine learning models for non-generative AI tools. Although users can opt out of "content analysis", the complicated opt-out procedure puts many users off.

Different countries and regions have different regulations on user data protection, which also affects how social media platforms draft their terms of service. For example, under the General Data Protection Regulation (GDPR), users in the UK and EU have a “right to object” and can explicitly opt out of having their personal data used to train Meta’s artificial intelligence models. U.S. users, however, do not have an equivalent right. According to Meta's existing data sharing policy, content posted by U.S. users on Meta's social media products may already have been used to train AI without their explicit consent.

Data has been hailed as the “new oil” of the AI era, but there are still many gray areas in how this resource is “extracted”. Some technology companies have been vague about how they obtain user data, which creates a double dilemma for users' rights: who owns the digital copyright, and how data privacy is protected. This seriously damages users' trust in the platforms.

At present, platforms still have major deficiencies in ensuring that generative AI does not infringe on the rights of creators, and adequate supervision is also lacking. Some developers and creators have taken action and launched a series of "anti-AI" tools, from the work protection tool Glaze, to the AI data poisoning tool Nightshade, to the anti-AI community Cara, which has become popular. As technology companies scrape data to train AI models without the consent of users and creators, people's anger has intensified.

Today, with AI technology developing rapidly, balancing technological innovation against user privacy and security while protecting the rights and interests of creators still requires further maturing of the industry and continuous improvement of laws and regulation. At the same time, users need to be more vigilant, understand their data rights, and take action when necessary to protect their creations and privacy.

The Adobe incident is just the tip of the iceberg in the struggle over data in the AI era. In the future, finding a balance between technological progress and user rights will become an important issue for all technology companies and regulatory agencies. Only by strengthening supervision and clarifying data usage rules can we build a healthier and more sustainable AI ecosystem.