As AI technology advances rapidly, users increasingly expect personalized experiences. Getting AI to understand what an individual user actually wants, and to tailor its output accordingly, remains a major challenge in the field. PMG (Personalized Multimodal Generation), a technology jointly developed by Huawei and Tsinghua University, offers a new approach to this problem. It generates personalized multimodal content, such as emoticons, T-shirt designs, and movie posters, based on a user's historical behavior and preferences, with the aim of a more attentive and convenient AI experience.

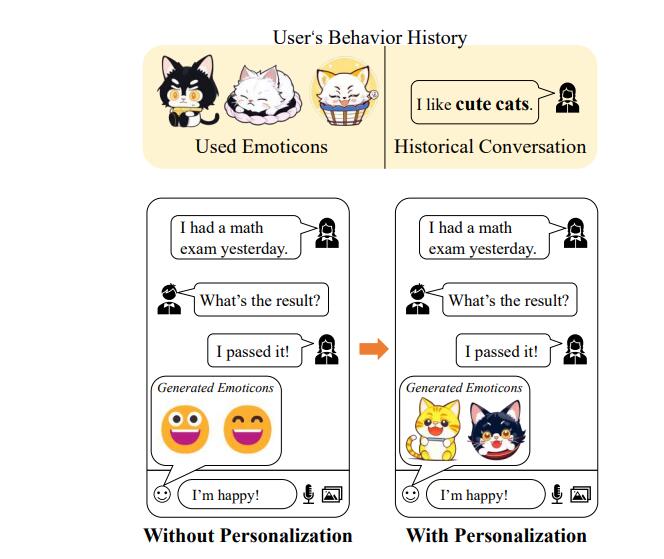

In an era where personalization matters more and more, how can AI understand you better? Imagine typing "I passed, I am very happy!" into a chat app. An AI that understands you not only recognizes your excitement but also remembers that you prefer smiling-cat emoticons, so it generates a set of smiling-cat emoticons tailored to you.

PMG (Personalized Multimodal Generation) is the result of a collaboration between Huawei and Tsinghua University in the area of personalized generation. Given a user's historical behavior and preferences, it produces multimodal content matched to that user's needs, such as emoticons, T-shirt designs, and movie posters.

How does PMG work? It uses the reasoning capabilities of a large language model to extract user preferences from the user's viewing and conversation history. This extraction produces two kinds of output: explicit keywords and implicit user preference vectors. Together, the two give the multimodal generation step a rich basis of information to work from.
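To make the explicit keyword step more concrete, here is a minimal sketch of how viewing and conversation history might be turned into a prompt for a large language model. It is an illustration only: the function names `build_keyword_prompt` and `parse_keywords`, the prompt wording, and the comma-separated reply format are all assumptions, not the prompts or code used by the PMG authors. No model is called; the sketch only builds the prompt and parses a reply.

```python
def build_keyword_prompt(viewed_items, conversation, max_keywords=5):
    """Assemble a prompt asking an LLM to summarize preferences as keywords.

    viewed_items: list of item descriptions the user has looked at.
    conversation: list of (speaker, text) tuples.
    The prompt wording is hypothetical, not taken from PMG.
    """
    if max_keywords < 1:
        raise ValueError("max_keywords must be at least 1")
    history_block = "\n".join(f"- {item}" for item in viewed_items) or "- (none)"
    dialogue_block = (
        "\n".join(f"{speaker}: {text}" for speaker, text in conversation) or "(none)"
    )
    return (
        "You are summarizing a user's preferences.\n"
        f"Items the user viewed:\n{history_block}\n"
        f"Recent conversation:\n{dialogue_block}\n"
        f"List at most {max_keywords} short keywords that describe the user's "
        "preferences, separated by commas."
    )


def parse_keywords(llm_reply, max_keywords=5):
    """Split a comma-separated reply into lowercase, de-duplicated keywords."""
    keywords = []
    for raw in llm_reply.split(","):
        keyword = raw.strip().lower()
        if keyword and keyword not in keywords:
            keywords.append(keyword)
    return keywords[:max_keywords]


# Example input based on the chat scenario described earlier in the article.
prompt = build_keyword_prompt(
    ["smiling cat sticker pack", "cartoon kitten mug"],
    [("user", "I passed, I am very happy!")],
)
print(prompt)
```

The reply from whichever model receives this prompt would then go through `parse_keywords`. The implicit preference vectors are a separate, learned signal and are not covered by this sketch.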

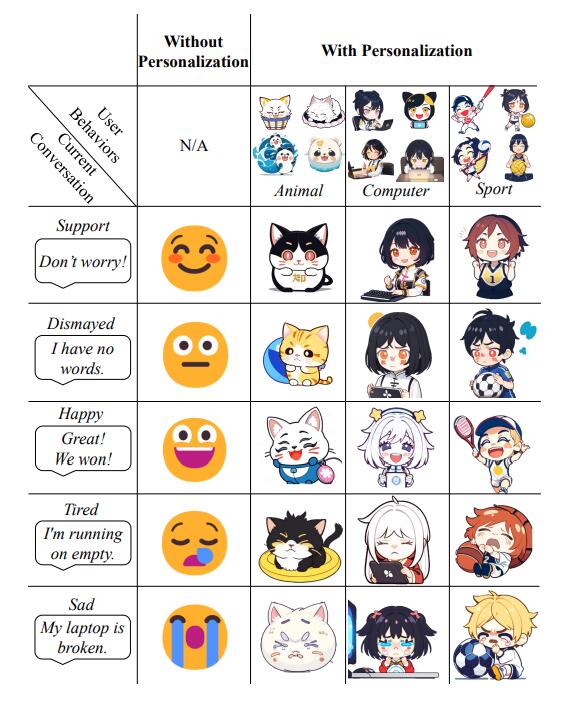

In practice, PMG consists of the following components:

Keyword generation: Construct prompts that guide the large language model to extract user preferences in the form of keywords.

Implicit vector generation: Combine the user preference keywords with the target item keywords, and use a bias correction large model fine-tuned with P-Tuning V2 to learn multimodal generation capabilities.

Balancing user preferences and target items: Compute a degree of personalization and a degree of accuracy for the generated content, use these two scores to measure generation quality quantitatively, and optimize the generated content accordingly.
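The balancing step can be illustrated with a small sketch. It assumes that each candidate image, the user preference keywords, and the target item keywords have already been mapped to vectors in a shared embedding space, that cosine similarity serves as both the personalization and the accuracy score, and that the two are combined by a weighted sum with a hypothetical weight `alpha`. These choices are assumptions made for illustration; the article does not specify how PMG computes or combines the two scores.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def balanced_score(image_vec, preference_vec, target_vec, alpha=0.5):
    """Weighted sum of personalization and accuracy (assumed scoring rule).

    alpha = 1.0 rewards only similarity to the user's preferences;
    alpha = 0.0 rewards only similarity to the target item.
    """
    personalization = cosine(image_vec, preference_vec)
    accuracy = cosine(image_vec, target_vec)
    return alpha * personalization + (1.0 - alpha) * accuracy


def pick_best(candidates, preference_vec, target_vec, alpha=0.5):
    """Return the name of the candidate with the highest balanced score."""
    return max(
        candidates,
        key=lambda name: balanced_score(
            candidates[name], preference_vec, target_vec, alpha
        ),
    )


# Toy 2-D embeddings: axis 0 stands for "user preference", axis 1 for "target item".
candidates = {
    "preference_only": [1.0, 0.0],
    "target_only": [0.0, 1.0],
    "mixed": [1.0, 1.0],
}
best = pick_best(candidates, preference_vec=[1.0, 0.0], target_vec=[0.0, 1.0])
print(best)
```

With equal weighting, the candidate that reflects both the preference and the target item scores highest in this toy setup. Moving `alpha` toward 0 or 1 shifts the choice toward the target item or the user's preferences respectively.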

The research team validated PMG in three application scenarios: e-commerce clothing image generation, movie poster generation, and emoticon generation. The experimental results show that PMG generates personalized content that reflects user preferences and performs well on the image similarity metrics LPIPS and SSIM.
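For readers unfamiliar with these metrics: SSIM compares the luminance, contrast, and structure of two images, and higher values mean the images are more similar, with 1 for identical images. LPIPS measures distance between deep network features, so lower values mean more similar. The sketch below computes a simplified SSIM over a whole grayscale image treated as a flat list of pixel values. This single-window form is an assumption made for brevity. Standard SSIM implementations average the same formula over local windows, and this is not the evaluation code used in the PMG experiments. LPIPS is omitted because it requires a pretrained network.

```python
def global_ssim(x, y, data_range=255.0):
    """Simplified SSIM using one window that covers the whole image.

    x, y: equal-length, non-empty sequences of grayscale pixel values.
    data_range: difference between the largest and smallest possible pixel value.
    """
    if len(x) != len(y) or len(x) == 0:
        raise ValueError("images must be non-empty and the same size")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    var_x = sum((a - mean_x) * (a - mean_x) for a in x) / n
    var_y = sum((b - mean_y) * (b - mean_y) for b in y) / n
    cov_xy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y)) / n
    # Stabilizing constants from the standard SSIM definition.
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    numerator = (2 * mean_x * mean_y + c1) * (2 * cov_xy + c2)
    denominator = (mean_x**2 + mean_y**2 + c1) * (var_x + var_y + c2)
    return numerator / denominator


# Toy 2x2 "images" flattened to lists of pixel values.
image_a = [0.0, 0.0, 255.0, 255.0]
image_b = [255.0, 255.0, 0.0, 0.0]  # image_a with dark and bright swapped
same = global_ssim(image_a, image_a)
different = global_ssim(image_a, image_b)
print(same, different)
```

Comparing an image with itself gives a score of 1 (up to floating-point rounding), while the inverted image scores far lower.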

Beyond its research contribution, PMG shows potential for practical and commercial use. As demand for personalization grows, the technology is expected to see wider adoption and to give users richer, more personalized experiences.

Project address: https://github.com/mindspore-lab/models/tree/master/research/huawei-noah/PMG

In summary, PMG combines large language models with multimodal generation to produce highly personalized content that is more creative and closer to what users need. It has broad application prospects in e-commerce, entertainment, and other fields, and its further development is worth watching.